Articles

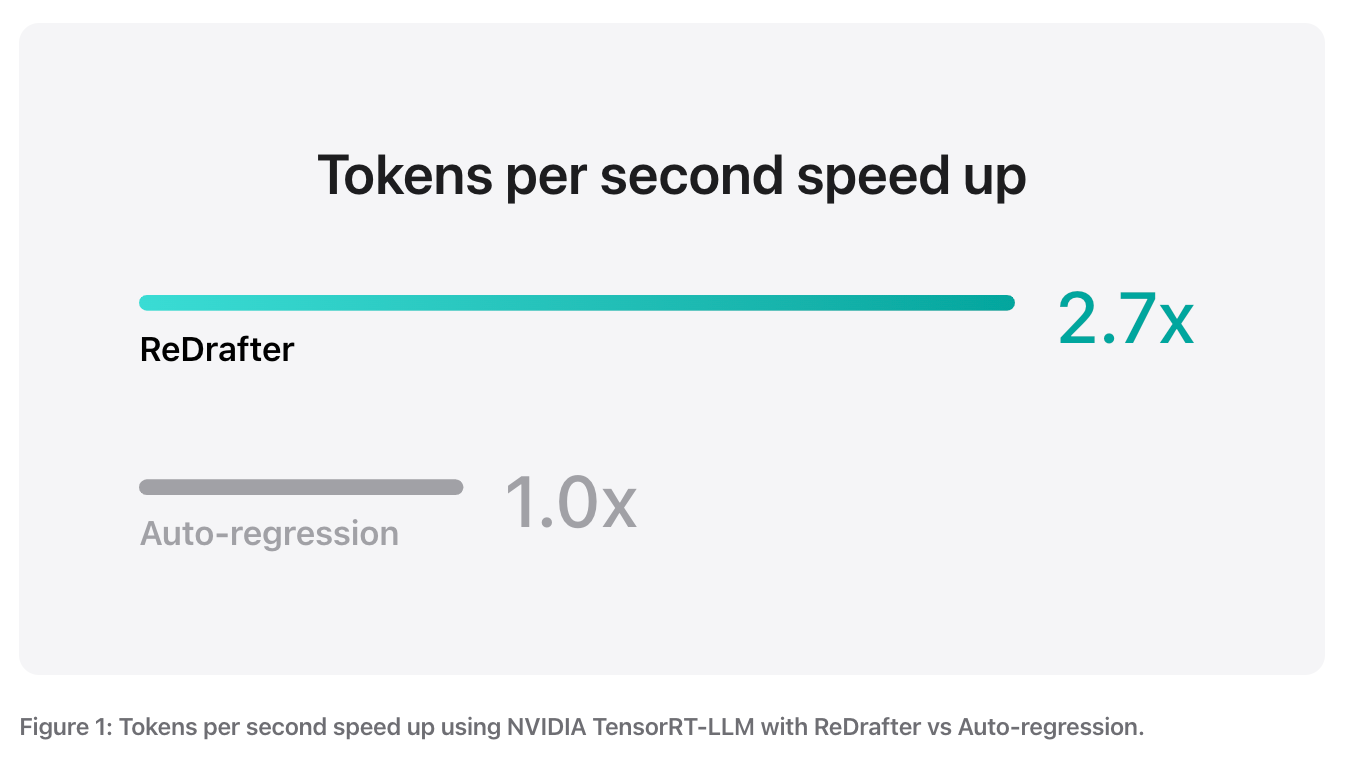

Apple has published a blog post on integrating ReDrafter into NVIDIA's TensorRT-LLM framework, which makes LLM inference much more efficient. Combining this novel speculative decoding technique with NVIDIA's improved TensorRT-LLM library produced a 2.7x speed-up in token generation, along with reduced resource usage. The two companies worked together to enable the technique in production.

Apple's ReDrafter is a state-of-the-art approach to speculative decoding, which speeds up generation by drafting several tokens in advance and having the main model verify them.

I covered speculative decoding in much more detail in a previous post; you may want to refresh your memory there before reading about ReDrafter's advantages over vanilla speculative decoding.

ReDrafter modifies standard speculative decoding along the following dimensions:

RNN Draft Model: ReDrafter uses a recurrent neural network (RNN) as its draft model. RNNs are well suited to sequential data and carry context forward from one step to the next, which makes them effective for predicting several tokens ahead.

Beam Search: The draft model uses beam search to explore multiple candidate sequences simultaneously, keeping track of the most promising ones. This lets ReDrafter propose several token paths for verification, increasing the chance that a long draft is accepted.

Dynamic Tree Attention: Candidate sequences from beam search often share prefixes. Dynamic tree attention organizes them into a tree of possible token sequences so that shared prefixes are computed only once when the main LLM verifies the candidates, which makes checking many candidates at once considerably cheaper.

Performance Improvement: ReDrafter achieves up to 3.5 tokens per generation step for open-source models, surpassing the performance of previous speculative decoding techniques.
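To see where the "tokens per generation step" figure comes from, here is a minimal sketch of one greedy verification step. It assumes greedy acceptance: each draft candidate is checked token by token against the target model, the candidate with the longest matching prefix wins, and the target model adds one token of its own. The drafts are hard-coded stand-ins for what an RNN drafter with beam search would produce, and `toy_target` is a hypothetical model; this is not Apple's or NVIDIA's implementation.

```python
from typing import Callable, List, Sequence

Token = int


def verify_candidates(
    prefix: Sequence[Token],
    candidates: List[List[Token]],
    target_next: Callable[[Sequence[Token]], Token],
) -> List[Token]:
    """Return the tokens accepted in one speculative decoding step (greedy).

    Each draft candidate is checked token by token against the target model's
    greedy choice; the candidate with the longest matching prefix wins. The
    target model then contributes one more token of its own, so every step
    yields at least one new token.
    """
    best: List[Token] = []
    for cand in candidates:
        accepted: List[Token] = []
        for tok in cand:
            # A real system scores all draft positions in one batched forward
            # pass; calling the target per position keeps this sketch simple.
            if target_next(list(prefix) + accepted) != tok:
                break
            accepted.append(tok)
        if len(accepted) > len(best):
            best = accepted
    # Bonus token: the target model's own prediction after the accepted run.
    return best + [target_next(list(prefix) + best)]


def toy_target(seq: Sequence[Token]) -> Token:
    """Hypothetical target model: always predicts (last token + 1) mod 10."""
    return (seq[-1] + 1) % 10


# Hard-coded stand-ins for beam-search drafts from a draft model.
drafts = [[1, 2, 3], [1, 2, 4, 5], [2, 3]]
print(verify_candidates([0], drafts, toy_target))  # [1, 2, 3, 4]
```

Here a single verification step yields four tokens instead of one; averaging this count over a whole generation gives the kind of tokens-per-step figure quoted above.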

Especially in terms of efficiency, the ReDrafter approach offers several advantages over other speculative decoding methods:

Improved Efficiency: By generating up to 3.5 tokens per step, ReDrafter significantly reduces the number of forward passes through the main LLM, leading to faster overall generation.

Adaptive Prediction: The combination of an RNN draft model with beam search allows ReDrafter to make more context-aware and flexible predictions compared to simpler draft models.

Better Handling of Uncertainty: The dynamic tree attention mechanism enables ReDrafter to efficiently manage multiple possible token sequences, adapting its predictions as more context becomes available.
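Beam-search candidates often share their first few tokens. A minimal sketch of the prefix-sharing idea behind tree attention: merge the candidates into a prefix tree, then build an attention mask in which each draft token attends only to itself and its ancestors, so each shared prefix appears once. This is an illustration under our own assumptions, not ReDrafter's or TensorRT-LLM's implementation; `build_draft_tree` and `tree_attention_mask` are hypothetical names, and a real kernel also has to handle position ids and the KV cache, which this omits.

```python
from typing import Dict, List, Tuple


def build_draft_tree(candidates: List[List[int]]) -> Tuple[List[int], List[int]]:
    """Merge beam candidates into a prefix tree.

    Returns (tokens, parents) with one entry per unique node; parents[i] is the
    index of node i's parent, or -1 if it hangs directly off the accepted context.
    """
    tokens: List[int] = []
    parents: List[int] = []
    children: Dict[Tuple[int, int], int] = {}  # (parent index, token) -> node index
    for cand in candidates:
        parent = -1
        for tok in cand:
            key = (parent, tok)
            if key not in children:  # only unseen prefixes create new nodes
                children[key] = len(tokens)
                tokens.append(tok)
                parents.append(parent)
            parent = children[key]
    return tokens, parents


def tree_attention_mask(parents: List[int]) -> List[List[bool]]:
    """mask[i][j] is True when node i may attend to node j (j is i or an ancestor)."""
    n = len(parents)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        j = i
        while j != -1:  # walk up to the root, marking each ancestor
            mask[i][j] = True
            j = parents[j]
    return mask


tokens, parents = build_draft_tree([[1, 2, 3], [1, 2, 4], [1, 5, 6]])
print(tokens)   # [1, 2, 3, 4, 5, 6]
print(parents)  # [-1, 0, 1, 1, 0, 4]
```

The three candidates contain nine draft tokens, but the tree has only six nodes, so the main model scores six positions instead of nine.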

NVIDIA's blog post has more details, especially on the infrastructure work needed to support this use case in the TensorRT-LLM library.

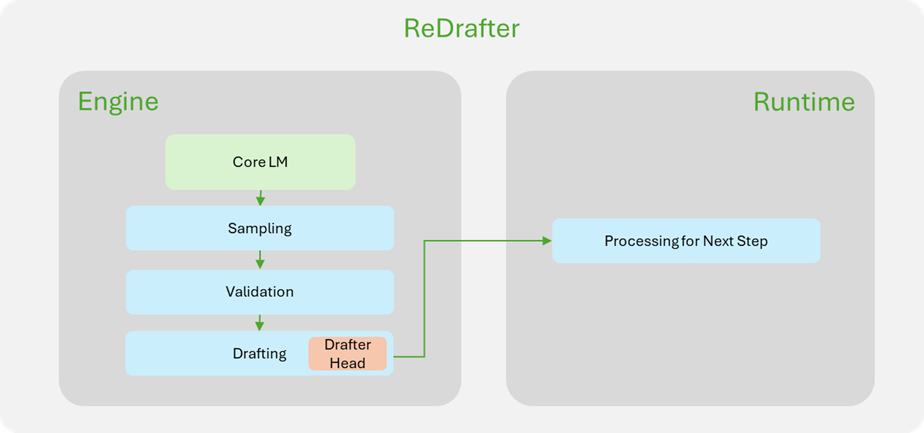

NVIDIA supported this effort mainly through:

New Operators: ReDrafter's beam search and tree attention algorithms rely on operators that TensorRT-LLM had not previously used, so NVIDIA added new operators and exposed existing ones to support them.

Enhanced Framework Capabilities: These additions have considerably improved TensorRT-LLM's ability to accommodate sophisticated models and decoding methods.

Google Research has introduced a novel neural method called ZeroBAS for zero-shot mono-to-binaural speech synthesis. This technique allows for the generation of binaural audio from monaural audio recordings and positional information without the need for training on binaural data.

ZeroBAS has two key components:

A parameter-free transform

An off-the-shelf mono audio denoising model

The system takes a monaural audio input and positional information, then applies these components to synthesize binaural audio output.

Parameter-free Transform

The initial step uses a simple, parameter-free transform to create a preliminary binauralization of the mono audio. Rather than learned weights, it applies basic acoustic principles: the paper describes it as geometric time warping and amplitude scaling based on the source location supplied as positional information.
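As a rough picture of what a parameter-free, position-based transform can look like, here is a minimal sketch assuming a static source and a free-field model: each ear receives the mono signal delayed by its distance to the source divided by the speed of sound, and scaled by inverse distance. The head geometry (`EAR_OFFSET`), the whole-sample rounding of the delay, and the function name are our own assumptions; this is not ZeroBAS's exact transform.

```python
import math
from typing import List, Tuple

SPEED_OF_SOUND = 343.0  # m/s, approximately, in air at room temperature
EAR_OFFSET = 0.0875     # m, hypothetical half head width (ears at x = +/-EAR_OFFSET)


def mono_to_binaural(
    mono: List[float], source_xy: Tuple[float, float], sample_rate: int = 16000
) -> Tuple[List[float], List[float]]:
    """Crude parameter-free binauralization of a static point source.

    Each ear gets the mono signal delayed by its distance to the source
    (rounded to whole samples) and scaled by 1 / distance.
    """
    sx, sy = source_xy
    channels = []
    for ear_x in (-EAR_OFFSET, EAR_OFFSET):  # left ear first, then right ear
        dist = max(math.hypot(sx - ear_x, sy), 1e-3)  # guard against division by zero
        delay = round(dist / SPEED_OF_SOUND * sample_rate)
        gain = 1.0 / dist
        delayed = ([0.0] * delay + mono)[: len(mono)]  # keep the original length
        channels.append([gain * s for s in delayed])
    return channels[0], channels[1]


# An impulse from a source 1 m to the listener's right.
left, right = mono_to_binaural([1.0] + [0.0] * 199, (1.0, 0.0))
print(right.index(max(right)), left.index(max(left)))  # 43 51
```

The right channel arrives earlier and louder than the left, which is the interaural time and level difference such a transform is meant to approximate; a moving source would need a time-varying delay instead of a single shift.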

The core innovation of ZeroBAS is the use of an off-the-shelf mono audio denoising model to enhance the initial binauralization. This model, which was not specifically trained for binaural tasks, demonstrates a surprising capability to bridge the representation gap between mono and binaural audio.
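The refinement stage can be pictured as repeatedly passing each channel of the rough binaural signal through a mono denoiser. This is a minimal sketch under stated assumptions: the denoiser is applied to the left and right channels independently for a fixed number of iterations, and `moving_average_denoiser` is a hypothetical stand-in (3-tap smoothing), not the pretrained model ZeroBAS actually uses.

```python
from typing import Callable, List, Tuple

Signal = List[float]


def moving_average_denoiser(x: Signal) -> Signal:
    """Hypothetical stand-in for a pretrained mono denoiser: 3-tap smoothing."""
    out = []
    for i in range(len(x)):
        window = x[max(i - 1, 0): i + 2]  # shrinks at the edges of the signal
        out.append(sum(window) / len(window))
    return out


def refine_binaural(
    left: Signal,
    right: Signal,
    denoise: Callable[[Signal], Signal],
    iterations: int = 3,
) -> Tuple[Signal, Signal]:
    """Repeatedly run a mono denoiser over each channel of a rough binaural signal."""
    for _ in range(iterations):
        left, right = denoise(left), denoise(right)
    return left, right


l, r = refine_binaural([0.0, 0.0, 3.0, 0.0, 0.0], [0.0] * 5, moving_average_denoiser, iterations=1)
print(l)  # [0.0, 1.0, 1.0, 1.0, 0.0]
```

Because the denoiser only ever sees one mono channel at a time, any mono model with the same signature could be dropped in, which mirrors the paper's point that the model was never trained on binaural data.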

Further, it has the following properties:

1. Zero-shot Performance: In subjective evaluations, ZeroBAS matches or outperforms existing state-of-the-art neural mono-to-binaural renderers, despite never being trained on binaural data.

2. Universal Applicability: The method serves as a strong initial baseline for universal mono-to-binaural synthesis, capable of generalizing to arbitrary environments.

3. Overcoming Limitations: Analysis using a new benchmark (TUT Mono-to-Binaural) revealed that existing neural mono-to-binaural methods are often overfit to non-spatial acoustic properties. ZeroBAS addresses this limitation.

4. Representation Gap: Comprehensive ablations were performed to understand how ZeroBAS bridges the representation gap between mono and binaural audio.

5. Metric Analysis: The research team analyzed how current mono-to-binaural automated metrics are decorrelated from human ratings, highlighting the need for improved evaluation methods.

ZeroBAS also has the following implications:

1. Generalization: ZeroBAS demonstrates the potential for creating more generalizable audio synthesis models that can adapt to various acoustic environments without specific training.

2. Transfer Learning: The success of using a mono audio denoising model for binaural synthesis suggests promising avenues for transfer learning in audio processing tasks.

3. Metric Development: The findings on metric decorrelation emphasize the importance of developing more accurate automated evaluation metrics for binaural audio quality.

4. Real-time Processing: While not explicitly stated, the use of a parameter-free transform and an existing

Libraries

DeepRec is a high-performance recommendation deep learning framework based on TensorFlow 1.15, Intel-TensorFlow and NVIDIA-TensorFlow. It is an incubation project hosted by the LF AI & Data Foundation.

TapeAgents is a framework that leverages a structured, replayable log (Tape) of the agent session to facilitate all stages of the LLM Agent development lifecycle. In TapeAgents, the agent reasons by processing the tape and the LLM output to produce new thoughts, actions, control flow steps and append them to the tape. The environment then reacts to the agent’s actions by likewise appending observation steps to the tape.

Key features:

Build your agent as a low-level state machine, as a high-level multi-agent team configuration, or as a mono-agent guided by multiple prompts

Debug your agent with TapeAgent studio or TapeBrowser apps

Serve your agent with response streaming

Optimize your agent's configuration using successful tapes; finetune the LLM using revised tapes
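To make the tape idea concrete, here is a minimal sketch of an append-only session log in which the agent adds thoughts and actions and the environment answers each action with an observation step. All names (`Step`, `Tape`, `run_session`, `toy_agent`, `toy_env`) are hypothetical and do not reflect the actual TapeAgents API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Literal

StepKind = Literal["thought", "action", "observation"]


@dataclass(frozen=True)
class Step:
    kind: StepKind
    content: str


@dataclass
class Tape:
    steps: List[Step] = field(default_factory=list)

    def append(self, step: Step) -> None:
        self.steps.append(step)  # steps are only ever appended, never edited


def run_session(
    tape: Tape,
    agent: Callable[[Tape], List[Step]],
    environment: Callable[[Step], Step],
    max_turns: int = 3,
) -> Tape:
    """Alternate agent and environment turns, logging every step on the tape."""
    for _ in range(max_turns):
        new_steps = agent(tape)  # the agent reasons over the whole tape so far
        if not new_steps:
            break
        for step in new_steps:
            tape.append(step)
            if step.kind == "action":
                tape.append(environment(step))  # environment replies with an observation
    return tape


def toy_agent(tape: Tape) -> List[Step]:
    """Hypothetical agent: acts once, then stops after seeing an observation."""
    if any(s.kind == "observation" for s in tape.steps):
        return []
    return [Step("thought", "I should check the weather"), Step("action", "get_weather('Paris')")]


def toy_env(action: Step) -> Step:
    """Hypothetical environment that always reports sunny weather."""
    return Step("observation", "sunny")


session = run_session(Tape(), toy_agent, toy_env)
print([s.kind for s in session.steps])  # ['thought', 'action', 'observation']
```

Because every step lands on the tape, the finished tape is a complete record of the session that can be inspected, replayed, or reused as training data.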

DevOpsGPT combines large language models (LLMs) with DevOps tools to convert natural-language requirements into working software. This approach greatly improves development efficiency, shortens development cycles, and reduces communication costs, resulting in higher-quality software delivery.

🚀LLaMA2-Accessory is an open-source toolkit for pretraining, finetuning and deployment of Large Language Models (LLMs) and multimodal LLMs. The repo is largely derived from LLaMA-Adapter, with more advanced features.🧠

✨Within this toolkit, the authors present SPHINX, a versatile multimodal large language model (MLLM) that combines a diverse array of training tasks, data domains, and visual embeddings.

Dify is an open-source LLM app development platform. Its intuitive interface combines agentic AI workflow, RAG pipeline, agent capabilities, model management, observability features and more, letting you quickly go from prototype to production.

Below the fold

egui (pronounced "e-gooey") is a simple, fast, and highly portable immediate mode GUI library for Rust. egui runs on the web, natively, and in your favorite game engine.

egui aims to be the easiest-to-use Rust GUI library, and the simplest way to make a web app in Rust.

egui can be used anywhere you can draw textured triangles, which means you can easily integrate it into your game engine of choice.

bg-remove is a powerful React + Vite application that removes backgrounds from images directly in your browser. The app runs machine learning models through Transformers.js to process media locally, so your files never leave your device.

🎯 One-click background removal for images

🎨 Custom background color and image selection

💾 Download options for both transparent and colored backgrounds

🏃♂️ Local processing - no server uploads needed

🔒 Privacy-focused - all processing happens in your browser

⚡ Optional WebGPU acceleration for supported browsers
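Both the colored-background and transparent download options come down to how the segmentation mask is used. A minimal sketch of that step, assuming the model outputs a per-pixel alpha value in [0, 1]: blending with a solid color uses out = alpha * foreground + (1 - alpha) * background per channel, while a transparent image simply stores the mask as its alpha channel. The app itself is React/TypeScript on Transformers.js; these Python function names are hypothetical and only illustrate the arithmetic.

```python
from typing import List, Tuple

RGB = Tuple[float, ...]   # (r, g, b), each channel in [0, 1]
RGBA = Tuple[float, ...]  # (r, g, b, a)


def composite_on_color(foreground: List[RGB], alpha: List[float], background: RGB) -> List[RGB]:
    """Blend each pixel over a solid color: a * fg + (1 - a) * bg, per channel."""
    return [
        tuple(a * f + (1.0 - a) * b for f, b in zip(pixel, background))
        for pixel, a in zip(foreground, alpha)
    ]


def to_transparent(foreground: List[RGB], alpha: List[float]) -> List[RGBA]:
    """Keep the original colors and store the mask as the alpha channel."""
    return [(*pixel, a) for pixel, a in zip(foreground, alpha)]


red = (1.0, 0.0, 0.0)
blue = (0.0, 0.0, 1.0)
print(composite_on_color([red, red], [1.0, 0.0], blue))  # [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
```

A pixel the mask keeps (alpha 1) stays red, while a background pixel (alpha 0) takes the chosen color; fractional mask values at object edges blend the two, which avoids hard, jagged cutouts.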