Libraries

TorchRec is a PyTorch domain library built to provide common sparsity and parallelism primitives needed for large-scale recommender systems (RecSys). It allows authors to train models with large embedding tables sharded across many GPUs. An example of how to use TorchRec to build a recommendation model is available here.
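To make "embedding tables sharded across many GPUs" concrete, here is a toy sketch of row-wise sharding in plain Python, with lists standing in for GPUs. It is not TorchRec's API. TorchRec supports several sharding schemes, such as table-wise and row-wise, and handles the cross-device communication itself. The class `RowWiseShardedTable` and its methods are hypothetical names chosen for illustration.

```python
import random

class RowWiseShardedTable:
    """Toy row-wise sharded embedding table (hypothetical, NOT TorchRec's API)."""

    def __init__(self, num_rows, dim, num_shards, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        self.num_shards = num_shards
        # Rows are split into contiguous blocks: shard s owns rows
        # [s * rows_per_shard, (s + 1) * rows_per_shard).
        self.rows_per_shard = -(-num_rows // num_shards)  # ceiling division
        self.shards = [dict() for _ in range(num_shards)]  # one dict per "device"
        for row in range(num_rows):
            vec = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
            self.shards[self.owner(row)][row] = vec

    def owner(self, row):
        # Which shard ("device") stores this row.
        return row // self.rows_per_shard

    def pooled_lookup(self, ids):
        # Route each id to its owning shard, then sum-pool the returned rows,
        # similar to an embedding bag with sum pooling.
        pooled = [0.0] * self.dim
        for i in ids:
            vec = self.shards[self.owner(i)][i]
            pooled = [p + v for p, v in zip(pooled, vec)]
        return pooled

table = RowWiseShardedTable(num_rows=10, dim=4, num_shards=3)
print(table.owner(9))                    # 2
print([len(s) for s in table.shards])    # [4, 4, 2]
print(table.pooled_lookup([1, 5]))       # rows 1 and 5 live on different shards
```

Row-wise sharding lets a single table larger than one device's memory be spread across devices. The cost is that each lookup must be routed to the shard that owns the row.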

Diffrax is a JAX-based library providing numerical differential equation solvers.

Features include:

ODE/SDE/CDE (ordinary/stochastic/controlled) solvers

Lots of different solvers (including Tsit5, Dopri8, symplectic solvers, implicit solvers)

vmappable everything (including the region of integration)

Using a PyTree as the state

Dense solutions

Multiple adjoint methods for backpropagation

Support for neural differential equations
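The sketch below shows what "using a PyTree as the state" means for an ODE solver. It is a plain-Python fixed-step RK4 integrator whose state is a nested dict. It is not Diffrax's API and not its Tsit5 solver. In Diffrax itself, the vector field, with signature `f(t, y, args)`, would be wrapped in `diffrax.ODETerm` and passed to `diffrax.diffeqsolve` together with a solver such as `diffrax.Tsit5()`. The helper names `tree_map` and `rk4_solve` below are hypothetical, and `args` is dropped for brevity.

```python
import math

def tree_map(f, *trees):
    # Apply f leaf by leaf across trees that share the same nested-dict structure.
    first = trees[0]
    if isinstance(first, dict):
        return {key: tree_map(f, *(tree[key] for tree in trees)) for key in first}
    return f(*trees)

def rk4_solve(vector_field, y0, t0, t1, dt):
    # Classic fixed-step fourth-order Runge-Kutta; y may be any nested dict of floats.
    n_steps = round((t1 - t0) / dt)
    y = y0
    for i in range(n_steps):
        t = t0 + i * dt
        k1 = vector_field(t, y)
        k2 = vector_field(t + dt / 2, tree_map(lambda a, b: a + dt / 2 * b, y, k1))
        k3 = vector_field(t + dt / 2, tree_map(lambda a, b: a + dt / 2 * b, y, k2))
        k4 = vector_field(t + dt, tree_map(lambda a, b: a + dt * b, y, k3))
        y = tree_map(
            lambda a, b1, b2, b3, b4: a + dt / 6 * (b1 + 2 * b2 + 2 * b3 + b4),
            y, k1, k2, k3, k4,
        )
    return y

def vector_field(t, y):
    # Harmonic oscillator (pos' = vel, vel' = -pos) plus exponential decay.
    osc = y["oscillator"]
    return {
        "oscillator": {"pos": osc["vel"], "vel": -osc["pos"]},
        "decay": -y["decay"],
    }

y0 = {"oscillator": {"pos": 1.0, "vel": 0.0}, "decay": 1.0}
y1 = rk4_solve(vector_field, y0, t0=0.0, t1=1.0, dt=0.01)
print(y1["oscillator"]["pos"], math.cos(1.0))  # numerical vs exact
print(y1["decay"], math.exp(-1.0))             # numerical vs exact
```

Because the solver only touches the state through `tree_map`, the same integrator works for any nested structure of leaves. That is the convenience a PyTree state provides.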

CORDS is a COReset and Data Selection library for making machine learning time, energy, cost, and compute efficient. CORDS is built on top of PyTorch. Deep learning systems today are extremely compute-intensive, with long turnaround times, energy inefficiencies, and high costs and resource requirements. CORDS is an effort to make deep learning more energy, cost, resource, and time efficient without sacrificing accuracy.
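As an illustration of the coreset idea, namely training on a small, representative subset instead of the full dataset, the sketch below implements greedy k-center selection in plain Python. This is a classic coreset heuristic shown for illustration. It is not CORDS's API, and it is not taken from CORDS. The function name `greedy_k_center` and the feature vectors are hypothetical.

```python
import math

def greedy_k_center(points, k, first=0):
    # Greedy k-center: repeatedly add the point farthest from the chosen centers.
    if k <= 0:
        return []
    k = min(k, len(points))
    selected = [first]
    # Distance from each point to its nearest already-selected center.
    min_dist = [math.dist(p, points[first]) for p in points]
    while len(selected) < k:
        # Pick the point that is currently worst covered by the selection.
        nxt = max(range(len(points)), key=lambda i: min_dist[i])
        selected.append(nxt)
        min_dist = [min(d, math.dist(p, points[nxt])) for d, p in zip(min_dist, points)]
    return selected

# Hypothetical 2-D feature vectors for five training examples.
features = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (10.0, 0.0)]
print(greedy_k_center(features, k=3))  # [0, 4, 2]
```

Here the selected subset skips the near-duplicates `(0.1, 0.0)` and `(5.1, 5.0)`. Training on only the selected examples is where the compute savings would come from.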

Articles

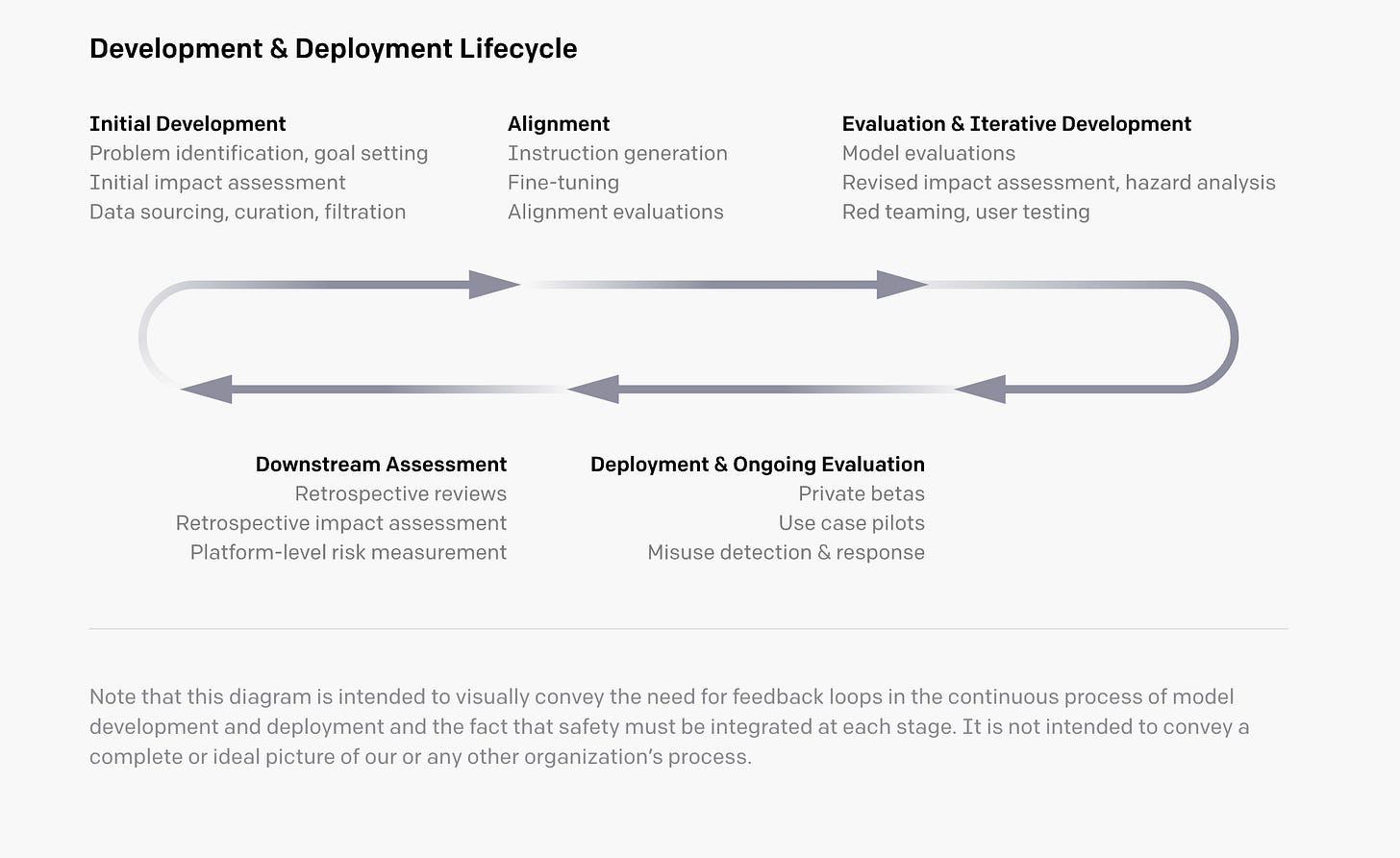

OpenAI wrote about the safety of their models and how they prevent misuse in the following blog post.

They grouped their investments in this area into the following categories:

Pre-training data curation and filtering

Fine-tuning models to better follow instructions

Risk analysis of potential deployments

Providing detailed user documentation

Building tools to screen harmful model outputs

Reviewing use cases against our policies

Monitoring for signs of misuse

Studying the impacts of our models

Google wrote a blog post introducing Shift-Robust GNN (SR-GNN), a variant of Graph Neural Networks (GNNs) for training on biased data. The approach is designed to account for distributional differences between biased training data and a graph’s true inference distribution: SR-GNN adapts GNN models to the presence of distributional shift between the nodes labeled for training and the rest of the dataset.
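One generic way to quantify the kind of shift described above is to compare the statistical moments of the embeddings of the labeled training nodes with those of an unbiased sample of nodes. The sketch below computes a moment-matching discrepancy in the style of central moment discrepancy (CMD). It assumes every embedding coordinate lies in [0, 1], so CMD's range scaling is omitted. It is an illustration only, not SR-GNN's exact regularizer. The function names and embeddings are hypothetical. In a real model this would be computed on differentiable tensors and added, with some weight, to the training loss.

```python
import math
from statistics import fmean

def moments(vectors, order):
    # Per-dimension mean (order 1) or central moment (order >= 2).
    dims = list(zip(*vectors))  # one tuple of values per embedding dimension
    means = [fmean(col) for col in dims]
    if order == 1:
        return means
    return [fmean((x - m) ** order for x in col) for col, m in zip(dims, means)]

def moment_discrepancy(train_emb, iid_emb, max_order=3):
    # Sum over orders of the Euclidean distance between moment vectors.
    total = 0.0
    for k in range(1, max_order + 1):
        total += math.dist(moments(train_emb, k), moments(iid_emb, k))
    return total

train_emb = [[0.1, 0.9], [0.2, 0.8], [0.3, 0.7]]  # hypothetical biased labeled nodes
iid_emb = [[0.6, 0.4], [0.7, 0.3], [0.8, 0.2]]    # hypothetical unbiased sample
print(moment_discrepancy(train_emb, train_emb))   # 0.0
print(moment_discrepancy(train_emb, iid_emb))     # driven by the shifted means
```

A penalty like this is zero when the two sets share the same moments and grows as the biased training distribution drifts from the unbiased sample.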

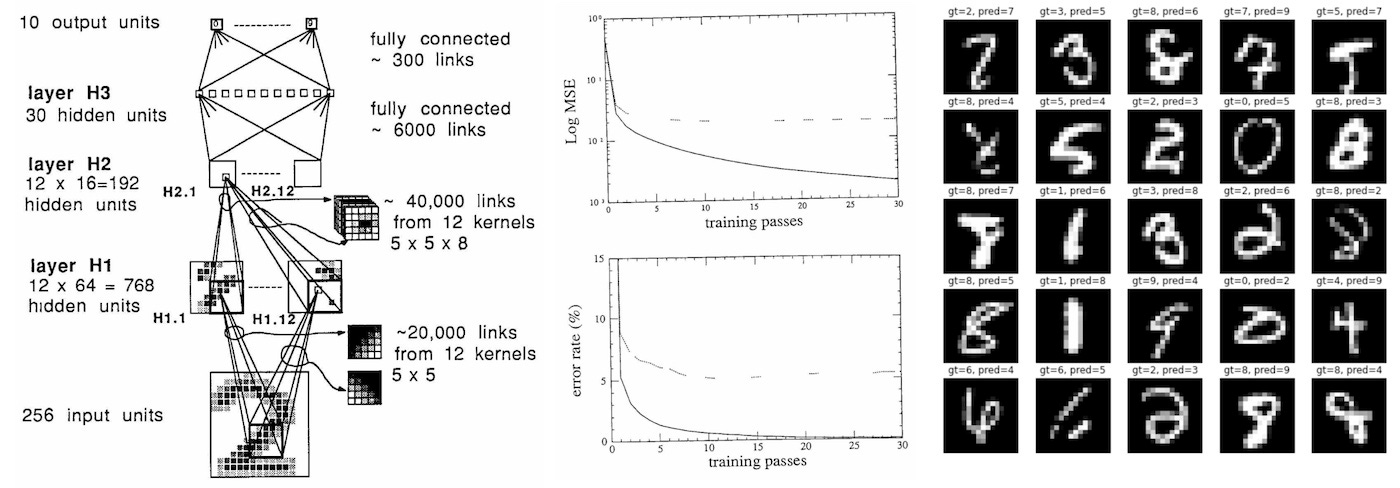

Andrej Karpathy wrote an interesting blog post in which he reproduces the 1989 paper by LeCun et al. in PyTorch. The paper is here, and the code is available here as well. The reflections section draws a number of comparisons between today’s deep learning methods and what was available in 1989.

Reflections from the post:

First of all, not much has changed in 33 years on the macro level. We’re still setting up differentiable neural net architectures made of layers of neurons and optimizing them end-to-end with backpropagation and stochastic gradient descent. Everything reads remarkably familiar, except it is smaller.

The dataset is a baby by today’s standards: The training set is just 7291 16x16 greyscale images. Today’s vision datasets typically contain a few hundred million high-resolution color images from the web (e.g. Google has JFT-300M, OpenAI CLIP was trained on a 400M), but grow to as large as a small few billion. This is approx. ~1000X pixel information per image (384*384*3/(16*16)) times 100,000X the number of images (1e9/1e4), for a rough 100,000,000X more pixel data at the input.

The neural net is also a baby: This 1989 net has approx. 9760 params, 64K MACs, and 1K activations. Modern (vision) neural nets are on the scale of small few billion parameters (1,000,000X) and O(~1e12) MACs (~10,000,000X). Natural language models can reach into trillions of parameters.

A state of the art classifier that took 3 days to train on a workstation now trains in 90 seconds on my fanless laptop (3,000X naive speedup), and further ~100X gains are very likely possible by switching to full-batch optimization and utilizing a GPU.

I was, in fact, able to tune the model, augmentation, loss function, and the optimization based on modern R&D innovations to cut down the error rate by 60%, while keeping the dataset and the test-time latency of the model unchanged.

Modest gains were attainable just by scaling up the dataset alone.

Further significant gains would likely have to come from a larger model, which would require more compute, and additional R&D to help stabilize the training at increasing scales. In particular, if I was transported to 1989, I would have ultimately become upper-bounded in my ability to further improve the system without a bigger computer.
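The network-size figures quoted in the reflections (approx. 9760 params, 64K MACs, 1K activations) can be checked with a few lines of arithmetic. The layer sizes below are our assumption about the 1989 architecture, not numbers taken from the post: 12 feature maps of 8x8 with 5x5 kernels, 12 maps of 4x4 where each unit sees 8 of the 12 previous maps, 30 hidden units, 10 outputs, and one bias per unit in the convolutional layers. The helper names are hypothetical.

```python
def conv_layer(out_maps, out_hw, in_maps_per_unit, kernel):
    # Weight-shared conv layer with one bias per output unit (assumption).
    weights = out_maps * in_maps_per_unit * kernel * kernel
    units = out_maps * out_hw * out_hw
    macs = units * in_maps_per_unit * kernel * kernel
    return weights + units, macs, units  # (params, MACs, activations)

def dense_layer(n_in, n_out):
    # Fully connected layer with one bias per output unit.
    return n_in * n_out + n_out, n_in * n_out, n_out

layers = [
    conv_layer(12, 8, 1, 5),      # H1: 12 maps of 8x8 from the 16x16 input
    conv_layer(12, 4, 8, 5),      # H2: 12 maps of 4x4, each unit sees 8 H1 maps
    dense_layer(12 * 4 * 4, 30),  # H3: 30 fully connected hidden units
    dense_layer(30, 10),          # output: 10 digit classes
]
params = sum(layer[0] for layer in layers)
macs = sum(layer[1] for layer in layers)
activations = sum(layer[2] for layer in layers)
print(params, macs, activations)  # 9760 63660 1000
```

These assumed shapes reproduce the quoted figures, which suggests they are a reasonable reading of the architecture. Most of the compute (38,400 of 63,660 MACs) sits in the second convolutional layer.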
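The scale ratios in the reflections are rough orders of magnitude. The arithmetic below makes them explicit, using only the numbers given in the quotes: 16x16 greyscale inputs versus 384x384 RGB images, ~1e4 versus ~1e9 images, and 3 days versus 90 seconds of training. The variable names are hypothetical.

```python
pixels_per_image_1989 = 16 * 16          # greyscale digits
pixels_per_image_today = 384 * 384 * 3   # 384x384 RGB, as in the quote
pixel_ratio = pixels_per_image_today / pixels_per_image_1989
image_ratio = 1e9 / 1e4                  # 7291 training images rounded to ~1e4
print(pixel_ratio, image_ratio, pixel_ratio * image_ratio)  # 1728.0 100000.0 172800000.0

train_1989_seconds = 3 * 24 * 60 * 60    # 3 days on a workstation
train_laptop_seconds = 90                # 90 seconds on a laptop
print(train_1989_seconds / train_laptop_seconds)  # 2880.0
```

The per-image ratio is about 1.7e3, which the post rounds to ~1000X. The "rough 100,000,000X" in total pixel data is therefore an order-of-magnitude figure, and 2,880 rounds to the quoted ~3,000X speedup.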

Hamel Husain gave a presentation on how to evaluate various MLOps tools in the following video: