Articles

Hazy Research from Stanford wrote an article on ThunderKittens, an embedded DSL for GPUs. The post focuses on how ThunderKittens applies to H100 GPUs: it discusses the tensor operations used in machine learning workloads and explains how ThunderKittens adds value on top of existing solutions like Triton.

Leveraging New H100 Tensor Core Instructions

Warp Group Matrix Multiply-Accumulate (WGMMA) Instructions

One of the primary focuses of the article is on the new "warp group matrix multiply-accumulate" (WGMMA) instructions introduced in the H100 architecture. These instructions, referred to as wgmma.mma_async in PTX assembly, are crucial for extracting the full compute potential of the H100 tensor cores.

Without WGMMA instructions, the GPU seems to top out at around 63% of its peak utilization.

The tensor cores require a deep hardware pipeline to keep them fed, even from local resources like shared memory.

Utilizing WGMMA instructions is necessary to achieve the remaining 37% of potential performance on the H100.

These WGMMA instructions matter because they are needed to fully utilize the H100's tensor cores, which are designed for the efficient matrix operations that machine learning workloads depend on.

Optimizing Shared Memory Usage

Shared Memory Latency Challenges

The article discusses in depth the often-overlooked latency of shared memory, which is typically touted as a fast on-chip memory resource.

The single-access latency of shared memory is around 30 cycles on the H100.

During this latency period, the tensor cores could have performed almost two full 32x32 square matrix multiplies.

Shared memory latency can significantly impact performance, especially for tensor operations that rely heavily on shared memory.
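As a rough sanity check on the "almost two 32x32 matrix multiplies" figure, the sketch below counts how many 32x32x32 products fit into one 30-cycle shared memory access. The throughput of 2048 dense 16-bit multiply-accumulates per cycle per SM is an assumption based on NVIDIA's published peak numbers, not a figure from the article.

```python
# Back-of-envelope check. MACS_PER_CYCLE_PER_SM is an assumed peak figure,
# not a number taken from the ThunderKittens article.
MACS_PER_CYCLE_PER_SM = 2048   # assumed dense FP16/BF16 peak for one H100 SM
SHARED_MEMORY_LATENCY = 30     # cycles, as quoted above
TILE = 32

# Multiply-accumulates needed for one 32x32 by 32x32 matrix product.
macs_per_matmul = TILE * TILE * TILE

# Work the tensor cores could have done while one access was in flight.
macs_during_latency = MACS_PER_CYCLE_PER_SM * SHARED_MEMORY_LATENCY
matmuls_during_latency = macs_during_latency / macs_per_matmul

print(f"{matmuls_during_latency:.2f} matmuls per shared memory access")
```

Under that assumption the result lands just under two, which is consistent with the claim above.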

To address the shared memory latency issue, NVIDIA introduced the Tensor Memory Accelerator (TMA) in the H100 architecture.

TMA allows specifying a multi-dimensional tensor layout in global and shared memory.

It can asynchronously fetch a subtile of the tensor, eliminating the need for address generation.

By overlapping TMA fetches with compute operations, the shared memory latency can be effectively hidden.

TMA is described as "completely indispensable" for achieving the full potential of the H100, potentially even more so than WGMMA instructions.
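The benefit of overlapping fetches with compute can be sketched with a simple timing model. This is not TMA code; it only compares a loop that fetches and then computes each tile against a double-buffered loop where the next fetch runs during the current compute. The function names and cycle counts are made up for illustration.

```python
def serial_cycles(num_tiles, fetch, compute):
    """Each tile is fetched and then computed on, with no overlap."""
    return num_tiles * (fetch + compute)


def pipelined_cycles(num_tiles, fetch, compute):
    """Fetch of tile i+1 overlaps compute on tile i (double buffering)."""
    if num_tiles == 0:
        return 0
    # The first fetch cannot be hidden. After that, each step costs as much
    # as the slower of the two stages, and the last tile still needs compute.
    return fetch + (num_tiles - 1) * max(fetch, compute) + compute


# Hypothetical numbers: 8 tiles, 30-cycle fetch, 40-cycle compute.
serial = serial_cycles(8, fetch=30, compute=40)
pipelined = pipelined_cycles(8, fetch=30, compute=40)
print(serial, pipelined)
```

When compute is the slower stage, the fetch latency disappears from every step except the first, which is the sense in which the latency is "hidden".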

Occupancy and Asynchronous Execution

Asynchronous Features and Occupancy

The article also covers the role of occupancy (the number of concurrent thread blocks executing on a Streaming Multiprocessor) and how the H100's asynchronous features affect GPU performance.

The H100 is less reliant on occupancy than previous GPU generations.

Asynchronous features allow a single instruction stream to keep multiple hardware units busy simultaneously (fetching memory, running matrix multiplies, shared memory reductions, and register operations).

This asynchronous execution model reduces the need for high occupancy to achieve full utilization.

Embedded Domain-Specific Language (DSL) for CUDA

Motivated by the proliferation of new architectures and the complexity of optimizing for them (e.g., Flash Attention being 1200 lines of code), the researchers designed a domain-specific language (DSL) embedded within CUDA for their internal use. It is called ThunderKittens, it has the best logo, and it offers:

Automatic generation of WGMMA instructions

Automatic TMA fetches and synchronization

Automatic shared memory tiling and double buffering

Automatic register tiling and software pipelining

The direction is very interesting: instead of relying on a high-level language like Triton, the article goes down to the granularity of individual instructions to squeeze the best performance out of the H100.

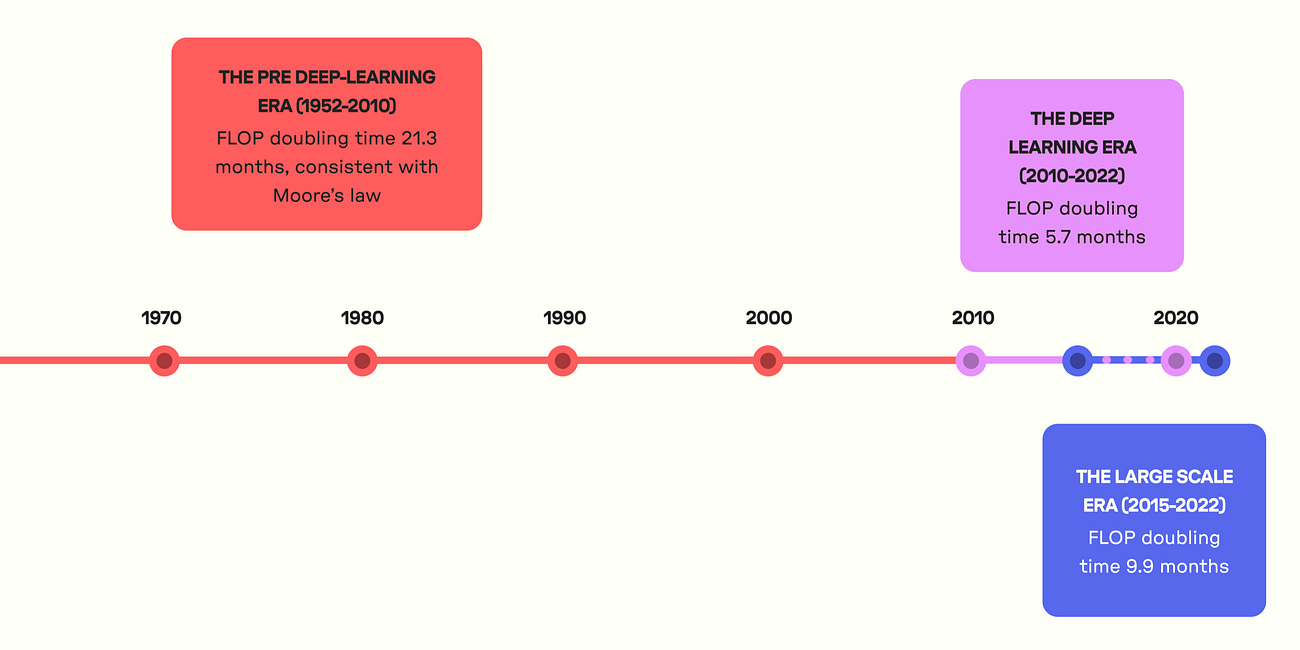

As mentioned before in "Compute and the role it plays in AI", AI will require a ton of compute, so it is important to use GPUs efficiently. Better techniques for lowering models onto existing hardware unlock the full capability of that hardware, and ThunderKittens could be the DSL that fills this role for computationally intensive use cases.

The ThunderKittens framework is built around a tile-oriented programming model, where data is manipulated in tiles no smaller than 16x16 values. This approach is designed to enable efficient tensor operations and leverage the H100's hardware capabilities effectively.
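To make the tile-oriented idea concrete, here is a plain Python sketch of a matrix multiply that walks over 16x16 tiles. It is only an illustration of the access pattern; ThunderKittens itself is CUDA C++ and maps tiles onto registers and shared memory, which this sketch does not model. The function name is hypothetical.

```python
TILE = 16  # smallest tile edge, matching the 16x16 minimum described above


def tiled_matmul(a, b):
    """Multiply square matrices (lists of lists) one TILE x TILE block at a time."""
    n = len(a)
    assert n % TILE == 0, "matrix size must be a multiple of the tile size"
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, TILE):          # tile row of the output
        for j0 in range(0, n, TILE):      # tile column of the output
            for k0 in range(0, n, TILE):  # tiles along the reduction dimension
                # Accumulate the product of one A tile and one B tile
                # into the matching C tile.
                for i in range(i0, i0 + TILE):
                    for j in range(j0, j0 + TILE):
                        acc = c[i][j]
                        for k in range(k0, k0 + TILE):
                            acc += a[i][k] * b[k][j]
                        c[i][j] = acc
    return c


n = 32
identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
b = [[float(i * n + j) for j in range(n)] for i in range(n)]
result = tiled_matmul(identity, b)
```

Multiplying by the identity should return `b` unchanged, which makes for an easy correctness check.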

Tile Primitives and Function Signatures

ThunderKittens provides a consistent function signature for operating on tiles. For example, if we have three 32x64 floating-point register tiles a, b, and c, we can element-wise multiply a and b and store the result in c with the following call:

kittens::mul(c, a, b);

Similarly, if we want to store the result into a shared tile s, we can write:

kittens::store(s, c);

Typing and Static Checks

ThunderKittens incorporates static type checking for tile layouts and operations to prevent silent failures and undefined behavior. The framework aims to ensure that the layouts of objects are compatible before allowing operations to be performed. This is particularly important for operations like matrix multiplies, where the operand layouts (e.g., column or row layout) can impact the correctness of the operation.
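The idea of refusing incompatible layouts can be sketched in Python as follows. ThunderKittens performs its checks statically, at compile time; this sketch only checks at call time, so it is an analogy rather than the real mechanism. The `Tile` class, the `check_mma_AB` helper, and the convention that A is row-layout and B is column-layout are all assumptions made for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tile:
    rows: int
    cols: int
    layout: str  # "row" or "col"; hypothetical labels for this sketch


def check_mma_AB(a: Tile, b: Tile) -> Tile:
    """Validate operands for C = A @ B and return the output tile descriptor."""
    # Assumed convention for this sketch: A is row-layout, B is column-layout.
    if a.layout != "row" or b.layout != "col":
        raise TypeError(f"layout mismatch: got A={a.layout}, B={b.layout}")
    if a.cols != b.rows:
        raise TypeError(f"shape mismatch: A is {a.rows}x{a.cols}, B is {b.rows}x{b.cols}")
    return Tile(a.rows, b.cols, "row")


out = check_mma_AB(Tile(32, 64, "row"), Tile(64, 32, "col"))
print(out)
```

The point is that a wrong layout fails loudly before any arithmetic happens, instead of silently producing a wrong result.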

Scopes and Collaborative Groups

By default, ThunderKittens operations exist at the warp level, meaning each function expects to be called by a single warp, and that single warp will perform the work of the function. However, some operations, particularly those involving WGMMA instructions, require collaborative groups of warps. These collaborative group operations are available through the templated kittens::group<collaborative size> namespace. For example, WGMMA instructions are accessible through kittens::group<4>::mma_AB (or kittens::warpgroup::mma_AB, which is an alias). Groups of warps can also collaboratively load shared memory or perform reductions in shared memory.

Restrictions and Special Operations

While most operations in ThunderKittens are purely functional, some operations have special restrictions. For example, a register tile transpose operation that needs separable arguments (i.e., the source and destination cannot be the same underlying registers) is named transpose_sep to warn users about this restriction.

Code is available on GitHub.

Modeling Dimension

Transformer-based Encoder-Decoder Architecture: At its core, Gemini 1.5 leverages a standard Transformer-based encoder-decoder architecture. This architecture is prevalent in many state-of-the-art LLMs. The encoder processes the input data, extracting semantic representations, while the decoder generates the output based on the encoded information.

Modality-Agnostic Representation Learning: A crucial aspect lies in the model's ability to represent information from diverse modalities (text, code, audio, video) in a unified manner. This is achieved through modality-specific encoders that convert each modality into a common embedding space. These embeddings capture the core meaning of the data, regardless of its format.

Hierarchical Transformers for Long Context Modeling: To handle the massive context window of millions of tokens, Gemini 1.5 employs hierarchical transformers. These transformers process the input data at multiple granularities, capturing both local and global relationships within the information. The model first breaks down the input into smaller segments, analyzes them individually using standard transformers, and then utilizes higher-level transformers to combine the understanding of these segments into a cohesive representation.

Masked Multimodal Self-Attention: One of the key breakthroughs lies in the implementation of masked multimodal self-attention. This mechanism allows the model to selectively focus on relevant parts of the input across modalities while attending to their relationships. It essentially masks out certain portions of the input data during training, forcing the model to learn to predict the masked information based on the context of the surrounding elements from all modalities. This process refines the model's ability to identify crucial information and make connections across different data types.

Encoder-Decoder Attention: The encoder-decoder attention mechanism facilitates communication between the encoder and decoder. The decoder attends to specific parts of the encoded representation to generate the output, ensuring the response aligns with the relevant context within the massive input data.

Data Dimension

Large Context Window: A defining characteristic of Gemini 1.5 is its ability to access and process information from millions of tokens of text, code, video, and audio. This vast context window empowers the model to reason over extensive amounts of data, enabling it to tackle tasks that necessitate a deep understanding of complex topics.

Multilingual Data: The model is trained on massive datasets of multilingual text and code. This allows it to not only understand and respond in different languages but also leverage its knowledge of similar languages to learn new languages with minimal data, a capability known as few-shot learning.

Multimodal Data: Beyond text and code, Gemini 1.5 incorporates audio and video data into its training process. This multimodal learning equips the model to process and reason over information from various sources, providing a more comprehensive understanding of the world.

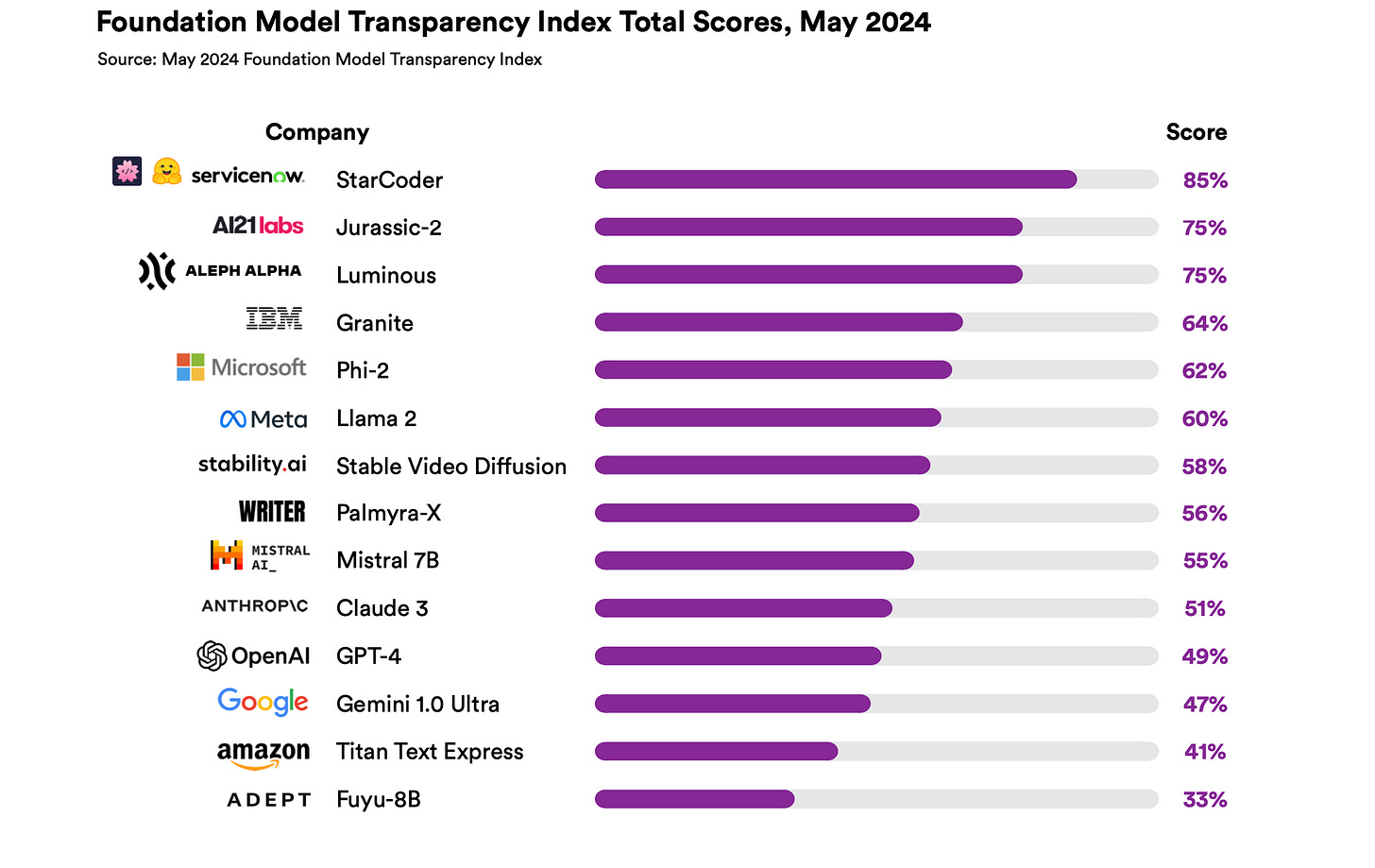

Stanford published their updated Foundation Model Transparency Index (FMTI) in May. As background:

FMTI v1.0 was launched in October 2023, defining 100 transparency indicators across upstream (data, labor, compute), model (capabilities, risks, evaluations), and downstream (distribution, usage policies) aspects of foundation models.

It scored 10 major foundation model developers, including OpenAI, Google, and Meta, to establish the status quo of transparency practices.

They have multiple new announcements:

CRFM announced an advisory board for FMTI v1.1 comprising experts like Arvind Narayanan, Danielle Allen, and Rumman Chowdhury.

FMTI aims to concretize transparency by defining specific indicators that model developers could disclose to benefit various stakeholders.

It proactively scores developers to identify opaque areas (e.g. labor practices) and dimensions with more disclosure.

FMTI v1.0 received extensive media coverage, raising awareness about the need for transparency in foundation models.

It influenced policies and initiatives focused on AI transparency, like the proposed US AI Foundation Model Transparency Act and efforts by the UK AI Safety Institute.

They also have some policy updates:

For v1.1, CRFM is revising the scoring methodology based on feedback from developers, researchers, and the advisory board.

Changes include clarifying definitions, updating evidence requirements, and refining scoring criteria for better accuracy and consistency.

They are also expanding the set of models evaluated to provide a more comprehensive industry overview.

Automated benchmarks evaluate model performance on specific tasks or capabilities by providing input samples and comparing model outputs against reference outputs. Key aspects include:

Task Evaluation: Testing performance on well-defined tasks like spam classification by creating datasets with examples and measuring accuracy.

Capability Evaluation: Assessing broader capabilities like math skills using datasets of relevant problems as proxies.

Metrics: Quantitative metrics like accuracy scores allow comparing model performance.

Limitations: Lack of generality beyond tested tasks, potential for test set contamination from training data, difficulty defining and evaluating broad capabilities.

While useful for targeted evaluations, automated benchmarks struggle with open-ended tasks, risk contamination when made public, and may fail to capture general capabilities.
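A task evaluation like the spam example boils down to comparing predictions against reference labels. The sketch below uses a made-up four-message dataset and a keyword rule standing in for a real model; both are placeholders for illustration only.

```python
def accuracy(predictions, references):
    """Fraction of predictions that exactly match the reference labels."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


def toy_spam_model(text):
    """Stand-in for a real model: flags messages containing trigger words."""
    lowered = text.lower()
    return "spam" if "free" in lowered or "winner" in lowered else "ham"


# Made-up evaluation set of (input, reference label) pairs.
dataset = [
    ("Claim your free prize now", "spam"),
    ("Meeting moved to 3pm", "ham"),
    ("You are a winner", "spam"),
    ("Free for lunch tomorrow?", "ham"),
]

predictions = [toy_spam_model(text) for text, _ in dataset]
references = [label for _, label in dataset]
score = accuracy(predictions, references)
print(score)
```

The last message is misclassified by the keyword rule, which is exactly the kind of failure a single accuracy number summarizes but does not explain.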

The benchmark notes above come from an article Clémentine Fourrier wrote for Hugging Face on how to evaluate LLMs comprehensively. Besides automated benchmarks, it covers two further approaches, human evaluation and model evaluation, along with the use cases for evaluation and some of the challenges of evaluating LLMs.

Human Evaluation

Human evaluation involves people prompting models, then rating or ranking the outputs based on guidelines. It generally has the following categories to think about:

Open-Ended Tasks: Enables evaluation of complex, open-ended capabilities beyond pre-defined tasks. This is arguably one of the areas where LLMs best demonstrate general understanding and knowledge of the real world, and open-ended tasks should ideally give some insight into that dimension.

Human Alignment: Ratings correlate with human preferences for outputs.

Subjectivity: Individual biases and preferences can impact ratings.

Reproducibility: Small-scale evaluations lack reproducibility across raters.

Cost: Paying human raters is expensive, especially for continuous evaluation.

Human evaluation suffers from subjectivity, lack of reproducibility, and cost challenges. Human evaluators can also introduce biases of their own, such as rating a confident but wrong answer highly.
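One common way to quantify how well two raters agree is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The article does not prescribe this metric; it is used here as one illustration of the reproducibility concern, and the ratings are made up.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Chance-corrected agreement between two raters over the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)
    # Observed agreement: fraction of items where both raters chose the same label.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Expected agreement if each rater labeled at random with their own label frequencies.
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up ratings of six model outputs by two human raters.
rater_a = ["good", "good", "bad", "bad", "good", "bad"]
rater_b = ["good", "bad", "bad", "bad", "good", "good"]
kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 3))
```

Here the raters agree on four of six items, but half of that agreement is expected by chance, so kappa comes out far lower than the raw agreement rate suggests.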

Model Evaluation

Generalist Models: High-capability models can provide ratings correlated with human preferences.

Specialized Judges: Small models trained on human preference data to discriminate outputs.

Biases: Models likely have subtle biases when evaluating outputs, favoring their own style.

Consistency: Models may struggle with consistent score ranges across evaluations.

While avoiding human subjectivity, model evaluation risks compounding biases when using LLMs to evaluate LLMs. Specialized judge models could mitigate this.

Some of the use cases for LLM evaluation are the following:

Non-Regression Testing: Ensuring training approaches are sound by checking performance trajectories and expected ranges, not absolute scores. This verifies changes haven't "broken" expected model behavior.

Model Ranking: Identifying the best models by looking at their relative rankings across evaluations, as rankings tend to be more robust than unstable scores.

Capability Assessment: Using evaluations as proxies for assessing broad capabilities like "good at math", though with no guarantees of generality.

Evaluations serve different roles - confirming training integrity, enabling model comparisons, and roughly approximating capabilities - rather than providing definitive capability scores.
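The point about rankings being more robust than raw scores can be illustrated with a small comparison. The model names and scores below are made up: the same three models are scored under two prompt formats, and the sketch checks how much the scores move versus whether the ordering changes.

```python
def ranking(scores):
    """Model names ordered from best to worst score."""
    return sorted(scores, key=scores.get, reverse=True)


# Made-up scores for the same models under two different prompt formats.
run_a = {"model_x": 0.71, "model_y": 0.64, "model_z": 0.58}
run_b = {"model_x": 0.63, "model_y": 0.57, "model_z": 0.49}

# Largest change in absolute score for any single model.
max_shift = max(abs(run_a[m] - run_b[m]) for m in run_a)

# Whether the relative ordering survived the change in prompt format.
same_order = ranking(run_a) == ranking(run_b)

print(f"largest score shift: {max_shift:.2f}, ranking unchanged: {same_order}")
```

In this toy case every score drops by several points, yet the ordering is identical, which is why comparing ranks is often a safer read than comparing absolute numbers across setups.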

Challenges and Considerations

Several overarching challenges and considerations arise in LLM evaluation:

Defining Capabilities: It is extremely difficult to rigorously define and evaluate general capabilities like reasoning or theory of mind.

Generality vs Specialization: Broad, open-ended evaluations lack clear generality, while narrow task evaluations miss general capabilities.

Interdisciplinary Approaches: Insights from fields like interpretability and social sciences could inform new evaluation frameworks.

Ranking Stability: Rankings may provide more robust signals than unstable scores highly sensitive to variations.

Compounding Biases: Using LLMs to evaluate LLMs risks subtly amplifying biases over time in unpredictable ways.

As LLM capabilities grow, the field faces the challenges of defining meaningful capabilities, balancing general and specialized evaluations, and developing robust, unbiased evaluation frameworks that draw from multiple disciplines.

Libraries

marimo is a reactive Python notebook: run a cell or interact with a UI element, and marimo automatically runs dependent cells (or marks them as stale), keeping code and outputs consistent. marimo notebooks are stored as pure Python, executable as scripts, and deployable as apps.

Highlights.

reactive: run a cell, and marimo automatically runs all dependent cells

interactive: bind sliders, tables, plots, and more to Python — no callbacks required

reproducible: no hidden state, deterministic execution

executable: execute as a Python script, parametrized by CLI args

shareable: deploy as an interactive web app, or run in the browser via WASM

git-friendly: stored as .py files

More examples and use cases are on their project page.
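The reactive behavior described above can be sketched with a toy dependency tracker. This is not marimo's implementation or API; the `ToyNotebook` class is hypothetical and only shows the idea that re-running one cell triggers every cell that reads what it defines. It does not handle cycles, and a cell reachable through several paths would run more than once.

```python
class ToyNotebook:
    """Toy reactive runtime: each cell declares what it reads and defines."""

    def __init__(self):
        self.cells = {}   # cell name -> (reads, defines, function)
        self.values = {}  # variable name -> current value
        self.log = []     # order in which cells ran

    def add_cell(self, name, reads, defines, fn):
        self.cells[name] = (reads, defines, fn)

    def run(self, name):
        reads, defines, fn = self.cells[name]
        self.values[defines] = fn(*(self.values[r] for r in reads))
        self.log.append(name)
        # Re-run every cell that reads the variable this cell just defined.
        for other, (other_reads, _, _) in self.cells.items():
            if defines in other_reads:
                self.run(other)


nb = ToyNotebook()
nb.add_cell("slider", [], "x", lambda: 3)
nb.add_cell("square", ["x"], "y", lambda x: x * x)
nb.add_cell("report", ["y"], "msg", lambda y: f"y = {y}")

nb.run("slider")                  # runs slider, then square, then report
first_msg = nb.values["msg"]

nb.add_cell("slider", [], "x", lambda: 5)  # simulate moving the slider
nb.run("slider")
second_msg = nb.values["msg"]
print(first_msg, "->", second_msg)
```

Changing only the first cell is enough to refresh everything downstream, which is the consistency property the highlights describe.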

seqax is a codebase for small-to-medium-scale LLM pretraining research. The entire training program (including the model implementation, the optimizer, and multihost FSDP and tensor parallel partitioning) is 500 lines of code, which scales well up to ~100 GPUs or TPUs and typically achieves good MFUs of 30-50%.

seqax is written in a style that makes the important information visible, rather than being hidden behind abstractions and indirections or being inferred automatically and unpredictably. This shows up in:

Math. seqax implements all of the training step's math, rather than calling into external libraries. If you want to understand or change the math, it's right there!

Memory. All tensors that go into a model checkpoint on disk are explicit. All tensors that occupy a lot of memory, including activations saved for the backwards pass, are explicit. You can straightforwardly read the memory footprint from the source code.

Partitioning and communication. The partitioned layout of all tensors and operations is explicit. All interchip communication is explicit.
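For readers unfamiliar with the MFU (model FLOPs utilization) figure quoted for seqax, here is a minimal sketch of how such a number is usually estimated. It uses the common approximation of 6 FLOPs per parameter per training token for dense transformers, which is an assumption of this sketch and not something taken from seqax. The model size, throughput, and chip count are made up; 312 TFLOP/s is the published dense BF16 peak of an NVIDIA A100.

```python
def mfu(num_params, tokens_per_second, num_chips, peak_flops_per_chip):
    """Model FLOPs utilization: useful training FLOPs over hardware peak."""
    # Approximation: forward plus backward pass costs about 6 FLOPs per
    # parameter per token (attention FLOPs are ignored).
    model_flops_per_second = 6 * num_params * tokens_per_second
    return model_flops_per_second / (num_chips * peak_flops_per_chip)


# Hypothetical run: 1B-parameter model on 8 chips at 166,400 tokens/s.
utilization = mfu(
    num_params=1_000_000_000,
    tokens_per_second=166_400,
    num_chips=8,
    peak_flops_per_chip=312e12,
)
print(f"MFU: {utilization:.0%}")
```

With these made-up numbers the run would sit in the middle of the 30-50% range mentioned above.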

Large language models (LLMs) have demonstrated impressive capabilities in various natural language processing tasks. Despite this, their application to information retrieval (IR) tasks is still challenging due to the infrequent occurrence of many IR-specific concepts in natural language. While prompt-based methods can provide task descriptions to LLMs, they often fall short in facilitating a comprehensive understanding and execution of IR tasks, thereby limiting LLMs' applicability. To address this gap, the INTERS authors explore the potential of instruction tuning to enhance LLMs' proficiency in IR tasks. They introduce a novel instruction tuning dataset, INTERS, encompassing 20 tasks across three fundamental IR categories: query understanding, document understanding, and query-document relationship understanding. The data are derived from 43 distinct datasets with manually written templates. Their empirical results reveal that INTERS significantly boosts the performance of various publicly available LLMs, such as LLaMA, Mistral, and Phi, in IR tasks. Furthermore, they conduct extensive experiments to analyze the effects of instruction design, template diversity, few-shot demonstrations, and the volume of instructions on performance.
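To give a feel for what "manually written templates" means in practice, the sketch below turns one raw IR record into an instruction-tuning example. The template text, field names, and record are invented for illustration and are not taken from the INTERS dataset.

```python
# Hypothetical template for a query-document relationship task.
RELEVANCE_TEMPLATE = (
    "Query: {query}\n"
    "Document: {document}\n"
    "Is the document relevant to the query? Answer yes or no."
)


def build_example(task, template, fields, answer):
    """Fill a hand-written template with one record's fields."""
    return {
        "task": task,
        "instruction": template.format(**fields),
        "output": answer,
    }


# Made-up record standing in for one row of a source dataset.
example = build_example(
    task="query_document_relevance",
    template=RELEVANCE_TEMPLATE,
    fields={
        "query": "how to hide shared memory latency",
        "document": "Overlapping asynchronous fetches with compute hides latency.",
    },
    answer="yes",
)
print(example["instruction"])
```

Writing several differently worded templates per task is what the abstract refers to as template diversity.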

Things I Enjoyed (TIE)

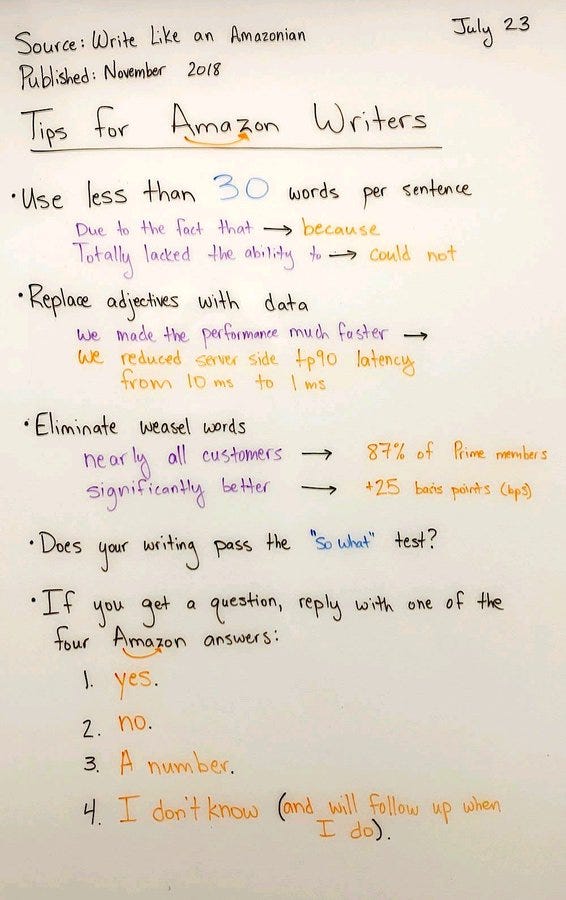

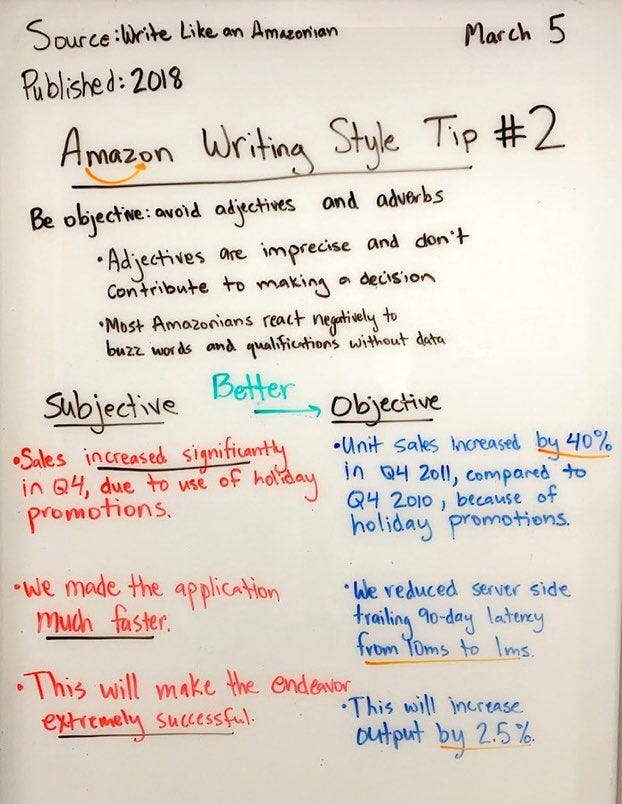

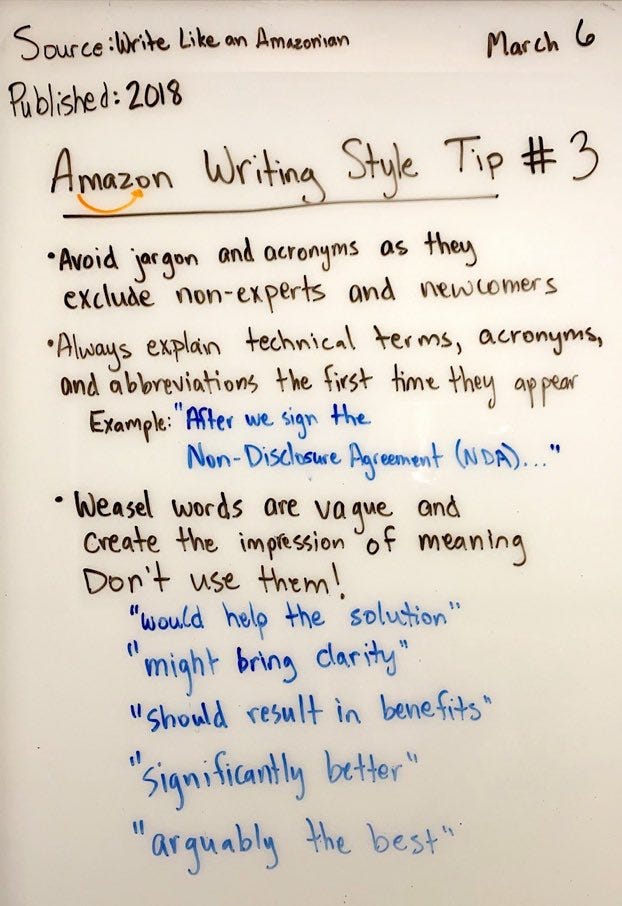

Timeless tips for writing well (concise and clear) and more quantitatively: