Scaling and Reliability Challenges of Llama 3

Model Explorer from Google

Articles

The Llama 3 paper from Meta is a long one (a mere 92 pages), but it covers a wide variety of topics related to training large models.

The paper offers a number of lessons on the reliability and scalability challenges of training these models, starting with the interruption breakdown in Table 5:

Reliability and Scalability Challenges in Training Llama 3

The development and training of Llama 3, particularly the 405B parameter model, presented significant reliability and scalability challenges. Training at such a massive scale pushed the boundaries of existing infrastructure and required innovative solutions to ensure efficient and stable training.

One of the most significant challenges was dealing with hardware failures and interruptions during the extended training process. As detailed in Table 5 of the paper, over a 54-day snapshot period of pre-training there were 466 job interruptions, 419 of which were unexpected. Approximately 78% of these unexpected interruptions were attributed to confirmed or suspected hardware issues. The most common root causes of unexpected interruptions were:

Faulty GPUs (30.1%)

GPU HBM3 Memory issues (17.2%)

Software bugs (12.9%)

Network switch/cable problems (8.4%)

Unplanned host maintenance (7.6%)
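The Table 5 figures above translate into a concrete failure rate. The short sketch below only restates the paper's numbers; it assumes interruptions were spread evenly over the 54 days, which real failures are not.

```python
# Figures quoted from Table 5 of the Llama 3 paper (54-day pre-training snapshot).
SNAPSHOT_DAYS = 54
TOTAL_INTERRUPTIONS = 466
UNEXPECTED_INTERRUPTIONS = 419
HARDWARE_SHARE = 0.78  # approximate share of unexpected interruptions tied to hardware

snapshot_hours = SNAPSHOT_DAYS * 24
planned = TOTAL_INTERRUPTIONS - UNEXPECTED_INTERRUPTIONS
unexpected_per_day = UNEXPECTED_INTERRUPTIONS / SNAPSHOT_DAYS
# Mean time between unexpected interruptions, assuming an even spread over the snapshot.
mean_hours_between = snapshot_hours / UNEXPECTED_INTERRUPTIONS
hardware_related = round(UNEXPECTED_INTERRUPTIONS * HARDWARE_SHARE)

print(f"planned interruptions:          {planned}")
print(f"unexpected interruptions / day: {unexpected_per_day:.1f}")
print(f"hours between interruptions:    {mean_hours_between:.1f}")
print(f"hardware-related (approx.):     {hardware_related}")
```

This works out to 47 planned interruptions and roughly one unexpected interruption every three hours, so fast recovery matters as much as raw hardware reliability.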

These frequent interruptions had several impacts on the training process:

Reduced effective training time: The team managed to achieve over 90% effective training time. However, even the remaining loss of under 10% represents a significant amount of compute at this scale.

Increased complexity in training management: The team had to develop robust checkpointing and recovery mechanisms to handle frequent interruptions without losing progress.

Potential impact on model quality: Frequent restarts could affect the stability of the training process and the final model quality, requiring careful monitoring and possible adjustments to the training recipe.
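One standard way to reason about how often to checkpoint under such a failure rate is Young's approximation, T ≈ sqrt(2 · C · M), where C is the time to write a checkpoint and M is the mean time between failures. The paper does not say the team used this formula; the sketch is only an illustration, and the 2-minute checkpoint cost is a hypothetical value.

```python
import math


def young_checkpoint_interval(checkpoint_cost_h: float, mtbf_h: float) -> float:
    """Young's first-order approximation of the optimal checkpoint interval, in hours."""
    if checkpoint_cost_h <= 0 or mtbf_h <= 0:
        raise ValueError("checkpoint cost and MTBF must be positive")
    return math.sqrt(2.0 * checkpoint_cost_h * mtbf_h)


# MTBF derived from Table 5: 54 days / 419 unexpected interruptions.
mtbf_hours = 54 * 24 / 419
# Hypothetical: assume writing one checkpoint stalls training for 2 minutes.
checkpoint_cost_hours = 2 / 60

interval_h = young_checkpoint_interval(checkpoint_cost_hours, mtbf_hours)
print(f"checkpoint roughly every {interval_h * 60:.0f} minutes")
```

Cheaper checkpoints (for example, asynchronous writes) shrink C and therefore allow more frequent checkpoints with less work lost per failure.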

Scaling Communication Challenges

Training Llama 3 405B required scaling to 16K H100 GPUs, which introduced significant communication challenges:

Network congestion: With thousands of GPUs needing to synchronize frequently, network congestion became a major bottleneck. The team had to carefully optimize their communication patterns and leverage technologies like NCCL and NCCLX to mitigate this issue.

Stragglers: Even a single slow GPU or node could dramatically slow down the entire training process due to the synchronous nature of training. The team developed tools to identify and mitigate straggler effects, which was crucial for maintaining training efficiency.

Collective communication scaling: Operations like all-reduce became increasingly expensive as the number of GPUs grew. The team had to carefully tune their algorithms and leverage hierarchical communication patterns to maintain efficiency.
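A common back-of-the-envelope model for the last point is the alpha-beta cost of a ring all-reduce: 2(N-1) steps, each paying a fixed latency and moving S/N bytes. The sketch uses hypothetical latency, bandwidth, and message-size values (not figures from the paper) to show why the latency term comes to dominate at tens of thousands of GPUs.

```python
def ring_allreduce_time(
    n_gpus: int,
    message_bytes: float,
    latency_s: float,
    bandwidth_bytes_per_s: float,
) -> float:
    """Alpha-beta estimate (seconds) for a ring all-reduce of `message_bytes` per GPU.

    A ring all-reduce is a reduce-scatter followed by an all-gather:
    2 * (n - 1) steps, each paying `latency_s` and sending message_bytes / n bytes.
    """
    if n_gpus < 2:
        return 0.0
    steps = 2 * (n_gpus - 1)
    latency_term = steps * latency_s
    bandwidth_term = steps * (message_bytes / n_gpus) / bandwidth_bytes_per_s
    return latency_term + bandwidth_term


# Hypothetical values: 1 GiB of gradients, 5 us per step, 50 GB/s per link.
MESSAGE = 2**30
ALPHA = 5e-6
BANDWIDTH = 50e9

for n in (8, 1024, 16_384):
    latency_part = 2 * (n - 1) * ALPHA
    total = ring_allreduce_time(n, MESSAGE, ALPHA, BANDWIDTH)
    print(f"{n:>6} GPUs: {total * 1e3:7.1f} ms total, {latency_part * 1e3:6.1f} ms of it latency")
```

The bandwidth term stays near 2·S/B regardless of N, while the latency term grows linearly with N, which is why large clusters lean on hierarchical (intra-node, then inter-node) collectives.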

Power Management Challenges

The massive scale of the Llama 3 training operation introduced unique power management challenges:

Power fluctuations: The paper notes that tens of thousands of GPUs increasing or decreasing power consumption simultaneously (e.g., during checkpointing or job startup/shutdown) could result in instant fluctuations of power consumption across the data center on the order of tens of megawatts. This pushed the limits of the power grid and required careful coordination with data center operations.

Thermal management: The team observed diurnal variations in training throughput of 1-2% based on time-of-day, attributed to higher mid-day temperatures impacting GPU dynamic voltage and frequency scaling. This highlights the importance of environmental factors in large-scale training operations.
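To get a feel for the magnitudes involved, here is a rough estimate of the GPU-only power swing when a whole job moves between idle and full load at once. The per-GPU wattages are assumptions (700 W is the H100 SXM power limit; the idle figure is a placeholder), and host, network, and cooling power are ignored, so the real swing is larger.

```python
NUM_GPUS = 16_384      # scale of Llama 3 405B training
GPU_PEAK_W = 700.0     # assumed: H100 SXM board power limit
GPU_IDLE_W = 100.0     # assumed placeholder idle draw; varies by system


def power_swing_mw(num_gpus: int, peak_w: float, idle_w: float) -> float:
    """Change in GPU power draw (megawatts) if every GPU switches state together."""
    return num_gpus * (peak_w - idle_w) / 1e6


swing = power_swing_mw(NUM_GPUS, GPU_PEAK_W, GPU_IDLE_W)
print(f"GPU-only swing: ~{swing:.1f} MW")
```

Even this GPU-only estimate lands near 10 MW, which makes the paper's "tens of megawatts" for tens of thousands of GPUs plus their hosts plausible.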

Software and Algorithmic Challenges

Scaling to such a large model size and number of GPUs also introduced software and algorithmic challenges:

Memory management: Efficiently managing the massive memory requirements of the model across thousands of GPUs required careful optimization of memory allocation and data movement strategies.

Load balancing: Ensuring even distribution of work across all GPUs was crucial for maintaining high efficiency. This required sophisticated partitioning strategies for both the model and the training data.

Numerical stability: At such large scales, ensuring numerical stability of the training process became more challenging, potentially requiring adjustments to the optimization algorithm or learning rate schedules.
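A quick estimate shows why memory had to be sharded across many GPUs. The sketch uses the common mixed-precision Adam accounting popularized by the ZeRO paper (16 bytes per parameter); Llama 3's actual precision choices may differ, and activation memory is ignored entirely.

```python
import math

PARAMS = 405e9             # Llama 3 405B
H100_MEMORY_BYTES = 80e9   # 80 GB HBM per H100

# Mixed-precision Adam accounting (ZeRO-style), in bytes per parameter.
BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}

bytes_per_param = sum(BYTES_PER_PARAM.values())
weights_only_gb = PARAMS * 2 / 1e9
train_state_tb = PARAMS * bytes_per_param / 1e12
# Lower bound on GPUs needed just to hold model and optimizer state (no activations).
min_gpus = math.ceil(PARAMS * bytes_per_param / H100_MEMORY_BYTES)

print(f"bf16 weights alone: {weights_only_gb:.0f} GB")
print(f"training state:     {train_state_tb:.2f} TB")
print(f"minimum H100s:      {min_gpus}")
```

Under these assumptions the weights alone exceed the memory of ten H100s, and the full training state needs at least 81 of them before a single activation is stored.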

Impact on Training Process and Model Development

These reliability and scalability challenges had several impacts on the overall training process and model development:

Extended development time: Addressing these challenges likely extended the overall development timeline for Llama 3, requiring significant engineering effort alongside the core machine learning research.

Iterative infrastructure improvements: The team had to continuously improve their training infrastructure, developing new tools and optimizations to address emerging challenges as they scaled up.

Robust training recipes: The frequent interruptions and scaling challenges necessitated the development of highly robust training recipes that could maintain stability and performance despite these issues.

Trade-offs in model design: Some architectural choices, such as opting for a standard dense Transformer over more complex architectures like mixture-of-experts, were influenced by the need to maximize training stability at scale.

Emphasis on efficiency: The scaling challenges led to a strong focus on efficiency throughout, for example adopting PagedAttention to make rejection sampling during post-training more efficient.

Beyond infrastructure, the paper covers several other key dimensions of model development that the team improved upon:

Data Quality and Diversity:

The quality and diversity of training data is crucial for model performance. Llama 3 used 15T multilingual tokens for pre-training, a significant increase from Llama 2's 1.8T tokens.

Careful pre-processing, curation, and quality assurance of pre-training and post-training data is essential.

Developing domain-specific pipelines for extracting high-quality code and math-relevant web pages can improve performance in those areas.

For multilingual capabilities, language-specific heuristics and filters are needed to remove low-quality documents.

The data mix composition significantly impacts model performance. Llama 3 used roughly 50% general knowledge, 25% mathematical/reasoning, 17% code, and 8% multilingual tokens.
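Applying that mix to the roughly 15T-token corpus gives a sense of scale per category. This is a simplification: it treats the mix as uniform over all of pre-training, whereas the mix was also adjusted during training.

```python
TOTAL_TOKENS = 15e12  # "about 15T" pre-training tokens

# Approximate data mix reported for Llama 3.
DATA_MIX = {
    "general knowledge": 0.50,
    "math & reasoning": 0.25,
    "code": 0.17,
    "multilingual": 0.08,
}


def token_budget(mix: dict[str, float], total_tokens: float) -> dict[str, float]:
    """Split a token budget according to mixture fractions (which must sum to 1)."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mixture fractions must sum to 1")
    return {name: frac * total_tokens for name, frac in mix.items()}


budget = token_budget(DATA_MIX, TOTAL_TOKENS)
for name, tokens in budget.items():
    print(f"{name:>17}: ~{tokens / 1e12:.2f}T tokens")
```

Even the smallest slice, multilingual data, amounts to over a trillion tokens under this reading.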

Model Architecture and Scaling:

Llama 3 uses a standard dense Transformer architecture, avoiding more complex architectures like mixture-of-experts for better training stability.

The largest model (405B parameters) was determined to be approximately compute-optimal based on scaling laws for the given training budget.

Grouped Query Attention (GQA) with 8 key-value heads was used to improve inference speed and reduce key-value cache sizes.

An attention mask preventing self-attention between different documents within the same sequence was implemented.

The vocabulary was expanded to 128K tokens to better support non-English languages.
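The cross-document attention mask can be sketched as a block-diagonal causal mask: token i may attend to token j only if j comes at or before i and both belong to the same document. The pure-Python version below is illustrative; `doc_ids` (one document id per token) is a hypothetical input, and a real implementation would build this as a tensor or inside a fused attention kernel.

```python
def document_causal_mask(doc_ids: list[int]) -> list[list[bool]]:
    """Return mask[i][j] == True when token i is allowed to attend to token j.

    Attention is causal (j <= i) and restricted to tokens of the same document,
    so documents packed into one sequence cannot see each other.
    """
    n = len(doc_ids)
    return [
        [j <= i and doc_ids[j] == doc_ids[i] for j in range(n)]
        for i in range(n)
    ]


# Hypothetical packed sequence: two tokens from document 0, three from document 1.
mask = document_causal_mask([0, 0, 1, 1, 1])
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

The printed rows are `x....`, `xx...`, `..x..`, `..xx.`, `..xxx`: the first token of document 1 sees only itself, not the tokens of document 0 before it.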

Training Infrastructure and Efficiency:

Llama 3 was trained on Meta's production clusters using up to 16K H100 GPUs.

Various optimizations were implemented for efficient training at scale, including:

Tensor parallelism, pipeline parallelism, and data parallelism

Asynchronous communication for pipeline parallelism

Context parallelism for training on extremely long sequences

Custom CUDA kernels and PyTorch compilation for performance optimization
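Combining tensor, context, pipeline, and data parallelism means every GPU has a coordinate along each of the four axes. A minimal sketch of mapping a global rank to those coordinates follows. The ordering (TP varying fastest, then CP, PP, DP) and the sizes TP=8, CP=1, PP=16, DP=128 for 16,384 GPUs are assumptions that we believe match the paper's 8K-sequence configuration; check the paper's scaling-configuration table before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParallelDims:
    tp: int  # tensor parallel size
    cp: int  # context parallel size
    pp: int  # pipeline parallel size
    dp: int  # data parallel size

    @property
    def world_size(self) -> int:
        return self.tp * self.cp * self.pp * self.dp


def rank_to_coords(rank: int, dims: ParallelDims) -> dict[str, int]:
    """Map a global rank to (tp, cp, pp, dp) coordinates.

    Assumed ordering: TP varies fastest, then CP, then PP, then DP, so that
    consecutive ranks (e.g. the GPUs in one node) form a tensor-parallel group.
    """
    if not 0 <= rank < dims.world_size:
        raise ValueError(f"rank {rank} outside world of size {dims.world_size}")
    tp, rest = rank % dims.tp, rank // dims.tp
    cp, rest = rest % dims.cp, rest // dims.cp
    pp, dp = rest % dims.pp, rest // dims.pp
    return {"tp": tp, "cp": cp, "pp": pp, "dp": dp}


# Assumed configuration for 16,384 GPUs at 8K sequence length.
dims = ParallelDims(tp=8, cp=1, pp=16, dp=128)
print(dims.world_size)
print(rank_to_coords(8, dims))
print(rank_to_coords(dims.world_size - 1, dims))
```

Putting TP innermost keeps the most communication-heavy axis on the fast intra-node links, while the outermost DP axis tolerates slower inter-node bandwidth.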

Training Recipe:

The training process involved initial pre-training, long-context pre-training, and annealing stages.

Gradual increase of batch size and context length during training for stability and efficiency.

Adjustments to the data mix during training to improve performance on specific tasks.

Long-context pre-training to support context windows up to 128K tokens.

Learning rate annealing and checkpoint averaging in the final stages of training.
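Checkpoint averaging itself is simple: take the element-wise mean of the same parameters across several checkpoints. The sketch uses plain lists as hypothetical stand-ins for flattened tensors; a real implementation would operate on framework tensors shard by shard.

```python
def average_checkpoints(checkpoints: list[dict[str, list[float]]]) -> dict[str, list[float]]:
    """Element-wise mean of several checkpoints with identical parameter names and shapes."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    names = checkpoints[0].keys()
    for ckpt in checkpoints[1:]:
        if ckpt.keys() != names:
            raise ValueError("checkpoints have different parameter names")
    averaged = {}
    for name in names:
        tensors = [ckpt[name] for ckpt in checkpoints]
        if len({len(t) for t in tensors}) != 1:
            raise ValueError(f"shape mismatch for {name!r}")
        averaged[name] = [sum(values) / len(tensors) for values in zip(*tensors)]
    return averaged


# Hypothetical flattened parameters from three late-annealing checkpoints.
ckpts = [{"w": [1.0, 2.0]}, {"w": [2.0, 4.0]}, {"w": [3.0, 6.0]}]
print(average_checkpoints(ckpts))
```

Averaging checkpoints from the low-learning-rate end of training tends to smooth out step-to-step noise without any extra training compute.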

Post-Training Alignment:

Multiple rounds of supervised finetuning (SFT) and Direct Preference Optimization (DPO) were used for aligning the model with human preferences.

A new multi-message chat protocol was implemented to support advanced capabilities like tool use.

Reward modeling was used to guide the alignment process.

Rejection sampling with a reward model was employed to generate high-quality responses for finetuning.
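For reference, the standard per-pair DPO objective is -log σ(β · [(log π(y_w) - log π_ref(y_w)) - (log π(y_l) - log π_ref(y_l))]). The sketch below implements only that textbook form; the paper describes some modifications to the standard loss that are not shown here, and β = 0.1 is a placeholder.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Standard per-pair DPO loss: -log sigmoid(beta * (chosen margin - rejected margin)).

    Each *_logp is the summed log-probability of a full response under the
    policy being trained or the frozen reference model.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) == log(1 + exp(-z)); branch to avoid overflow in exp.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


# Policy identical to the reference: loss is log(2).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Policy has shifted probability toward the chosen response: loss drops.
print(dpo_loss(-10.0, -18.0, -12.0, -15.0))
```

Because the loss depends only on log-probability ratios against the reference model, DPO needs no separate reward model at training time, unlike the rejection-sampling step.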

Capability-Specific Optimizations:

Code: Training a specialized code expert, generating synthetic data, and implementing code-specific quality filters.

Multilinguality: Training a multilingual expert, sourcing high-quality multilingual data, and addressing language-specific challenges.

Math and Reasoning: Generating diverse prompts, augmenting data with step-wise reasoning traces, and implementing self-verification techniques.

Long Context: Incorporating synthetic long-context data in finetuning and carefully balancing short and long-context capabilities.

Tool Use: Training on diverse tool use scenarios and implementing specialized adapters for different tools.

Factuality: Developing knowledge probing techniques and focusing on aligning the model to "know what it knows."

Steerability: Enhancing the model's ability to follow system prompts for response length, format, tone, and persona.

Safety Considerations:

Implementing safety measures at every stage of development, from pre-training data filtering to post-training alignment.

Developing safety-specific finetuning data and techniques, including adversarial and borderline examples.

Balancing violation rate (VR) and false refusal rate (FRR) across different model sizes and capabilities.

Addressing safety challenges in multilingual settings, long-context scenarios, and tool use cases.

Conducting extensive red team testing and iterative improvements based on discovered vulnerabilities.

Developing system-level safety components like Llama Guard 3 for flexible deployment in various applications.
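VR and FRR pull in opposite directions: refusing more often lowers VR but raises FRR. A minimal sketch of computing both from labeled evaluation results follows; the field names are hypothetical, and the paper's actual prompt sets and labeling procedure are not reproduced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyEvalItem:
    prompt_is_adversarial: bool  # True: harmful request; False: benign or borderline request
    response_violates: bool      # response breaks the safety policy
    response_refused: bool       # model declined to help


def violation_rate(items: list[SafetyEvalItem]) -> float:
    """Share of adversarial prompts whose response violates the policy."""
    adversarial = [it for it in items if it.prompt_is_adversarial]
    if not adversarial:
        return 0.0
    return sum(it.response_violates for it in adversarial) / len(adversarial)


def false_refusal_rate(items: list[SafetyEvalItem]) -> float:
    """Share of benign or borderline prompts that the model refused."""
    benign = [it for it in items if not it.prompt_is_adversarial]
    if not benign:
        return 0.0
    return sum(it.response_refused for it in benign) / len(benign)


# Hypothetical labeled results.
results = [
    SafetyEvalItem(True, False, True),
    SafetyEvalItem(True, True, False),
    SafetyEvalItem(False, False, True),
    SafetyEvalItem(False, False, False),
    SafetyEvalItem(False, False, False),
    SafetyEvalItem(False, False, False),
]
print(f"VR = {violation_rate(results):.2f}, FRR = {false_refusal_rate(results):.2f}")
```

Reporting the pair together, rather than VR alone, keeps a model from looking "safe" simply by refusing everything.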

Evaluation and Benchmarking:

Comprehensive evaluation on a wide range of benchmarks covering different capabilities.

Conducting human evaluations to assess more subtle aspects of model performance.

Analyzing model robustness to changes in multiple-choice question setups and adversarial inputs.

Performing contamination analysis to estimate the impact of training data overlap with evaluation sets.
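The paper's contamination analysis is based on n-gram overlap (8-grams, as we understand it) between evaluation examples and the pre-training corpus. A simplified token-level overlap score might look like the sketch below; whitespace tokenization and the in-memory n-gram set are illustrative assumptions, since a corpus of trillions of tokens would need a scalable index.

```python
def ngram_set(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """All contiguous n-grams of a token sequence."""
    return {tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)}


def contamination_score(
    eval_tokens: list[str], train_ngrams: set[tuple[str, ...]], n: int = 8
) -> float:
    """Fraction of eval tokens that sit inside an n-gram also found in the training data."""
    if len(eval_tokens) < n:
        return 0.0
    covered = [False] * len(eval_tokens)
    for i in range(len(eval_tokens) - n + 1):
        if tuple(eval_tokens[i : i + n]) in train_ngrams:
            for j in range(i, i + n):
                covered[j] = True
    return sum(covered) / len(eval_tokens)


# Toy example with n=3 so the overlap is easy to see.
train = "the cat sat on the mat".split()
example = "a cat sat on the rug".split()
score = contamination_score(example, ngram_set(train, 3), n=3)
print(f"{score:.2f}")
```

Here 4 of the 6 tokens ("cat sat on the") fall inside shared 3-grams; examples above some score threshold can then be split out to measure how much contamination inflates benchmark results.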

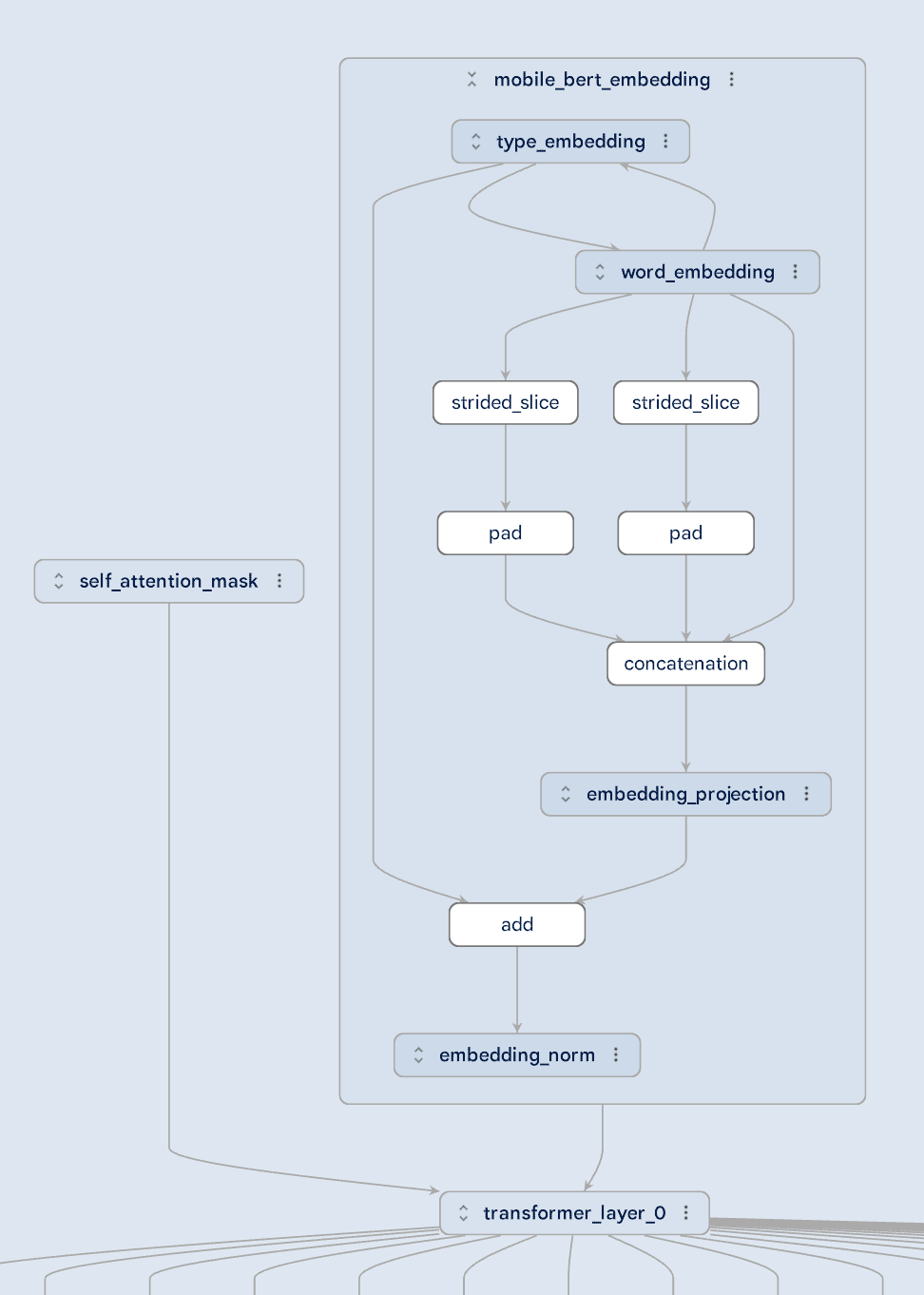

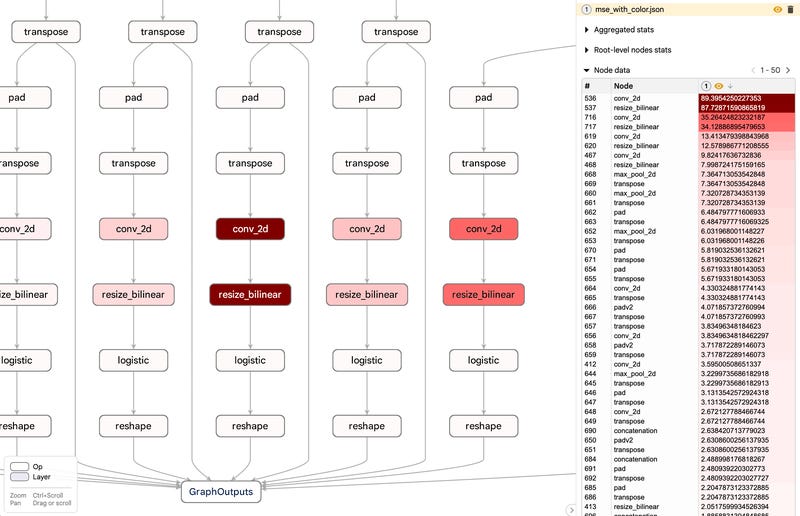

Google published an article about Model Explorer, a visualization tool that shows the different parts of a model and its graph in great detail.

Some of the advantages of this tool:

Hierarchical visualization: It breaks down complex models into hierarchical layers, making them easier to understand and navigate.

Scalability: The tool can handle extremely large models with tens of thousands of nodes, maintaining smooth 60 FPS performance.

GPU-accelerated rendering: Utilizes WebGL and three.js for efficient graph rendering, enabling seamless interaction with complex structures.

Multi-format support: Compatible with various graph formats used by popular frameworks like JAX, PyTorch, TensorFlow, and TensorFlow Lite.

Side-by-side comparison: Allows users to compare different versions of a model, which is particularly useful for debugging conversion errors.

Data overlay: Supports overlaying per-node data for performance and numeric accuracy analysis.

Open-source: Google has made Model Explorer available as an open-source tool, promoting transparency and community contributions.

Some of the limitations for the model explorer are:

Learning curve: The hierarchical approach, while powerful, may require some time for users to become familiar with navigation and interpretation.

Specificity: As a specialized tool for large model visualization, it may not be as versatile for general-purpose machine learning tasks compared to broader platforms like TensorBoard.

Resource requirements: The GPU-accelerated rendering, while enabling smooth performance, may demand more computational resources than simpler visualization tools.

Model Explorer distinguishes itself from other visualization tools in several ways:

TensorBoard: While TensorBoard offers a broader suite of functionalities for ML experimentation, Model Explorer excels at handling very large models and provides a more intuitive hierarchical structure.

Netron: Compared to Netron, a popular general-purpose neural network visualization tool, Model Explorer is specifically designed to handle large-scale models effectively.

Traditional tools: Many existing visualization tools struggle with the scale and complexity of modern ML models, often resulting in rendering failures or unmanageable visual complexity. Model Explorer overcomes these limitations with its hierarchical approach and GPU-accelerated rendering.

Performance: The use of GPU-accelerated and instanced rendering techniques allows Model Explorer to handle much larger graphs smoothly compared to traditional SVG-based rendering used by many other tools.

Specialized features: Unique features like side-by-side model comparison and per-node data overlay are particularly useful for debugging conversion errors and performance issues in large models.

The code is available on GitHub.

Support for Deep Learning Libraries

Model Explorer demonstrates extensive support for various deep learning frameworks:

JAX: Supports graph formats used by JAX, enabling visualization of models built with this framework.

PyTorch: Compatible with PyTorch graph formats, allowing users to visualize and analyze PyTorch models.

TensorFlow: Fully supports TensorFlow graph formats, making it an excellent tool for TensorFlow users.

TensorFlow Lite: Provides support for TensorFlow Lite, which is crucial for visualizing models optimized for edge devices and mobile platforms.

Multi-framework compatibility: The ability to work with multiple frameworks makes Model Explorer versatile for teams using different tools or migrating between frameworks.

Tutorials

Anthropic's Prompt Engineering Interactive Tutorial

This course is intended to provide you with a comprehensive step-by-step understanding of how to engineer optimal prompts within Claude.

After completing this course, you will be able to:

Master the basic structure of a good prompt

Recognize common failure modes and learn the '80/20' techniques to address them

Understand Claude's strengths and weaknesses

Build strong prompts from scratch for common use cases

🗣️ Large Language Model Course

The LLM course is divided into three parts:

🧩 LLM Fundamentals covers essential knowledge about mathematics, Python, and neural networks.

🧑‍🔬 The LLM Scientist focuses on building the best possible LLMs using the latest techniques.

👷 The LLM Engineer focuses on creating LLM-based applications and deploying them.

For an interactive version of this course, the course author created two LLM assistants that answer questions and test your knowledge in a personalized way, including:

🤗 HuggingChat Assistant: Free version using Mixtral-8x7B.

Libraries

AutoMix’s motivation:

Send a query to a small language model (SLM) and obtain a noisy label on the correctness of its answer via few-shot self-verification performed by the same SLM.

Use a meta-verifier to double-check the verifier's output, and route the query to a larger language model (LLM) if needed.
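The routing loop described above can be sketched with plain callables. The model functions are hypothetical stubs, and the fixed confidence threshold is a simple stand-in for AutoMix's meta-verifier, not the library's actual API.

```python
from typing import Callable


def automix_route(
    query: str,
    slm_answer: Callable[[str], str],
    slm_verify: Callable[[str, str], bool],
    llm_answer: Callable[[str], str],
    num_verifications: int = 8,
    threshold: float = 0.5,
) -> tuple[str, str]:
    """Answer with the small model, self-verify, and escalate to the large model if unsure."""
    answer = slm_answer(query)
    # Few-shot self-verification: the same SLM judges its own answer several times
    # (sampled, so votes can differ); the share of "correct" votes is a noisy confidence.
    votes = [slm_verify(query, answer) for _ in range(num_verifications)]
    confidence = sum(votes) / num_verifications
    # Stand-in meta-verifier: a fixed confidence threshold.
    if confidence >= threshold:
        return answer, "slm"
    return llm_answer(query), "llm"


# Hypothetical stub models for illustration.
confident = automix_route("2+2?", lambda q: "4", lambda q, a: True, lambda q: "4 (LLM)")
unsure = automix_route("prove P != NP", lambda q: "done", lambda q, a: False, lambda q: "open problem")
print(confident, unsure)
```

The cost saving comes from the first branch: every query the SLM answers confidently never reaches the expensive model.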

Prompt. Generate Synthetic Data. Train & Align Models.

DataDreamer is a powerful open-source Python library for prompting, synthetic data generation, and training workflows. It is designed to be simple, extremely efficient, and research-grade.

RLVF: Learning from Verbal Feedback without Overgeneralization

This repository includes a reference implementation of Contextualized Critiques with Constrained Preference Optimization (C3PO), a novel technique to align LLMs with user preferences from a single sentence of feedback and without overgeneralization. The repository also includes implementations of relevant baselines and other components necessary to explore the techniques proposed in the paper. See the project website for more information.

supertree is a Python package designed to visualize decision trees in an interactive and user-friendly way within Jupyter Notebooks, Jupyter Lab, Google Colab, and any other notebooks that support HTML rendering. With this tool, you can not only display decision trees, but also interact with them directly within your notebook environment. Key features include:

ability to zoom and pan through large trees,

collapse and expand selected nodes,

explore the structure of the tree in an intuitive and visually appealing manner.

Pretraining data detection problem: given a piece of text and black-box access to an LLM without knowing its pretraining data, can we determine whether the model was trained on the provided text? To facilitate the study, the authors built a dynamic benchmark, WikiMIA, to systematically evaluate detection methods, and proposed Min-K% Prob 🕵️, a method for detecting undisclosed pretraining data from large language models.
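The core of Min-K% Prob is small: score a passage by the average log-probability of its least likely tokens, then compare the score against a threshold. The sketch assumes the per-token log-probabilities were already obtained from the model; how to get them and how to choose the threshold (for example on WikiMIA) are left out.

```python
def min_k_percent_prob(token_logprobs: list[float], k: float = 0.2) -> float:
    """Mean log-probability of the k fraction of tokens the model found least likely.

    A higher (less negative) score means even the "hardest" tokens were
    unsurprising to the model, which Min-K% Prob treats as evidence of membership.
    """
    if not token_logprobs:
        raise ValueError("need at least one token")
    if not 0.0 < k <= 1.0:
        raise ValueError("k must be in (0, 1]")
    count = max(1, int(len(token_logprobs) * k))
    lowest = sorted(token_logprobs)[:count]
    return sum(lowest) / count


# Hypothetical per-token log-probs for a 5-token passage.
print(min_k_percent_prob([-0.1, -0.2, -5.0, -0.3, -4.0], k=0.4))
```

Focusing on the lowest-probability tokens avoids the score being dominated by common words that any model predicts well, seen or unseen.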

T-GATE: Temporally Gating Attention to Accelerate Diffusion Model for Free! 🥳

Researchers explore the role of the attention mechanism during inference in text-conditional diffusion models. Empirical observations suggest that cross-attention outputs converge to a fixed point after several inference steps. The convergence time naturally divides the entire inference process into two phases: an initial phase for planning text-oriented visual semantics, which are then translated into images in a subsequent fidelity-improving phase. Cross-attention is essential in the initial phase but almost irrelevant thereafter. In contrast, self-attention initially plays a minor role but becomes crucial in the second phase. These findings yield a simple, training-free method called temporally gating the attention (TGATE), which efficiently generates images by caching and reusing attention outputs at scheduled time steps. Experimental results show that when applied to various existing text-conditional diffusion models, TGATE accelerates them by 10%–50%.
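The gating idea can be shown as control flow in a toy denoising loop: compute cross-attention during the first `gate_step` steps, then keep reusing the cached output. Latents are scalars and the attention and update functions are hypothetical stand-ins; in a real pipeline the cache would hold per-layer cross-attention output tensors.

```python
from typing import Callable


def tgate_denoise(
    latent: float,
    timesteps: list[int],
    cross_attn: Callable[[float, int], float],
    self_attn: Callable[[float, int], float],
    update: Callable[[float, float, float], float],
    gate_step: int,
) -> float:
    """Toy denoising loop that stops recomputing cross-attention after `gate_step` steps."""
    if not 1 <= gate_step <= len(timesteps):
        raise ValueError("gate_step must be between 1 and len(timesteps)")
    cached_cross = None
    for i, t in enumerate(timesteps):
        if i < gate_step:
            # Semantics-planning phase: compute cross-attention and cache it.
            cached_cross = cross_attn(latent, t)
        # Fidelity-improving phase (i >= gate_step): reuse the cached output.
        latent = update(latent, self_attn(latent, t), cached_cross)
    return latent


calls = {"cross": 0}


def fake_cross(x: float, t: int) -> float:
    calls["cross"] += 1  # count how often cross-attention is actually computed
    return 0.1 * t


result = tgate_denoise(
    1.0,
    list(range(25, 0, -1)),
    fake_cross,
    lambda x, t: 0.01 * x,
    lambda x, s, c: x - s - 0.001 * c,
    gate_step=10,
)
print(calls["cross"], result)
```

With 25 steps and `gate_step=10`, cross-attention runs only 10 times; the skipped computations are where the speedup comes from.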

Differential Language Analysis ToolKit

DLATK is an end-to-end human text analysis package for Python 3. It is specifically suited for social media, psychology, and health research, and was originally developed for projects at the University of Pennsylvania, Stony Brook University, and Stanford University. It has been used in over 100 peer-reviewed publications (many from before there was an article to reference).

DLATK is designed to handle the multi-level nature of language (words belong to documents, written by people, within communities), which makes it particularly useful for psychological and social science.

Some examples of what DLATK can perform:

linguistic feature extraction (i.e. turning text into features or variables for analyses)

differential language analysis (i.e. finding the language that is most associated with psychological or health variables)

wordcloud visualization

statistical- and machine learning-based supervised prediction (regression and classification)

statistical- and machine learning-based dimensionality reduction and clustering

mediation analysis

contextual embeddings: using deep learning transformers to produce message-, user-, or group-level embeddings

part-of-speech tagging
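The core idea of differential language analysis can be sketched in a few lines: compute each word's relative frequency per person, then correlate it with a per-person outcome. This is not DLATK's API; the data is hypothetical, and a full analysis would typically add control variables and multiple-comparison correction.

```python
import math
from collections import Counter


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation; returns 0.0 when either variable is constant."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def differential_language_analysis(
    texts: dict[str, str], outcome: dict[str, float], min_users: int = 2
) -> list[tuple[str, float]]:
    """Correlate each word's per-user relative frequency with a per-user outcome."""
    users = sorted(texts)
    freqs = {}
    for user in users:
        counts = Counter(texts[user].lower().split())
        total = sum(counts.values())
        freqs[user] = {w: c / total for w, c in counts.items()}
    # Only analyze words used by enough distinct users.
    usage = Counter(w for user in users for w in freqs[user])
    results = []
    for word, n_users in usage.items():
        if n_users < min_users:
            continue
        xs = [freqs[u].get(word, 0.0) for u in users]
        ys = [outcome[u] for u in users]
        results.append((word, pearson(xs, ys)))
    return sorted(results, key=lambda item: item[1], reverse=True)


# Hypothetical users and a hypothetical well-being score per user.
texts = {"u1": "happy happy sad", "u2": "sad sad happy", "u3": "happy"}
outcome = {"u1": 0.8, "u2": 0.1, "u3": 0.9}
ranked = differential_language_analysis(texts, outcome)
print(ranked)
```

Working at the user level (rather than per message) mirrors the multi-level view above: the outcome belongs to the person, so language is aggregated to the person first.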

gemma.cpp

gemma.cpp is a lightweight, standalone C++ inference engine for the Gemma foundation models from Google.

For additional information about Gemma, see ai.google.dev/gemma. Model weights, including gemma.cpp specific artifacts, are available on Kaggle.

Insanely Fast Whisper

An opinionated CLI to transcribe audio files with Whisper on-device! Powered by 🤗 Transformers, Optimum & flash-attn

TL;DR - Transcribe 150 minutes (2.5 hours) of audio in less than 98 seconds - with OpenAI's Whisper Large v3. Blazingly fast transcription is now a reality!⚡️

pipx install insanely-fast-whisper==0.0.15 --force

The YaRN repository contains the code and data for the YaRN context window extension method.

Paper (ICLR 2024): YaRN: Efficient Context Window Extension of Large Language Models

Old Preprint (arXiv)
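YaRN combines "NTK-by-parts" interpolation of RoPE frequencies with an attention-temperature adjustment. The sketch below computes both under our reading of the paper: each rotary frequency θ_d is blended between its original value and θ_d / s using a ramp on r = L / λ_d (L is the original context length, λ_d = 2π / θ_d), and queries and keys are scaled by sqrt(1/t) = 0.1 · ln(s) + 1. The defaults (α = 1, β = 32, a 4K original context, base 10000) are assumptions for a LLaMA-style model; the repository has the reference implementation.

```python
import math


def yarn_rope_frequencies(
    head_dim: int = 128,
    base: float = 10000.0,
    scale: float = 8.0,
    original_ctx: int = 4096,
    alpha: float = 1.0,
    beta: float = 32.0,
) -> list[float]:
    """RoPE frequencies after NTK-by-parts interpolation."""
    freqs = []
    for d in range(head_dim // 2):
        theta = base ** (-2 * d / head_dim)
        wavelength = 2 * math.pi / theta
        r = original_ctx / wavelength  # full rotations within the original context
        if r < alpha:
            gamma = 0.0  # long wavelengths: fully interpolate (theta / scale)
        elif r > beta:
            gamma = 1.0  # short wavelengths: leave untouched
        else:
            gamma = (r - alpha) / (beta - alpha)  # linear ramp in between
        freqs.append((1 - gamma) * theta / scale + gamma * theta)
    return freqs


def yarn_mscale(scale: float) -> float:
    """sqrt(1/t): factor applied to queries and keys, so logits scale by its square."""
    return 0.1 * math.log(scale) + 1.0


freqs = yarn_rope_frequencies()
print(freqs[0], freqs[-1], yarn_mscale(8.0))
```

High-frequency dimensions, which encode local token order, are left alone, while only the low-frequency dimensions that never completed a rotation during pre-training are stretched to cover the longer context.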
