Quantization Aware Training in PyTorch

Photoplethysmography (PPG) data to predict cardiovascular risk through Deep Learning

Articles

Quantization in deep learning refers to the process of reducing the precision of the numbers used to represent the model's parameters and activations. Typically, deep learning models use 32-bit floating-point numbers (float32) for computations. Quantization aims to use lower precision formats, such as 16-bit floating-point (float16), 8-bit integers (int8), or even lower bit-widths.
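To make this concrete, here is a minimal sketch of affine (asymmetric) per-tensor quantization from float32 to int8, written with plain PyTorch tensor ops. The helpers `quantize_int8` and `dequantize_int8` are illustrative names, not a PyTorch API. Each value is stored as a 1-byte integer, and one shared `scale` and `zero_point` map the integers back to approximate floats.

```python
import torch


def quantize_int8(x: torch.Tensor):
    """Affine per-tensor quantization of a float tensor to int8 (illustrative helper)."""
    qmin, qmax = -128, 127
    # Extend the range to include 0 so that zero is exactly representable.
    x_min = min(x.min().item(), 0.0)
    x_max = max(x.max().item(), 0.0)
    scale = max(x_max - x_min, 1e-8) / (qmax - qmin)
    zero_point = int(round(qmin - x_min / scale))
    q = torch.clamp(torch.round(x / scale) + zero_point, qmin, qmax).to(torch.int8)
    return q, scale, zero_point


def dequantize_int8(q: torch.Tensor, scale: float, zero_point: int) -> torch.Tensor:
    """Map int8 codes back to approximate float32 values."""
    return (q.to(torch.float32) - zero_point) * scale


torch.manual_seed(0)
x = torch.randn(1000)                      # float32 "weights"
q, scale, zero_point = quantize_int8(x)
x_hat = dequantize_int8(q, scale, zero_point)

print(x.element_size(), q.element_size())  # bytes per element: 4 1
print(f"max abs error: {(x - x_hat).abs().max().item():.4f} (scale = {scale:.4f})")
```

The rounding error per element is bounded by roughly half of `scale`, which is why a wider value range (larger `scale`) means a less precise quantized tensor.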

Quantization is used because, for heavy inference workloads, it reduces memory and compute costs. Those savings can then be spent on a larger or more complex model without regressing overall model accuracy as much.

There are multiple flavors of quantization:

Weight Quantization: Reduces the precision of model parameters.

Activation Quantization: Reduces the precision of activation outputs.

Full Integer Quantization: Converts both weights and activations to integers.

Mixed Precision: Uses different precisions for different parts of the model.

Granularity of the quantization within a particular method can also be customized:

Per-Tensor: Applies the same scale and zero-point across an entire tensor.

Per-Channel: Applies different scales and zero-points for each channel in a tensor.

Per-Group: Applies quantization to groups of channels or neurons.
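The granularities above differ only in how many values share one scale. A minimal sketch, assuming symmetric quantization (zero-point fixed at 0) for brevity; `quantize_symmetric` is a hypothetical helper that fake-quantizes a 2-D weight and reports how many scales each granularity needs.

```python
import torch


def quantize_symmetric(w: torch.Tensor, bits: int, granularity: str, group_size: int = 32):
    """Fake-quantize a 2-D weight of shape (out_features, in_features) symmetrically.

    Returns the dequantized weight and the scales that were used (illustrative helper).
    """
    qmax = 2 ** (bits - 1) - 1
    out_f, in_f = w.shape
    if granularity == "tensor":
        rows = w.reshape(1, -1)                 # one scale for the whole tensor
    elif granularity == "channel":
        rows = w                                # one scale per output channel (row)
    elif granularity == "group":
        if in_f % group_size != 0:
            raise ValueError("in_features must be divisible by group_size")
        rows = w.reshape(-1, group_size)        # one scale per group of input channels
    else:
        raise ValueError(f"unknown granularity: {granularity!r}")
    scale = rows.abs().amax(dim=1, keepdim=True).clamp(min=1e-8) / qmax
    q = torch.clamp(torch.round(rows / scale), -qmax - 1, qmax)
    return (q * scale).reshape(out_f, in_f), scale.flatten()


torch.manual_seed(0)
# Rows with very different magnitudes, a common situation across output channels.
w = torch.randn(16, 64) * torch.logspace(-2, 1, 16).unsqueeze(1)

results = {}
for g in ("tensor", "channel", "group"):
    w_hat, scales = quantize_symmetric(w, bits=8, granularity=g)
    results[g] = (scales.numel(), (w - w_hat).pow(2).mean().item())
    print(f"{g:8s} scales={results[g][0]:3d} mse={results[g][1]:.2e}")
```

With a single per-tensor scale, rows with small values are rounded almost entirely to zero; per-channel and per-group scales avoid that at the cost of storing more scales.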

Quantizing from float32 to int8 shrinks the weights of large models such as GPT-3 (175B parameters) or BERT-Large (340M parameters) by 75%, and lower bit-widths shrink them further. This matters because memory access often bottlenecks inference speed: quantized models require less memory bandwidth, which leads to faster inference.
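The 75% figure follows directly from the bit-widths: going from 32 to 8 bits per parameter keeps a quarter of the bytes, so GPT-3's weights drop from about 700 GB to 175 GB. This back-of-the-envelope sketch counts weights only and ignores the extra storage for scales and zero-points, as well as activations and caches.

```python
def weight_memory_gb(num_params: float, bits: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits / 8 / 1e9


MODELS = {"GPT-3": 175e9, "BERT-Large": 340e6}

for name, n_params in MODELS.items():
    fp32 = weight_memory_gb(n_params, 32)
    for bits in (16, 8, 4):
        quantized = weight_memory_gb(n_params, bits)
        saving = 1 - quantized / fp32
        print(f"{name}: float32 {fp32:.2f} GB -> {bits}-bit {quantized:.2f} GB ({saving:.1%} smaller)")
```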

Further, many AI accelerators, such as Google's TPUs, NVIDIA's Tensor Cores, and Intel's Nervana NNP, are optimized for low-precision arithmetic.

Quantization also makes it possible to run sophisticated models on resource-constrained devices, because lower-precision computations consume significantly less energy. For example, running BERT models on smartphones for on-device natural language processing calls for far lower energy use than server deployments, since smartphones are heavily resource-constrained.

Quantization Techniques

There are multiple ways to apply quantization. If quantization is applied after training, it is called Post-Training Quantization (PTQ). PTQ is generally the most commonly used quantization technique across the board: it is simple to apply, needs no retraining, and does not change the training process at all. However, it also reduces model accuracy, by an amount that depends on the quantization scheme.
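For comparison, PTQ in eager-mode PyTorch can be a single call. The sketch below uses `torch.ao.quantization.quantize_dynamic`, which converts the weights of the listed module types to int8 after training and quantizes activations on the fly at inference time; the toy two-layer model stands in for a trained network. Recent PyTorch releases are moving quantization work toward the torchao library, so these eager-mode APIs may emit deprecation warnings.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import quantize_dynamic

torch.manual_seed(0)

# A stand-in for an already-trained float32 model.
model = nn.Sequential(nn.Linear(64, 128), nn.ReLU(), nn.Linear(128, 10)).eval()

# Post-training dynamic quantization: Linear weights become int8 now,
# activations are quantized dynamically at inference time. No retraining is involved.
qmodel = quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)

x = torch.randn(4, 64)
with torch.no_grad():
    ref = model(x)
    out = qmodel(x)

print(qmodel)
print(f"max abs difference vs. float32: {(ref - out).abs().max().item():.4f}")
```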

The other technique, which is less commonly used but can be less damaging to model accuracy, is called Quantization-Aware Training (QAT). QAT incorporates quantization effects into the training process, which is why it is not “post-training” quantization: training “emulates” the quantization error to some degree, so that when the model is actually quantized, its accuracy suffers less.

PTQ is well known and well supported, whereas QAT is less well known and less supported across frameworks. To shed some light on it, PyTorch published a blog post discussing Quantization-Aware Training (QAT) for large language models (LLMs).

The post explains why QAT is needed over PTQ:

Improved Model Quality: QAT significantly recovers accuracy and perplexity degradation compared to post-training quantization (PTQ).

Maintained Model Size and Speed: QAT models achieve better performance while maintaining the same model size and on-device inference/generation speeds as PTQ models.

Compatibility with Existing Workflows: QAT-produced models can be used as drop-in replacements for PTQ models, reusing existing backend delegation logic and kernels.

Effectiveness at Lower Bit-widths: QAT shows significant improvements even for challenging 2-bit and 3-bit weight-only quantization scenarios.

Integration with Fine-tuning: The QAT flow is integrated with PyTorch's fine-tuning APIs, allowing for easy application during model fine-tuning.

Their post covers the process of applying QAT through PyTorch APIs:

Fake Quantization: QAT simulates quantization numerics during training without actually casting to lower bit-widths.

API Structure: The QAT API in torchao involves two main steps:

prepare: Applies transformations to linear layers to simulate quantization numerics.

convert: Actually quantizes the layers to lower bit-widths after training.

Supported Quantization Scheme: Currently supports int8 dynamic per-token activations + int4 grouped per-channel weights (8da4w) for linear layers.

Straight-Through Estimator (STE): Used in the backward pass to estimate gradients flowing through non-smooth quantization functions.
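The steps above can be sketched in plain PyTorch to make the moving parts visible. This is not torchao's implementation: `FakeQuantLinear`, `prepare`, and `convert_weight` are hypothetical names, both quantizers are symmetric for simplicity (torchao's exact 8da4w numerics may differ), and the STE is written with the common `x + (x_q - x).detach()` trick, which makes the forward value quantized while the gradient passes through unchanged.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def fake_quant_ste(x, scale, qmin, qmax):
    """Quantize-dequantize in the forward pass; pass gradients straight through (STE)."""
    x_q = torch.clamp(torch.round(x / scale), qmin, qmax) * scale
    # Value equals x_q, but d(output)/dx == 1 because the rounding term is detached.
    return x + (x_q - x).detach()


def int4_group_scale(w, group_size):
    """Symmetric int4 scales, one per group of `group_size` input channels."""
    groups = w.reshape(w.shape[0], -1, group_size)
    return groups, groups.abs().amax(dim=-1, keepdim=True).clamp(min=1e-6) / 7


def fake_quant_weight_int4(w, group_size=32):
    groups, scale = int4_group_scale(w, group_size)
    return fake_quant_ste(groups, scale, -8, 7).reshape(w.shape)


def fake_quant_act_int8_per_token(x):
    # Scales are computed dynamically from the current activations, one per token (last dim).
    scale = x.abs().amax(dim=-1, keepdim=True).clamp(min=1e-6) / 127
    return fake_quant_ste(x, scale, -128, 127)


class FakeQuantLinear(nn.Linear):
    """Linear layer simulating int8 dynamic activations + int4 grouped weights (hypothetical)."""

    def __init__(self, in_features, out_features, bias=True, group_size=32):
        super().__init__(in_features, out_features, bias)
        self.group_size = group_size

    def forward(self, x):
        return F.linear(fake_quant_act_int8_per_token(x),
                        fake_quant_weight_int4(self.weight, self.group_size), self.bias)


def prepare(model, group_size=32):
    """Swap every nn.Linear for a FakeQuantLinear carrying the same weights."""
    for name, child in model.named_children():
        if type(child) is nn.Linear:
            fq = FakeQuantLinear(child.in_features, child.out_features,
                                 child.bias is not None, group_size)
            fq.load_state_dict(child.state_dict())
            setattr(model, name, fq)
        else:
            prepare(child, group_size)
    return model


@torch.no_grad()
def convert_weight(w, group_size=32):
    """After training: real int4 codes (held in int8 storage) plus per-group scales."""
    groups, scale = int4_group_scale(w, group_size)
    codes = torch.clamp(torch.round(groups / scale), -8, 7).to(torch.int8)
    return codes.reshape(w.shape), scale.squeeze(-1)


torch.manual_seed(0)
model = prepare(nn.Sequential(nn.Linear(64, 64), nn.ReLU(), nn.Linear(64, 8)))
opt = torch.optim.SGD(model.parameters(), lr=0.01)
x, y = torch.randn(16, 64), torch.randn(16, 8)
loss = F.mse_loss(model(x), y)
loss.backward()          # gradients flow through the rounding thanks to the STE
opt.step()
codes, scales = convert_weight(model[0].weight)
```

The extra quantize-dequantize work in every forward pass is also where QAT's training-speed and memory overhead come from.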

They report experiment results on Llama2 and Llama3 models of different sizes:

Llama2-7B:

Recovered 62% of normalized accuracy degradation on hellaswag

Recovered 58% and 57% of word and byte perplexity degradation on wikitext

Llama3-8B:

Recovered 96% of normalized accuracy degradation on hellaswag

Recovered 68% and 65% of word and byte perplexity degradation on wikitext

Recovered 51% of normalized accuracy degradation on arc_challenge

Lower Bit Weight-Only Quantization:

2-bit: Recovered 53% of normalized accuracy degradation on hellaswag, 99% and 89% of word and byte perplexity degradation on wikitext

3-bit: Recovered 63% of normalized accuracy degradation on hellaswag, 72% and 65% of word and byte perplexity degradation on wikitext
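“Recovered X% of the degradation” is usually read as the share of the gap between the unquantized baseline and the PTQ model that QAT closes. Assuming that is the definition used in the post, the metric works as below; the numbers are hypothetical and are not taken from the post.

```python
def recovered_fraction(baseline: float, ptq: float, qat: float, higher_is_better: bool = True) -> float:
    """Share of the PTQ degradation (vs. the unquantized baseline) that QAT wins back."""
    if higher_is_better:                    # e.g. accuracy on hellaswag
        return (qat - ptq) / (baseline - ptq)
    return (ptq - qat) / (ptq - baseline)   # e.g. perplexity on wikitext


# Hypothetical numbers, for illustration only (not from the post):
print(recovered_fraction(baseline=0.80, ptq=0.70, qat=0.76))                          # accuracy
print(recovered_fraction(baseline=10.0, ptq=12.0, qat=10.5, higher_is_better=False))  # perplexity
```

A value of 1.0 would mean QAT fully matches the unquantized model; 0.0 would mean it is no better than PTQ.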

They also used some further optimization techniques. Selective quantization of layers based on their sensitivity is especially important for limiting the drop in model accuracy:

Selective Layer Quantization: For 2-bit and 3-bit quantization, skipping quantization for the first 3 and last 2 layers (most sensitive) significantly improved results.

Group Size Adjustment: Used a group size of 32 for 2-bit and 3-bit quantization experiments for finer-grained quantization.
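Both tweaks are easy to express. A minimal sketch, assuming “layers” means transformer blocks and using a hypothetical 32-block model with a 4096×4096 projection: skipping the first 3 and last 2 blocks leaves the middle ones quantized, and a smaller group size means more scales per weight matrix, i.e. finer granularity at the cost of extra storage. Both function names are illustrative.

```python
def layers_to_quantize(num_layers: int, skip_first: int = 3, skip_last: int = 2) -> list[int]:
    """Indices of the layers that get quantized when the most sensitive ends are skipped."""
    return [i for i in range(num_layers) if skip_first <= i < num_layers - skip_last]


def num_group_scales(out_features: int, in_features: int, group_size: int) -> int:
    """How many scales a grouped quantizer stores for one weight matrix."""
    if in_features % group_size:
        raise ValueError("in_features must be divisible by group_size")
    return out_features * (in_features // group_size)


selected = layers_to_quantize(32)
print(f"quantize {len(selected)} of 32 layers: {selected[0]}..{selected[-1]}")
for group_size in (32, 128):
    print(group_size, num_group_scales(4096, 4096, group_size))
```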

QAT is not all advantages, though. Training is slower, because it has to perform extra operations to “emulate” quantization throughout training, and memory usage per GPU also increases:

Speed: 8da4w QAT fine-tuning is approximately 34% slower than regular full fine-tuning.

Memory: With activation checkpointing, memory increase per GPU is around 2.35 GB.

Computational Impact: QAT inserts many fake quantize operations, adding overhead to both fine-tuning speed and memory usage.

The burden of cardiovascular disease (CVD) on the world’s economy is very large, and it is projected to increase. Shanghai is one example:

From 2008 to 2012, there were increased yearly trends for both hospitalizations (from 250,354 to 322,676) and total costs (from US $ 388.52 to 721.58 million per year in 2014 currency) in Shanghai. Cost per CVD hospitalization in 2012 averaged US $ 2236.29, with the highest being for chronic rheumatic heart diseases (US $ 4710.78). Most direct medical costs were spent on medication. By the end of 2030, the average cost per visit per month for all CVDs was estimated to be US $ 4042.68 (95% CI: US $ 3795.04–4290.31), and the total health expenditure for CVDs would reach over US $1.12 billion (95% CI: US $ 1.05–1.19 billion) without additional government interventions.

To reduce this burden and improve people’s quality of life, accessible and affordable heart-health screening methods are needed. Traditional methods like electrocardiograms (ECGs) and echocardiograms, while effective, are often expensive and require specialized equipment and trained personnel, making them less accessible in low-resource settings.

Photoplethysmography (PPG) is a simple and low-cost optical technique used to detect blood volume changes in the microvascular bed of tissue. It is commonly used in wearable devices like smartwatches and fitness trackers to monitor heart rate.
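Heart-rate monitoring from PPG comes down to finding the periodic pulse peaks in the optical signal. A minimal sketch on an idealized, noise-free synthetic trace: a 1.2 Hz sine (72 beats per minute) sampled at a hypothetical 50 Hz. Real PPG needs filtering and more robust peak detection; `estimate_heart_rate_bpm` is an illustrative helper.

```python
import numpy as np


def estimate_heart_rate_bpm(ppg: np.ndarray, fs: float) -> float:
    """Estimate heart rate from the mean interval between pulse peaks."""
    x = ppg - ppg.mean()
    mid = x[1:-1]
    # Local maxima above the signal mean are treated as pulse peaks.
    is_peak = (mid > x[:-2]) & (mid >= x[2:]) & (mid > 0)
    peaks = np.flatnonzero(is_peak) + 1
    if len(peaks) < 2:
        raise ValueError("need at least two pulse peaks")
    mean_interval_s = np.diff(peaks).mean() / fs
    return 60.0 / mean_interval_s


fs = 50.0                                        # samples per second
t = np.arange(0, 10, 1 / fs)                     # 10 seconds of signal
ppg = 1.0 + 0.5 * np.sin(2 * np.pi * 1.2 * t)    # idealized pulse at 1.2 Hz (72 bpm)
print(f"{estimate_heart_rate_bpm(ppg, fs):.1f} bpm")
```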

PPG technology offers several advantages:

Non-Invasive: It uses light to measure blood flow, making it non-invasive.

Cost-Effective: PPG sensors are inexpensive and can be integrated into widely used consumer devices.

Accessibility: Given its integration into everyday devices, PPG can be used for continuous monitoring, providing a wealth of data for analysis.

This is where Google Research comes in: it published a post on leveraging PPG data to develop algorithms that can detect early signs of cardiovascular disease. The research involves collecting large datasets of PPG signals from various sources and using machine learning models to analyze these signals.

Their ML model is built as follows:

They first build embeddings of PPGs by training a 1D-ResNet18 model to predict multiple attributes of an individual (e.g., age, sex, BMI, hypertension status) using only the PPG signal.

In this stage, the model learns to predict these attributes from the PPG signal alone. One can think of it as a form of content understanding: the model learns a representation of what it ingests (the signal) that is informative about each of the labels it is trained to predict.
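The multi-task embedding idea can be sketched as a shared 1D convolutional encoder with one small head per attribute. This is a tiny stand-in, not the 1D-ResNet18 used in the post; the attribute set, layer sizes, equal loss weighting, and the name `PPGEncoder` are assumptions for illustration, and the inputs are random tensors rather than real PPG windows.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PPGEncoder(nn.Module):
    """Shared 1D-CNN encoder with one prediction head per attribute (illustrative)."""

    def __init__(self, embed_dim: int = 64):
        super().__init__()
        self.backbone = nn.Sequential(
            nn.Conv1d(1, 32, kernel_size=7, stride=2, padding=3), nn.BatchNorm1d(32), nn.ReLU(),
            nn.Conv1d(32, embed_dim, kernel_size=5, stride=2, padding=2),
            nn.BatchNorm1d(embed_dim), nn.ReLU(),
            nn.AdaptiveAvgPool1d(1), nn.Flatten(),        # -> (batch, embed_dim)
        )
        # Regression heads (age, BMI) and binary heads (sex, hypertension) that output logits.
        self.heads = nn.ModuleDict({name: nn.Linear(embed_dim, 1)
                                    for name in ("age", "bmi", "sex", "hypertension")})

    def forward(self, ppg: torch.Tensor):
        embedding = self.backbone(ppg)                    # ppg: (batch, 1, samples)
        preds = {name: head(embedding).squeeze(-1) for name, head in self.heads.items()}
        return embedding, preds


def multitask_loss(preds, targets):
    """Sum of per-task losses: MSE for continuous targets, BCE for binary ones."""
    loss = F.mse_loss(preds["age"], targets["age"]) + F.mse_loss(preds["bmi"], targets["bmi"])
    for name in ("sex", "hypertension"):
        loss = loss + F.binary_cross_entropy_with_logits(preds[name], targets[name])
    return loss


torch.manual_seed(0)
model = PPGEncoder()
ppg = torch.randn(8, 1, 500)                                # 8 random stand-in PPG windows
targets = {"age": torch.randn(8), "bmi": torch.randn(8),    # e.g. standardized values
           "sex": torch.randint(0, 2, (8,)).float(),
           "hypertension": torch.randint(0, 2, (8,)).float()}
embedding, preds = model(ppg)
loss = multitask_loss(preds, targets)
loss.backward()
```

Downstream, only `embedding` is needed: it is the learned representation that, together with metadata, feeds the survival model.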

The learned embeddings, together with associated metadata, are then used as features for a survival model that predicts the 10-year incidence of major adverse cardiac events.

The survival model is a Cox proportional hazards model, which is often used to study long-term outcomes when individuals may be lost to follow-up, and is also common in estimating disease risk.
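In a Cox model, the hazard for an individual with features x is h(t | x) = h_0(t) exp(βᵀx), and β is fitted by maximizing the partial likelihood, which compares each observed event only against the individuals still at risk at that time. Censored individuals (lost to follow-up) still count in the risk sets, but not as events. A minimal PyTorch sketch of the negative log partial likelihood, assuming no tied event times; the feature sizes and synthetic data are hypothetical.

```python
import torch
import torch.nn as nn


def cox_neg_log_partial_likelihood(risk, time, event):
    """Negative log Cox partial likelihood, averaged over observed events.

    risk:  (N,) linear predictor beta^T x
    time:  (N,) follow-up time
    event: (N,) 1.0 if the event was observed, 0.0 if censored
    Assumes no tied event times.
    """
    order = torch.argsort(time, descending=True)
    risk, event = risk[order], event[order]
    # Sorted by descending time, the risk set of subject i is every subject at index <= i.
    log_risk_set = torch.logcumsumexp(risk, dim=0)
    return -((risk - log_risk_set) * event).sum() / event.sum().clamp(min=1.0)


torch.manual_seed(0)
n, embed_dim, meta_dim = 32, 64, 3
# Features = PPG embedding concatenated with metadata (random stand-ins here).
features = torch.cat([torch.randn(n, embed_dim), torch.randn(n, meta_dim)], dim=1)
time = torch.rand(n) * 10                     # years of follow-up
event = (torch.rand(n) < 0.3).float()         # some observed events, the rest censored

cox = nn.Linear(embed_dim + meta_dim, 1, bias=False)   # a bias would cancel in the partial likelihood
opt = torch.optim.Adam(cox.parameters(), lr=1e-2)
for _ in range(50):
    opt.zero_grad()
    loss = cox_neg_log_partial_likelihood(cox(features).squeeze(-1), time, event)
    loss.backward()
    opt.step()
```

The baseline hazard h_0(t) never has to be estimated to fit β, which is one reason the model handles censored follow-up data so conveniently.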

The approach therefore centers on learning an embedding representation of the signal, which is eventually fed into a Cox proportional hazards model that estimates the user’s survival over time. The embeddings are trained to capture patterns in PPG signals that correlate with cardiovascular health issues, which can help identify irregularities that might indicate conditions such as atrial fibrillation, hypertension, and other heart-related problems.

One thing I am personally very excited about is the Apple Watch and other watches with sensors like PPG that can detect predictive irregularities in these signals. Such devices could improve preventive care, either by keeping diseases from developing as far as they otherwise would, or by providing early signals that trigger behavior change in the people wearing them.

Libraries

sgrep is a command-line tool that performs semantic searches on text input using word embeddings. It's designed to find semantically similar matches to the query, going beyond simple string matching. The experience is designed to be similar to grep.
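The core idea of embedding-based search can be shown with a toy: embed the query and each line, and keep lines whose cosine similarity to the query passes a threshold. This is not sgrep's implementation; the 3-dimensional vectors, the averaging of word vectors, and the threshold are all hypothetical choices for illustration.

```python
import math
import re

# Hypothetical 3-d word vectors; a real tool would load pretrained embeddings.
EMBEDDINGS = {
    "car": [0.9, 0.1, 0.0],
    "automobile": [0.85, 0.15, 0.05],
    "vehicle": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.95],
    "fruit": [0.05, 0.2, 0.9],
}


def embed(text):
    """Average the vectors of known words; None if no word is known."""
    vectors = [EMBEDDINGS[w] for w in re.findall(r"[a-z]+", text.lower()) if w in EMBEDDINGS]
    if not vectors:
        return None
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def semantic_grep(query, lines, threshold=0.8):
    """Yield lines whose embedding is close to the query's, grep-style."""
    q = embed(query)
    if q is None:
        return
    for line in lines:
        v = embed(line)
        if v is not None and cosine(q, v) >= threshold:
            yield line


lines = ["I bought a new automobile", "She ate a banana", "nothing relevant here"]
print(list(semantic_grep("car", lines)))   # ['I bought a new automobile']
```

Plain `grep car` would match none of these lines; the embedding comparison finds the synonym.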

vLLM is a fast and easy-to-use library for LLM inference and serving.

vLLM is fast with:

State-of-the-art serving throughput

Efficient management of attention key and value memory with PagedAttention

Continuous batching of incoming requests

Fast model execution with CUDA/HIP graph

Quantization: GPTQ, AWQ, SqueezeLLM, FP8 KV Cache

Optimized CUDA kernels

Performance benchmark: The project includes a performance benchmark that compares the performance of vLLM against other LLM serving engines (TensorRT-LLM, text-generation-inference and lmdeploy).
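PagedAttention manages the attention key/value cache somewhat like virtual memory: each sequence's keys and values live in fixed-size blocks that need not be contiguous, and a per-sequence block table maps logical token positions to physical blocks, so memory is allocated on demand and returned when a request finishes. A toy, pure-Python sketch of that bookkeeping; `BlockAllocator` is hypothetical, not vLLM's API, and it tracks only slot indices rather than actual tensors.

```python
class BlockAllocator:
    """Toy paged KV-cache bookkeeping: logical token positions -> physical block slots."""

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables: dict[int, list[int]] = {}   # seq_id -> physical block ids
        self.lengths: dict[int, int] = {}              # seq_id -> tokens stored so far

    def append_token(self, seq_id: int) -> tuple[int, int]:
        """Reserve a slot for the next token's key/value; return (block_id, offset)."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:              # last block is full (or none yet)
            if not self.free_blocks:
                raise MemoryError("out of KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1
        return table[length // self.block_size], length % self.block_size

    def free_sequence(self, seq_id: int) -> None:
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


alloc = BlockAllocator(num_blocks=8, block_size=4)
for _ in range(5):
    alloc.append_token(seq_id=0)     # 5 tokens -> 2 blocks (4 + 1)
for _ in range(3):
    alloc.append_token(seq_id=1)     # 3 tokens -> 1 block
print(alloc.block_tables, len(alloc.free_blocks))   # {0: [7, 6], 1: [5]} 5
alloc.free_sequence(0)
print(len(alloc.free_blocks))                       # 7
```

Because waste is limited to the unfilled tail of each sequence's last block, many more sequences fit in the same memory, which is what enables continuous batching at high throughput.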

vLLM is flexible and easy to use with:

Seamless integration with popular Hugging Face models

High-throughput serving with various decoding algorithms, including parallel sampling, beam search, and more

Tensor parallelism and pipeline parallelism support for distributed inference

Streaming outputs

OpenAI-compatible API server

Supports NVIDIA GPUs, AMD CPUs and GPUs, Intel CPUs and GPUs, and PowerPC CPUs

weights2weights is a manifold of weights: a space spanned by the weights of a large collection of customized diffusion models. The authors populate this space by creating a dataset of over 60,000 models, each fine-tuned to insert a different person’s visual identity. Next, they model the underlying manifold of these weights as a subspace, which they term weights2weights. They demonstrate three immediate applications of this space: sampling, editing, and inversion. First, as each point in the space corresponds to an identity, sampling a set of weights from it results in a model encoding a novel identity. Next, they find linear directions in this space corresponding to semantic edits of the identity (e.g., adding a beard). These edits persist in appearance across generated samples. Finally, they show that inverting a single image into this space reconstructs a realistic identity, even if the input image is out of distribution (e.g., a painting). Their results indicate that the weight space of fine-tuned diffusion models behaves as an interpretable latent space of identities.

It has a great project page as well.
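What sampling and editing mean in a linear weight subspace can be sketched in a few lines of numpy. The assumptions are heavy: PCA stands in for the subspace model, random vectors stand in for real fine-tuned diffusion weights, the labels are made up, and a difference of class means stands in for however the paper finds its edit directions. Inversion, which needs the diffusion model itself, is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in data: 200 "fine-tuned models", each flattened to a 1,000-d weight vector.
weights = rng.normal(size=(200, 1000))
has_attribute = rng.integers(0, 2, size=200).astype(bool)   # hypothetical labels, e.g. "beard"


def fit_weight_subspace(W: np.ndarray, k: int):
    """PCA: mean, top-k orthonormal directions (k, D), and spread along each direction."""
    mean = W.mean(axis=0)
    _, s, vt = np.linalg.svd(W - mean, full_matrices=False)
    return mean, vt[:k], s[:k] / np.sqrt(len(W) - 1)


def to_coeffs(w, mean, basis):
    return (w - mean) @ basis.T          # project into the subspace


def from_coeffs(c, mean, basis):
    return mean + c @ basis              # map coefficients back to full weights


mean, basis, std = fit_weight_subspace(weights, k=50)

# Sampling: draw coefficients and decode them into a new set of weights ("a novel identity").
new_model = from_coeffs(rng.normal(size=50) * std, mean, basis)

# Editing: move along a linear direction in coefficient space.
coeffs = to_coeffs(weights, mean, basis)
direction = coeffs[has_attribute].mean(axis=0) - coeffs[~has_attribute].mean(axis=0)
edited_model = from_coeffs(to_coeffs(new_model, mean, basis) + 2.0 * direction, mean, basis)
```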

Evidence is an open-source, code-based alternative to drag-and-drop business intelligence tools.

Evidence generates a website from markdown files:

SQL statements inside markdown files run queries against your data sources

Charts and components are rendered using these query results

Templated pages generate many pages from a single markdown template

Loops and If / Else statements allow control of what is displayed to users

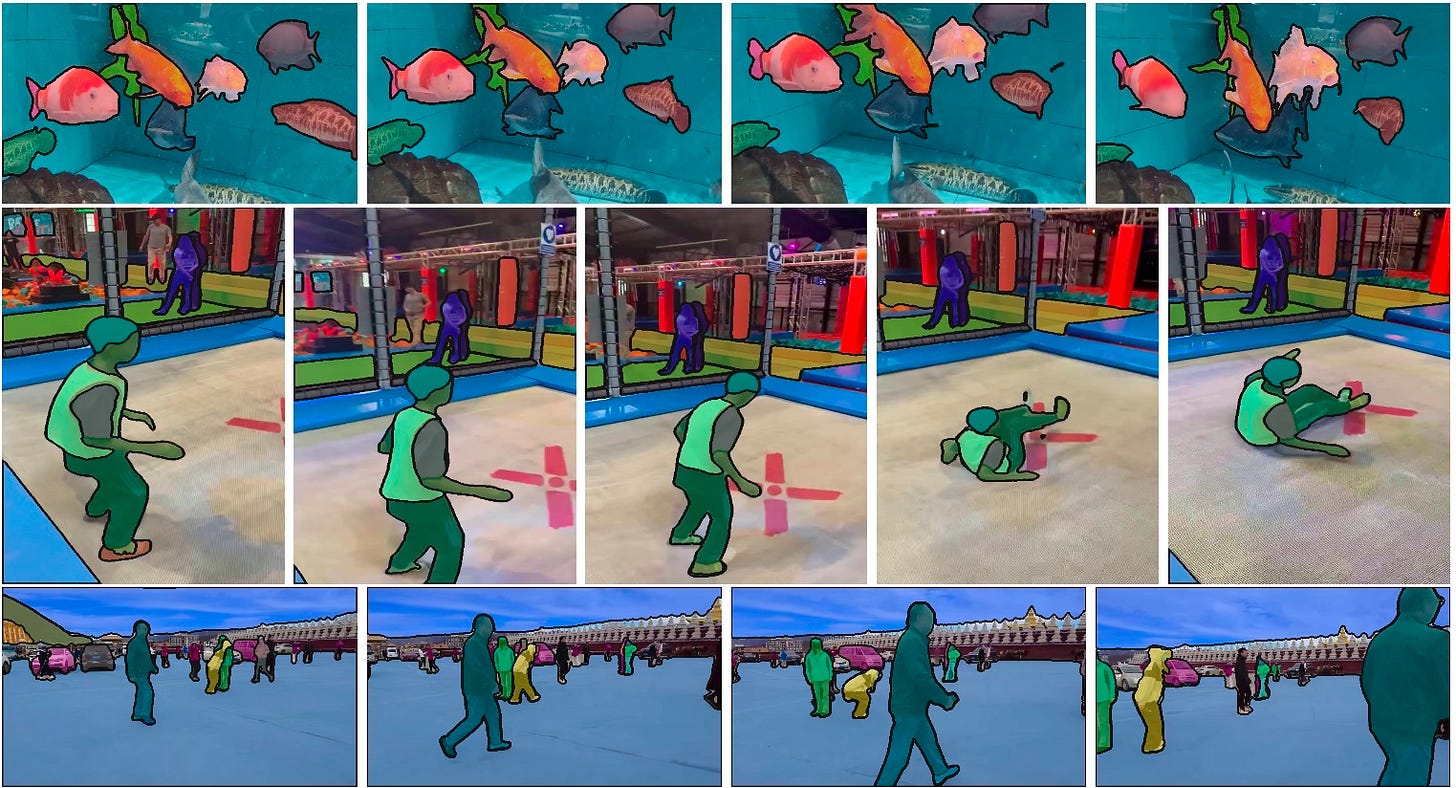

Segment Anything Model 2 (SAM 2) is a foundation model towards solving promptable visual segmentation in images and videos. It extends SAM to video by treating an image as a video with a single frame. The model design is a simple transformer architecture with streaming memory for real-time video processing. The authors built a model-in-the-loop data engine, which improves model and data via user interaction, to collect the SA-V dataset, the largest video segmentation dataset to date. SAM 2, trained on this data, provides strong performance across a wide range of tasks and visual domains.
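The streaming-memory idea can be illustrated with a toy: each incoming frame's features attend to a bounded bank of memories from recent frames, and a single image is simply the case where the bank is still empty. `StreamingMemory`, the bank size, and the use of plain `torch.nn.functional.scaled_dot_product_attention` are assumptions for illustration; SAM 2's actual memory encoder and memory attention are more involved.

```python
from collections import deque

import torch
import torch.nn.functional as F


class StreamingMemory:
    """Toy bounded memory bank: current-frame tokens cross-attend to recent frames."""

    def __init__(self, max_frames: int = 6):
        self.bank = deque(maxlen=max_frames)     # oldest frame memories drop out automatically

    def condition(self, frame_tokens: torch.Tensor) -> torch.Tensor:
        """frame_tokens: (num_tokens, dim) -> memory-conditioned tokens of the same shape."""
        if not self.bank:                         # first frame, or a single image
            return frame_tokens
        memory = torch.cat(list(self.bank), dim=0).unsqueeze(0)    # (1, mem_tokens, dim)
        attended = F.scaled_dot_product_attention(frame_tokens.unsqueeze(0), memory, memory)
        return frame_tokens + attended.squeeze(0)                 # residual update

    def update(self, tokens: torch.Tensor) -> None:
        self.bank.append(tokens.detach())


torch.manual_seed(0)
memory = StreamingMemory(max_frames=6)
frames = [torch.randn(16, 32) for _ in range(10)]   # 10 frames, 16 tokens of dim 32 each
outputs = []
for tokens in frames:                                # frames arrive one at a time
    conditioned = memory.condition(tokens)
    memory.update(conditioned)
    outputs.append(conditioned)
```

The fixed-size bank keeps per-frame cost constant no matter how long the video runs, which is what makes streaming, real-time processing possible.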

Oscar is a project aiming to improve open-source software development by creating automated help, or “agents,” for open-source maintenance. Its authors believe there are many opportunities to reduce the amount of toil involved in maintaining open-source projects both large and small.

The ability of large language models (LLMs) to do semantic analysis of natural language (such as issue reports or maintainer instructions) and to convert between natural language instructions and program code creates new opportunities for agents to interact more smoothly with people. LLMs will likely end up being only a small (but critical!) part of the picture; the bulk of an agent's actions will be executing standard, deterministic code.

Oscar differs from many development-focused uses of LLMs by not trying to augment or displace the code writing process at all. After all, writing code is the fun part of writing software. Instead, the idea is to focus on the not-fun parts, like processing incoming issues, matching questions to existing documentation, and so on.

Sensory is illustrative code intended to accompany an upcoming research publication on models trained on mobile-sensor data to evaluate CVD risk.

Capture: Provides an example of how to capture camera data on Android devices that would be appropriate for such models.

Upload: Provides example functionality for uploading processed images and image sequences to blob storage that can be owned by a third party (3P), which is a common need for data collection programs. This code is provided to underscore the need for robust uploading capabilities in these use cases. It is incomplete for production use, which would also need error handling, authentication, etc.

FHIR Questionnaires: Integrates with FHIR-SDK to generate forms (participant registration and data capture) using FHIR-SDC, which generates FHIR resources (Patient, DocumentReference). Note: a FHIR server needs to be configured to sync the FHIR resources.
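For readers unfamiliar with FHIR, the generated resources are plain JSON documents. A minimal sketch of a `Patient` and a `DocumentReference` pointing at an uploaded capture, built as Python dicts; the identifiers, URL, and content type are hypothetical, and only a few fields of the FHIR R4 resources are shown.

```python
import json

# Hypothetical identifiers and URL, for illustration only.
patient = {
    "resourceType": "Patient",
    "id": "participant-001",
    "gender": "female",
    "birthDate": "1980-04-12",
}

document = {
    "resourceType": "DocumentReference",
    "status": "current",
    "subject": {"reference": f"Patient/{patient['id']}"},   # links the capture to the participant
    "content": [{
        "attachment": {
            "contentType": "video/mp4",
            "url": "https://storage.example.com/uploads/participant-001/capture-01.mp4",
        }
    }],
}

print(json.dumps(document, indent=2))
```

The `subject` reference is what ties an uploaded capture in blob storage back to a registered participant once both resources are synced to the FHIR server.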

NeuralGCM is a Python library for building hybrid ML/physics atmospheric models for weather and climate simulation.

DoRA is an implementation of "DoRA: Weight-Decomposed Low-Rank Adaptation" (Liu et al., 2024) https://arxiv.org/pdf/2402.09353.pdf
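DoRA splits a pretrained weight into a magnitude and a direction, applies a LoRA update BA to the direction only, and renormalizes: W' = m · (W₀ + BA) / ‖W₀ + BA‖. A minimal PyTorch sketch of a DoRA-wrapped linear layer; the class name, rank, and initialization scale are assumptions, and the norm is taken per output feature (each row of `nn.Linear.weight`), so check the reference implementation for its exact convention.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DoRALinear(nn.Module):
    """Wrap a pretrained nn.Linear with a weight-decomposed low-rank update (sketch)."""

    def __init__(self, base: nn.Linear, rank: int = 4):
        super().__init__()
        out_f, in_f = base.weight.shape
        # Frozen pretrained weight W0 (and bias), stored as buffers so they are not trained.
        self.register_buffer("weight", base.weight.detach().clone())
        self.register_buffer("bias", None if base.bias is None else base.bias.detach().clone())
        # LoRA factors: B starts at zero, so the wrapped layer initially equals the base layer.
        self.lora_A = nn.Parameter(torch.randn(rank, in_f) * 0.01)
        self.lora_B = nn.Parameter(torch.zeros(out_f, rank))
        # Trainable magnitude m, initialized to the norm of each row of W0.
        self.magnitude = nn.Parameter(self.weight.norm(p=2, dim=1, keepdim=True))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        direction = self.weight + self.lora_B @ self.lora_A          # W0 + BA
        direction = direction / direction.norm(p=2, dim=1, keepdim=True)
        return F.linear(x, self.magnitude * direction, self.bias)


torch.manual_seed(0)
base = nn.Linear(16, 8)
dora = DoRALinear(base, rank=4)
x = torch.randn(3, 16)
trainable = sum(p.numel() for p in dora.parameters() if p.requires_grad)
print(trainable)   # 4*16 (A) + 8*4 (B) + 8 (m) = 104
```

Only the low-rank factors and the magnitude vector are trained, so the trainable parameter count stays close to plain LoRA while magnitude and direction can be adjusted separately.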

Categorical systems theory (CST) is a doubly-categorical framework for approaching and developing structural theories of systems and behavior of any kind. These notes are meant to be (1) a basic reference for CST and (2) a source of examples and working developments. They're under active development, so quite far from camera-ready, and possibly never complete.