Pinterest's Text to SQL system through LLMs!

Netflix has built a Percolator system to power reverse search!

Generative Recommenders

We have recently open-sourced our next generation recommender system: Generative Recommender, check it out, give stars and fork if you are interested in contributing!

The paper that accompanies code is also here. If you have questions/comments, please send it to my way as well!

Now, back to the original programming:

Articles

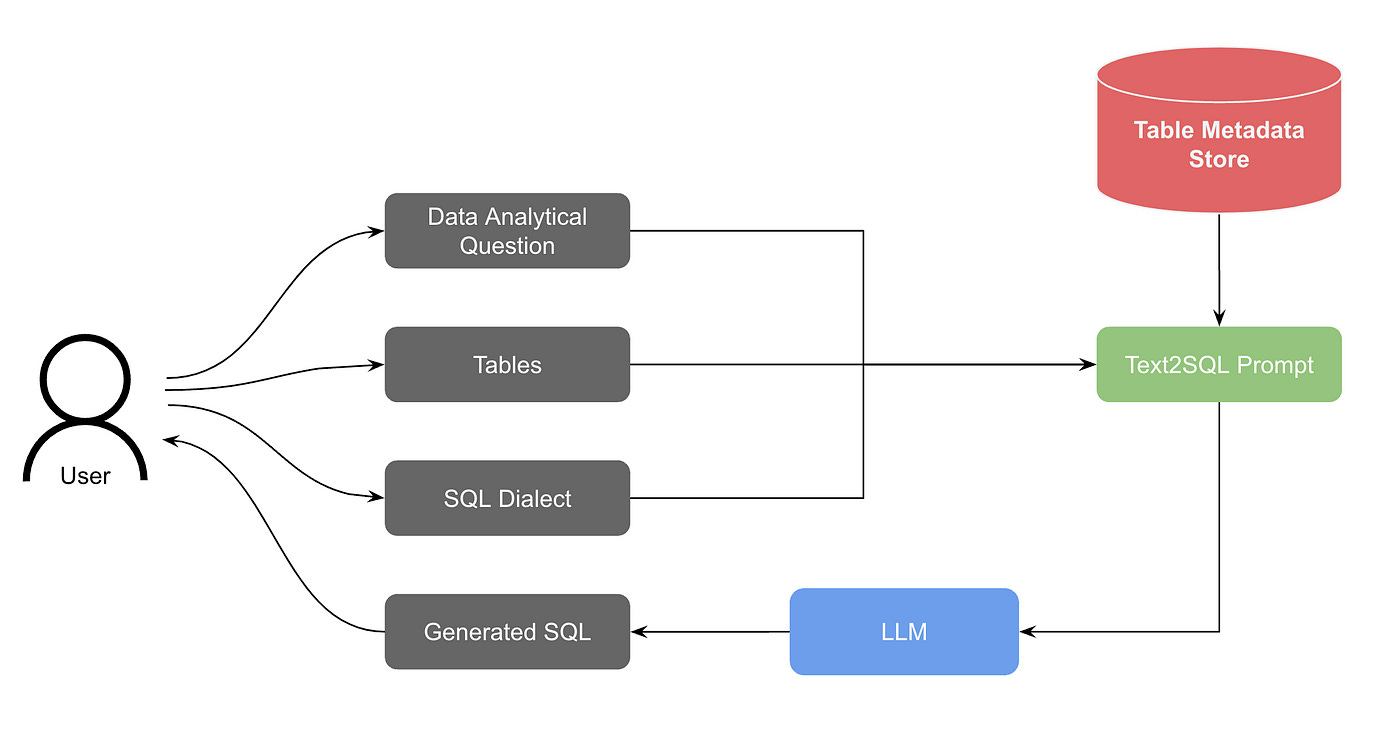

Pinterest wrote a blog post on generating SQL queries from text. A core part of this process involves writing SQL queries to analyze data and solve analytical problems. However, the Pinterest team identified that translating analytical questions into effective SQL code could be a significant challenge for many of their data users.

The goal was to create a solution that would allow data users to simply input an analytical question in natural language, and have the system automatically generate the corresponding SQL code and execute this SQL code under the hood for certain use cases. The initial version of the Text-to-SQL solution relied on an LLM as the core component. Users would input their analytical question and select the relevant tables they wanted to query. This information, along with the specific SQL dialect used at Pinterest, would then be compiled into a prompt and fed into the LLM. The LLM would then generate the SQL code to address the user's question.

The overall system consists of the following components:

Natural Language Processing (NLP): The system would use NLP techniques to parse the user's analytical question and extract the relevant information, such as the desired metrics, filters, and join conditions.

Table Selection: The user would be presented with a list of available tables and asked to select the ones relevant to their query. This information would be used to contextualize the LLM's understanding of the data.

SQL Dialect Mapping: The system would need to understand the specific SQL dialect used at Pinterest and generate code that adhered to the company's standards and best practices.

LLM Integration: The extracted question, table information, and SQL dialect would be compiled into a prompt and fed into the LLM, which would then generate the SQL code.

However, one key challenge was ensuring the quality and accuracy of the generated SQL code. LLMs, while powerful, can sometimes produce SQL that is syntactically correct but logically flawed or inefficient.

Pinterest team implemented a table standardization and summarization process to accommodate the weakness of LLM. They focused on indexing only the top-tier, high-quality tables in their data warehouse, ensuring that the LLM had access to the most reliable and relevant data sources. Further, they generated detailed summarizations of the table schemas and sample queries for each table, providing the LLM with more context about the data it was working with.

Table standardization mainly consisted of the following steps:

Catalog Curation: They carefully reviewed the company's data catalog and identified the most important, high-quality tables that were frequently used by data users.

Schema Indexing: They indexed the schema information for these curated tables, including column names, data types, and descriptions.

Sample Query Generation: For each table, they generated a set of sample SQL queries that demonstrated common use cases and data patterns.

Table summarization then can be done through both scheme and query summarization:

Schema Summarization: They created concise, human-readable summaries of the table schemas, highlighting the key columns, relationships, and use cases.

Query Summarization: They summarized the sample queries, explaining the analytical intent behind each query and the insights it was designed to uncover.

These enhancements had a significant impact on the quality of the SQL code generated by the Text-to-SQL feature. The LLM was now able to better understand the data structures and relationships, leading to more accurate and efficient SQL queries.

Netflix wrote about their search capabilities within their federated graph architecture. The federated graph architecture poses challenges for traditional search approaches, as querying across the various connected services can have significant traffic implications. They have developed a custom Graph Search DSL translated into Elasticsearch queries, rather than relying on Elasticsearch's query language directly to address the limitation.

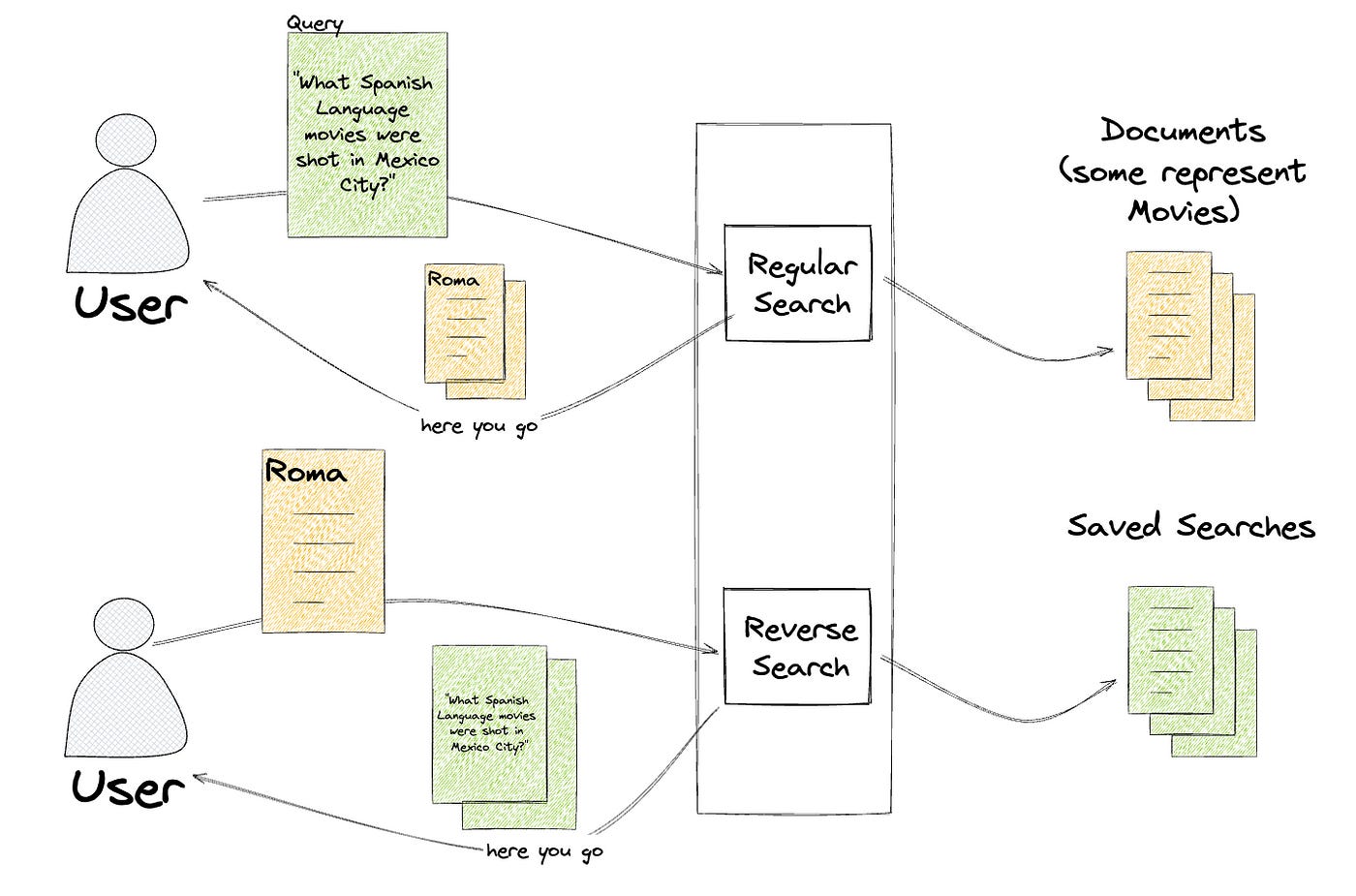

Reverse Search Functionality

The novelty in the article introduction of "reverse search" functionality within the Graph Search system. Reverse search inverts the standard querying pattern - instead of finding documents that match a query, it finds queries that match a document .This reverse search capability is powered by Elasticsearch's percolator capability and fields, which allow indexing of queries themselves. When a new document is added to the system, it can be "percolated" against the indexed queries to determine which saved searches it matches. This allows user to build “notifications” proactively where instead of storing the docs and making them searchable, it allows the searched queries to find the matching docs right after the doc is indexed in the index.

An example use case of this capability is that Netflix Post Production Coordinator, Tiffany, who subscribes to various notifications and updates related to the movies she oversees. Rather than subscribing to specific movies, Tiffany subscribes to queries that return a dynamic subset of movies based on certain criteria, such as "movies shooting in Mexico City which don't have a key role assigned" or "movies that are at risk of not being ready by their launch date." Percolator or “reverse search” capability comes handy to power these types of queries in the system.

Implementing Reverse Search

One can approach building a percolator system in the following way:

Saved Searches: The system stores "saved searches" as a first-class entity, with a schema that includes the search filter (expressed in the Graph Search DSL) and the index it applies to.

Percolator Fields: When a saved search is created, the filter is converted to an Elasticsearch query and indexed in a percolator field. Percolator fields allow the system to efficiently determine which saved searches match a given document.

Reverse Search Resolver: They added a new resolver to the Domain Graph Service (DGS) for Graph Search, which implements the reverse search functionality. This resolver takes a document as input and returns the set of saved searches that match that document.

Graph Search DSL: Netflix developed a custom Graph Search DSL to express search queries, rather than using Elasticsearch's query language directly. This DSL is then translated into Elasticsearch queries, which are indexed in the percolator fields. This is probably a good Intermediate Representation(IR) to make the system agnostic to the underlying SW that is being used to power for search capability. In future, if they decide to use another technology like Solar or some other search system, they can write the DSL to other query capability.

Benefits of Reverse Search

Efficient Notifications: As mentioned, by using reverse search to determine which saved searches match a given document, the system can efficiently notify only the relevant subscribers when a document changes, rather than querying all saved searches and notifying everyone.

Reduced Load on Federated Graph: The traditional approach of repeatedly querying for the results of every saved search would have significant traffic implications for the federated graph architecture. Reverse search allows the system to re-query based on change events with "laser precision," reducing the load on the broader ecosystem.

Externalized Criteria Matching: They provide an example of the Movie Matching service, which uses reverse search to classify movies based on complex, nuanced criteria. By storing the matching criteria as reverse searches, the service can efficiently determine which criteria a movie matches against.

Broader Applicability: Since every Graph Search index is now reverse-search capable, the functionality can be leveraged by various teams and applications across Netflix, beyond the initial movie use case.

Libraries

Penzai is a JAX library for writing models as legible, functional pytree data structures, along with tools for visualizing, modifying, and analyzing them. Penzai focuses on making it easy to do stuff with models after they have been trained, making it a great choice for research involving reverse-engineering or ablating model components, inspecting and probing internal activations, performing model surgery, debugging architectures, and more. (But if you just want to build and train a model, you can do that too!)

LibMTL is an open-source library built on PyTorch for Multi-Task Learning (MTL). See the latest documentation for detailed introductions and API instructions.

Pixie is an open-source observability tool for Kubernetes applications. Use Pixie to view the high-level state of your cluster (service maps, cluster resources, application traffic) and also drill down into more detailed views (pod state, flame graphs, individual full-body application requests).

Why Pixie?

Three features enable Pixie's magical developer experience:

Auto-telemetry: Pixie uses eBPF to automatically collect telemetry data such as full-body requests, resource and network metrics, application profiles, and more. See the full list of data sources here.

In-Cluster Edge Compute: Pixie collects, stores and queries all telemetry data locally in the cluster. Pixie uses less than 5% of cluster CPU and in most cases less than 2%.

Scriptability: PxL, Pixie’s flexible Pythonic query language, can be used across Pixie’s UI, CLI, and client APIs.

Volcano is a batch system built on Kubernetes. It provides a suite of mechanisms that are commonly required by many classes of batch & elastic workload including: machine learning/deep learning, bioinformatics/genomics and other "big data" applications. These types of applications typically run on generalized domain frameworks like TensorFlow, Spark, Ray, PyTorch, MPI, etc, which Volcano integrates with.

Volcano builds upon a decade and a half of experience running a wide variety of high performance workloads at scale using several systems and platforms, combined with best-of-breed ideas and practices from the open source community.

Pyro is a flexible, scalable deep probabilistic programming library built on PyTorch. Notably, it was designed with these principles in mind:

Universal: Pyro is a universal PPL - it can represent any computable probability distribution.

Scalable: Pyro scales to large data sets with little overhead compared to hand-written code.

Minimal: Pyro is agile and maintainable. It is implemented with a small core of powerful, composable abstractions.

Flexible: Pyro aims for automation when you want it, control when you need it. This is accomplished through high-level abstractions to express generative and inference models, while allowing experts easy-access to customize inference.