OpenAI announces new fine-tuning API!

Code Llama, How LinkedIn uses sparse embeddings to build its home feed

Articles

OpenAI announced fine-tuning for GPT-3.5 Turbo in a blog post. Below, I want to give an overview of what fine-tuning is and how OpenAI offers this capability on top of its base models:

What is fine-tuning?

Fine-tuning is a process of adjusting the weights of a neural network to improve its performance on a specific task. In the case of GPT-3.5 Turbo, fine-tuning is done by feeding the model a dataset of text prompts and corresponding responses. The model then learns to associate the prompts with the responses, and this helps it to generate more accurate and relevant responses when given new prompts.
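To make the prompt/response pairing concrete, here is a minimal sketch of how such a dataset is commonly laid out for chat-model fine-tuning: one JSON object per line, each holding a short conversation. The `messages` / `role` / `content` layout follows OpenAI's chat format; the example pairs, the system prompt, and the helper name `to_jsonl` are made up for illustration.

```python
import json

# Hypothetical prompt/response pairs; a real dataset would come from your own data.
PAIRS = [
    ("What is your refund window?", "You can request a refund within 30 days of purchase."),
    ("Do you ship overseas?", "Yes, we ship to most countries; delivery takes 7-14 days."),
]

SYSTEM_PROMPT = "You are a concise support assistant."  # illustrative only


def to_jsonl(pairs, system_prompt):
    """Serialize (prompt, response) pairs as JSON Lines, one chat example per line."""
    lines = []
    for prompt, response in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": response},
            ]
        }
        lines.append(json.dumps(example))
    return "\n".join(lines) + "\n"


jsonl_text = to_jsonl(PAIRS, SYSTEM_PROMPT)
print(jsonl_text)
```

Each line is an independent training example, so the file can be shuffled, split into training and validation sets, or extended without touching the other lines.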

What data is needed for fine-tuning?

The amount of data needed for fine-tuning depends on the specific task. For simple tasks, such as answering questions, a small dataset of a few hundred prompts may be sufficient. For more complex tasks, such as generating creative text formats, a larger dataset of thousands or even millions of prompts may be required.

How is fine-tuning done?

Fine-tuning GPT-3.5 Turbo is done through the OpenAI API and runs on OpenAI's infrastructure, not on a local machine or on a third-party cloud such as Google Cloud Platform or Amazon Web Services. From your own machine, you install the OpenAI client library, upload your training dataset through the API, and start a fine-tuning job. When the job completes, the fine-tuned model is available through the same API.

What are the benefits of fine-tuning?

Fine-tuning can improve the performance of GPT-3.5 Turbo on a variety of tasks. For example, fine-tuning can help the model to:

Generate more accurate and relevant responses

Learn to follow instructions

Avoid generating offensive or harmful content

Customize its output to a specific audience

Is fine-tuning difficult?

Fine-tuning can be a complex process, but it is not impossible. There are a number of resources available to help developers fine-tune GPT-3.5 Turbo, including documentation, tutorials, and pre-trained models.

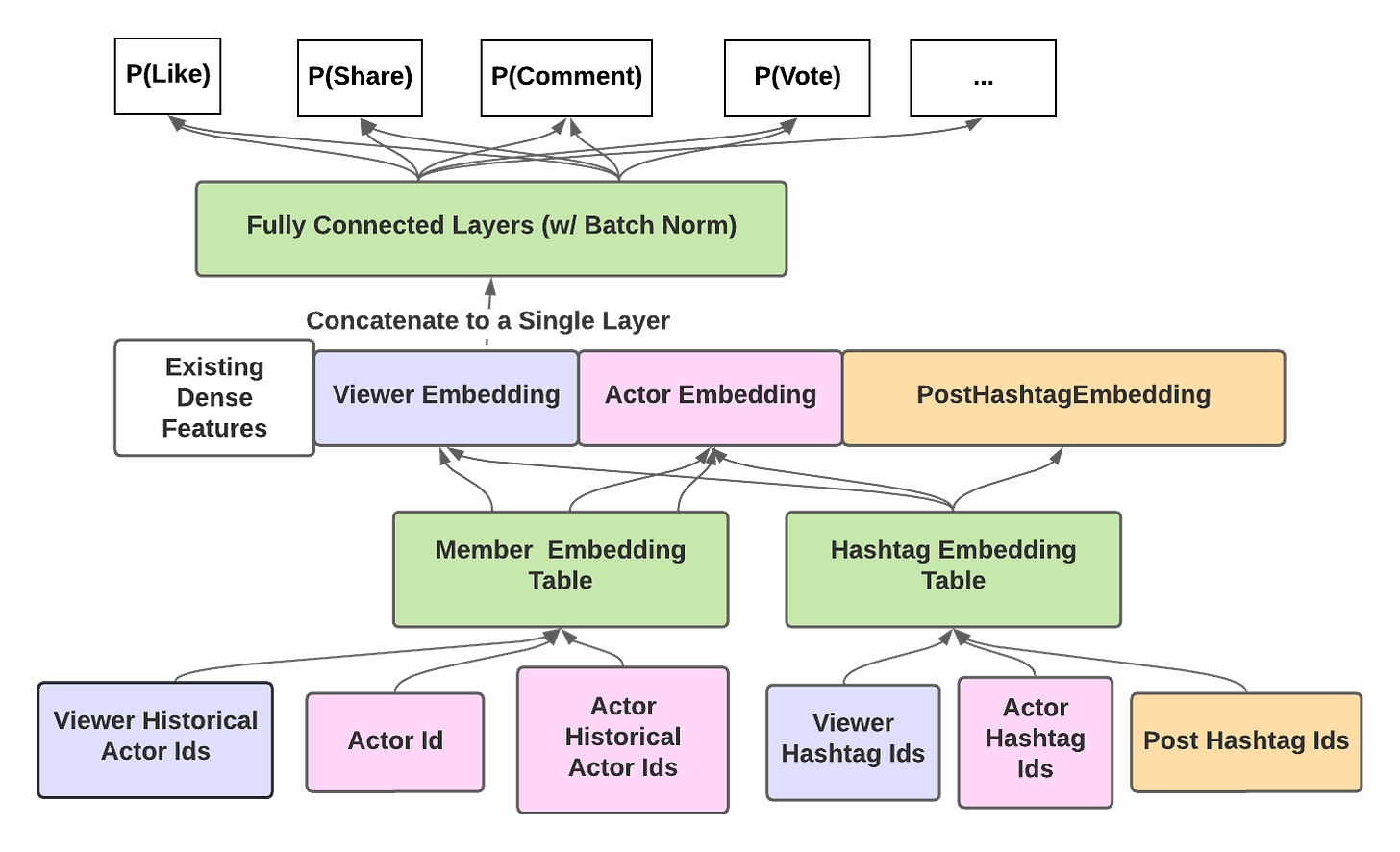

LinkedIn wrote about how it is using large corpus sparse ID embeddings (LARs) to improve the relevance of its homepage feed. LARs are a type of embedding that represents high-dimensional categorical data in a lower-dimensional continuous space. This makes it easier to learn the relationships between different items of data, such as posts, articles, and people.

LinkedIn uses LARs to represent the following features in its homepage feed:

User IDs: This allows LinkedIn to personalize the feed for each user.

Post IDs: This allows LinkedIn to group together posts that are similar to each other.

Hashtag IDs: This allows LinkedIn to surface posts that are related to a particular topic.

Company IDs: This allows LinkedIn to show users posts from companies they are interested in.
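As a rough illustration of what "representing an ID in a continuous space" means, the sketch below keeps one lookup table per ID feature and turns a feed event into a single dense vector. This is not LinkedIn's implementation: the hashing of IDs into a fixed number of buckets, the table size, the dimension, and all names (`EmbeddingTable`, `featurize`) are assumptions made for the example, and the vectors are random rather than trained.

```python
import random
import zlib

EMBEDDING_DIM = 4      # real systems use far larger dimensions
NUM_BUCKETS = 1000     # hypothetical table size per feature
FEATURES = ["user", "post", "hashtag", "company"]


class EmbeddingTable:
    """Maps sparse categorical IDs to dense vectors via a hashed lookup table."""

    def __init__(self, name, num_buckets=NUM_BUCKETS, dim=EMBEDDING_DIM, seed=0):
        self.name = name
        self.num_buckets = num_buckets
        rng = random.Random(seed)
        # Randomly initialised rows; training would update them by gradient descent.
        self.rows = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_buckets)]

    def bucket(self, raw_id):
        # crc32 is stable across processes, unlike the built-in hash() for strings.
        return zlib.crc32(f"{self.name}:{raw_id}".encode("utf-8")) % self.num_buckets

    def lookup(self, raw_id):
        return self.rows[self.bucket(raw_id)]


tables = {name: EmbeddingTable(name, seed=i) for i, name in enumerate(FEATURES)}


def featurize(event):
    """Concatenate the embedding of each ID feature into one dense input vector."""
    vector = []
    for name in FEATURES:
        vector.extend(tables[name].lookup(event[name]))
    return vector


event = {"user": "u_123", "post": "p_987", "hashtag": "#mlops", "company": "c_42"}
dense = featurize(event)
print(len(dense))  # 16: four features times four dimensions
```

The dense vector is what a downstream ranking model would consume; in a trained system, IDs that behave similarly end up with nearby vectors.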

Their approach to training LARs is as follows:

The first step is to train a LAR model on a large corpus of data.

The LAR model learns the relationships between all of the features in the data.

Once the LAR model is trained, it can be used to represent any new data in the same lower-dimensional space.

This allows the LAR model to learn the relationships between the new data and the data it was trained on.

LARs are a powerful tool that can be used to improve the performance of many different machine learning models. They are particularly well suited to tasks that involve learning relationships in high-dimensional categorical data:

They can be used to represent high-dimensional categorical data in a lower-dimensional continuous space.

They can learn the relationships between different features in a large corpus of data.

They can be used to improve the performance of many different machine learning models.

Together AI released Llama-2-7B-32K-Instruct, a long-range LLM that can be used for a variety of tasks, including question answering, summarization, and code generation. The model is a 32K context model, which means that it can process up to 32,000 tokens of text at a time. This makes it well-suited for tasks that require understanding long-range dependencies, such as question answering and summarization.

It is also fine-tuned using Together AI's Instruct API, which allows users to provide instructions to the model on how to perform specific tasks. This makes it easy to customize the model for different downstream use cases.

The Allen Institute for AI wrote a blog post open-sourcing a large dataset called Dolma for LLM training. Dolma is a dataset of 3 trillion tokens that can be used to train LLMs. Dolma is the largest open dataset to date, and it is designed to be diverse and representative of the world's languages.

The motivation for this dataset is that LLMs are trained on massive amounts of text data, and the quality and diversity of the data can have a significant impact on the performance of the LLM. Dolma is designed to address this need by providing a large, diverse, and representative dataset for training LLMs.

Dolma is composed of text from a variety of sources, including web content, academic publications, code, books, and encyclopedic materials. The text in Dolma is also translated into multiple languages, which makes it a valuable resource for training LLMs that can understand and generate text in multiple languages.

Libraries

Unlimiformer is a method for augmenting pretrained encoder-decoder models with retrieval-based attention, without changing the mathematical definition of attention. This allows the use of unlimited length inputs with any pretrained encoder-decoder!
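The idea of retrieval-based attention can be sketched in a few lines: instead of attending over every encoded input token, each query retrieves only its top-k highest-scoring keys and runs ordinary softmax attention over those. The code below is a toy illustration under that assumption, not Unlimiformer's code; it uses a brute-force search where a real system would use a nearest-neighbor index, and the function names are made up.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def retrieval_attention(query, keys, values, k):
    """Attend only over the k keys with the highest dot-product score for this query."""
    scores = [dot(query, key) for key in keys]
    # Brute-force top-k; a real system would query a nearest-neighbor index here.
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    dim = len(values[0])
    output = [0.0] * dim
    for w, i in zip(weights, top):
        for d in range(dim):
            output[d] += w * values[i][d]
    return output, top


keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [9.0, 9.0]]
out, used = retrieval_attention([1.0, 0.0], keys, values, k=2)
print(used)  # [0, 2]: the two keys most aligned with the query
```

Because softmax weight concentrates on the highest-scoring keys, dropping the low-scoring ones changes the result very little, while the cost per query depends on k rather than on the full input length.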

Guardrails is a Python package that lets a user add structure, type and quality guarantees to the outputs of large language models (LLMs). Guardrails:

does pydantic-style validation of LLM outputs, including semantic validation such as checking for bias in generated text or for bugs in generated code,

takes corrective actions (e.g. re-asking the LLM) when validation fails,

enforces structure and type guarantees (e.g. JSON).
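The validate-then-re-ask pattern in the list above can be sketched without any library. The code below is a plain-Python illustration of the pattern, not the Guardrails API: `validate`, `generate_validated`, the schema-as-dict convention, and the stand-in `fake_llm` are all hypothetical.

```python
import json


def validate(raw, schema):
    """Return (parsed, errors): parse JSON and check each field's presence and type."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"output is not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["output must be a JSON object"]
    errors = []
    for field, expected_type in schema.items():
        if field not in data:
            errors.append(f"missing field '{field}'")
        elif not isinstance(data[field], expected_type):
            errors.append(f"field '{field}' must be {expected_type.__name__}")
    return data, errors


def generate_validated(llm, prompt, schema, max_reasks=2):
    """Call the model, validate its output, and re-ask with the errors on failure."""
    current_prompt = prompt
    for _ in range(max_reasks + 1):
        raw = llm(current_prompt)
        data, errors = validate(raw, schema)
        if not errors:
            return data
        problems = "; ".join(errors)
        current_prompt = (
            f"{prompt}\nYour previous answer was invalid: {problems}. "
            "Return corrected JSON only."
        )
    raise ValueError(f"no valid output after {max_reasks} re-asks: {errors}")


# Stand-in for a real model: fails once (age is a string), then answers correctly.
_replies = iter(['{"name": "Ada", "age": "36"}', '{"name": "Ada", "age": 36}'])


def fake_llm(prompt):
    return next(_replies)


result = generate_validated(fake_llm, "Extract the person as JSON.", {"name": str, "age": int})
print(result)  # {'name': 'Ada', 'age': 36}
```

Feeding the validation errors back into the prompt is what turns a plain retry into a corrective action: the model is told what was wrong, not just asked again.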

The high computational and memory requirements of generative large language models (LLMs) make it challenging to serve them quickly and cheaply. FlexFlow Serve is an open-source compiler and distributed system for low latency, high performance LLM serving. FlexFlow Serve outperforms existing systems by 1.3-2.0x for single-node, multi-GPU inference and by 1.4-2.4x for multi-node, multi-GPU inference.

Manticore Search is an easy-to-use, fast, open-source database for search, and a good alternative to Elasticsearch. What distinguishes it from other solutions is:

It's very fast and therefore more cost-efficient than alternatives. For example, Manticore is:

182x faster than MySQL for small data (reproducible❗)

29x faster than Elasticsearch for log analytics (reproducible❗)

15x faster than Elasticsearch for small dataset (reproducible❗)

5x faster than Elasticsearch for medium-size data (reproducible❗)

4x faster than Elasticsearch for big data (reproducible❗)

LLaMA2-Accessory is an open-source toolkit for pre-training, fine-tuning and deployment of Large Language Models (LLMs) and multimodal LLMs.

TypeChat is a library that makes it easy to build natural language interfaces using types.

Building natural language interfaces has traditionally been difficult. These apps often relied on complex decision trees to determine intent and collect the required inputs to take action. Large language models (LLMs) have made this easier by enabling us to take natural language input from a user and match it to intent. This has introduced its own challenges, including the need to constrain the model's reply for safety, to structure responses from the model for further processing, and to ensure that the reply from the model is valid. Prompt engineering aims to solve these problems, but comes with a steep learning curve and increased fragility as the prompt increases in size.

TypeChat replaces prompt engineering with schema engineering.

Simply define types that represent the intents supported in your natural language application. That could be as simple as an interface for categorizing sentiment or more complex examples like types for a shopping cart or music application. For example, to add additional intents to a schema, a developer can add additional types into a discriminated union. To make schemas hierarchical, a developer can use a "meta-schema" to choose one or more sub-schemas based on user input.

After defining your types, TypeChat takes care of the rest by:

Constructing a prompt to the LLM using types.

Validating that the LLM response conforms to the schema. If validation fails, it repairs the non-conforming output through further language model interaction.

Summarizing the instance succinctly (without use of an LLM) and confirming that it aligns with user intent.
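TypeChat itself is a TypeScript library and works from TypeScript type definitions. To keep the examples in this issue in one language, here is a Python analogue of the same idea, using dataclasses as the "schema": the types are rendered into the prompt, and the reply is validated against a discriminated union keyed on a `type` field. None of the names below (`AddItem`, `build_prompt`, `parse_action`) come from TypeChat; they are assumptions for the sketch.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class AddItem:
    """Add a product to the cart."""
    name: str
    quantity: int


@dataclass
class RemoveItem:
    """Remove a product from the cart."""
    name: str


@dataclass
class Unknown:
    """The request did not match any supported intent."""
    text: str


# Supporting a new intent means adding one more class to this union.
INTENTS = {cls.__name__: cls for cls in (AddItem, RemoveItem, Unknown)}


def _type_name(t):
    return getattr(t, "__name__", str(t))


def schema_text():
    """Render the intent types as text that can be embedded in a prompt."""
    lines = []
    for name, cls in INTENTS.items():
        args = ", ".join(f'"{f.name}": {_type_name(f.type)}' for f in fields(cls))
        lines.append(f'{{"type": "{name}", {args}}}  // {cls.__doc__}')
    return "\n".join(lines)


def build_prompt(user_request):
    return (
        "Translate the request into ONE JSON object matching one of these shapes:\n"
        f"{schema_text()}\n"
        f"Request: {user_request}\nJSON:"
    )


def parse_action(raw):
    """Validate a model reply against the union, using "type" as the discriminator."""
    data = json.loads(raw)
    kind = data.pop("type", None) if isinstance(data, dict) else None
    cls = INTENTS.get(kind) if isinstance(kind, str) else None
    if cls is None:
        raise ValueError("unknown or missing 'type' discriminator")
    expected = {f.name: f.type for f in fields(cls)}
    if set(data) != set(expected):
        raise ValueError(f"{cls.__name__} expects fields {sorted(expected)}")
    for key, typ in expected.items():
        if not isinstance(data[key], typ):
            raise ValueError(f"field '{key}' must be {typ.__name__}")
    return cls(**data)


print(build_prompt("two more oat milks please"))
action = parse_action('{"type": "AddItem", "name": "oat milk", "quantity": 2}')
print(action)  # AddItem(name='oat milk', quantity=2)
```

The prompt text is derived entirely from the types, so changing the schema changes both what the model is asked for and what the validator accepts.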

Code Llama is a family of large language models for code based on Llama 2 providing state-of-the-art performance among open models, infilling capabilities, support for large input contexts, and zero-shot instruction following ability for programming tasks. They provide multiple flavors to cover a wide range of applications: foundation models (Code Llama), Python specializations (Code Llama - Python), and instruction-following models (Code Llama - Instruct) with 7B, 13B and 34B parameters each. All models are trained on sequences of 16k tokens and show improvements on inputs with up to 100k tokens. 7B and 13B Code Llama and Code Llama - Instruct variants support infilling based on surrounding content. Code Llama was developed by fine-tuning Llama 2 using a higher sampling of code.
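Infilling means the model is given the code before and after a gap and asked to produce the middle. A minimal sketch of preparing such a request is shown below. The sentinel spellings `<PRE>`, `<SUF>` and `<MID>` and the exact spacing are assumptions based on a prefix-suffix-middle layout, so check the tokenizer you actually use; the helper names and the "model output" are hypothetical.

```python
# Sentinel spellings are an assumption; verify them against your tokenizer.
PRE, SUF, MID = "<PRE>", "<SUF>", "<MID>"


def build_infill_prompt(source, cursor):
    """Split source at the cursor and arrange it as prefix, suffix, then a middle marker."""
    prefix, suffix = source[:cursor], source[cursor:]
    return f"{PRE} {prefix} {SUF}{suffix} {MID}"


def splice(source, cursor, completion):
    """Insert the model's completion back at the cursor position."""
    return source[:cursor] + completion + source[cursor:]


source = "def add(a, b):\n    \n    return result\n"
cursor = source.index("\n    return")  # the gap the model should fill
prompt = build_infill_prompt(source, cursor)

# Hypothetical model output for the gap; no model is called in this sketch.
filled = splice(source, cursor, "result = a + b")
print(filled)
```

The model sees both sides of the gap before it generates, which is what lets it produce a line that is consistent with the `return result` that follows.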