Stanford's Hazy Research group recently explored how to maximize the speed of open-source models on modern GPUs, particularly in the challenging scenario of generating a single sequence with Llama-3.2-1B. Their findings reveal significant performance limitations in popular LLM inference engines and introduce a "megakernel" approach that delivers substantial speedups over existing inference engines.

They identified an opportunity: when running a single sequence with Llama-1B, performance is strongly memory-bound, meaning that execution speed is limited primarily by how quickly model weights can be loaded from GPU global memory. Yet popular LLM inference engines such as vLLM and SGLang utilize at most 50% of the available memory bandwidth when running this workload on high-performance accelerators like NVIDIA's H100.

The core problem is that existing systems break a model's forward pass into roughly one hundred separate kernels, each implementing only a few operations such as RMS normalization, attention, MLP layers with activations, or rotary position embeddings. Every kernel has setup and teardown periods during which no useful work is performed, and this overhead accumulates across the forward pass.

The authors identify three key problems with this kernel-based approach, each of which creates what they call "memory pipeline bubbles": periods when the GPU is not actively loading from memory.

Strict Ordering of GPU Kernels: GPU kernels are launched in a strict sequence where thread blocks in a new kernel cannot start until all thread blocks from previous kernels have completely finished. This creates waiting periods as stragglers finish their work.

Kernel Launch and Teardown Costs: Each kernel launch incurs overhead. Even with NVIDIA's CUDA graphs (designed to hide these costs), measurements on an H100 showed launch costs of about 1.3 microseconds per kernel—time during which the GPU performs no useful work.

Weight and Activation Loading Delays: After a new kernel starts, the GPU must wait to load weights and activations before computation can begin. These latencies result in thousands of idle cycles.

For a model like Llama-1B, with 16 layers and approximately 7 kernel launches per layer, that is about 112 kernels per forward pass. Even with an optimistic 5 microseconds of stalling per kernel, this adds roughly 560 microseconds of dead time to every pass. The theoretical memory limit on an H100 would allow approximately 1,350 forward passes per second (about 740 microseconds each), but pipeline bubbles reduce this to around 770 forward passes per second, or even lower once other inefficiencies are included.
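The bubble estimate above is easy to reproduce. The Python sketch below recomputes it: the layer count, kernels per layer, and 5 µs stall come from the text, while the ~1.24 billion parameters, 2-byte bf16 weights, and ~3.35 TB/s H100 SXM bandwidth are assumptions chosen to approximate the ~1,350 passes/s ceiling, not figures from the source.

```python
"""Back-of-the-envelope model of pipeline bubbles in a multi-kernel forward pass."""

# From the text
LAYERS = 16
KERNELS_PER_LAYER = 7
STALL_PER_KERNEL_S = 5e-6          # optimistic 5 us of stall per kernel

# Assumptions (not from the source)
PARAMS = 1.24e9                    # approximate Llama-3.2-1B parameter count
BYTES_PER_PARAM = 2                # bf16 weights
HBM_BYTES_PER_S = 3.35e12          # approximate H100 SXM peak memory bandwidth


def ideal_pass_time() -> float:
    """Seconds to stream every weight from HBM once, ignoring compute."""
    return PARAMS * BYTES_PER_PARAM / HBM_BYTES_PER_S


def bubbled_pass_time() -> float:
    """Ideal time plus a serialized stall at every kernel boundary."""
    kernels = LAYERS * KERNELS_PER_LAYER
    return ideal_pass_time() + kernels * STALL_PER_KERNEL_S


if __name__ == "__main__":
    print(f"kernels per pass: {LAYERS * KERNELS_PER_LAYER}")
    print(f"ideal passes/s:   {1 / ideal_pass_time():.0f}")
    print(f"bubbled passes/s: {1 / bubbled_pass_time():.0f}")
```

With these assumed inputs the script reports about 1,350 ideal passes per second and about 770 once bubbles are added. The model ignores compute time and treats every stall as fully serialized, so it is only a rough estimate.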

To address these limitations, the authors merged the entire Llama-1B forward pass into a single "megakernel" that eliminates kernel boundaries altogether. This approach achieves remarkable performance, utilizing 78% of memory bandwidth on an H100 and outperforming existing systems by over 1.5x.

However, building and pipelining all of these operations inside a single kernel raises several challenges.

Challenge 1: Fusing Numerous Operations

Traditional kernel fusion typically merges just two or three operations, but the Llama-1B forward pass requires fusing approximately one hundred. To manage this complexity, the authors built an on-GPU interpreter in which each streaming multiprocessor (SM) receives and executes a sequence of instructions. Their Llama forward-pass megakernel uses the following key instructions:

Fused RMS norm & QKV & RoPE instruction

Attention computation instruction

Attention reduction instruction (for ThunderGQA on long sequences)

O-projection + residual instruction

Fused RMS norm & up-gate & SiLU instruction

Down-projection + residual instruction

RMS norm & language modeling head instruction

These instructions were implemented using a common CUDA template with standardized load, store, and compute functions to ensure interoperability within the interpreter framework.
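To make the interpreter idea concrete, here is a minimal CPU-side sketch in Python, not the authors' CUDA code. Each simulated SM walks its own instruction list, and every instruction follows the same load/compute/store template, which is what lets the interpreter mix instructions freely. The opcodes (`scale`, `add`), the `Op` base class, and the dict standing in for global memory are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

# Toy CPU model of a megakernel interpreter (hypothetical names throughout).
# "Global memory" is a dict of named buffers; each SM runs its own program.


@dataclass
class Instruction:
    opcode: str
    args: Dict[str, Any] = field(default_factory=dict)


class Op:
    """Shared template: every instruction implements load, compute, store."""

    def load(self, mem: Dict[str, Any], args: Dict[str, Any]) -> Any:
        raise NotImplementedError

    def compute(self, data: Any, args: Dict[str, Any]) -> Any:
        raise NotImplementedError

    def store(self, mem: Dict[str, Any], args: Dict[str, Any], result: Any) -> None:
        mem[args["dst"]] = result


class Scale(Op):
    """Stand-in for a normalization-style elementwise step."""

    def load(self, mem, args):
        return mem[args["src"]]

    def compute(self, data, args):
        return [x * args["factor"] for x in data]


class Add(Op):
    """Stand-in for a residual add."""

    def load(self, mem, args):
        return mem[args["a"]], mem[args["b"]]

    def compute(self, data, args):
        a, b = data
        return [x + y for x, y in zip(a, b)]


OPS: Dict[str, Op] = {"scale": Scale(), "add": Add()}


def run_sm(program: List[Instruction], mem: Dict[str, Any]) -> None:
    """Interpret one SM's instruction stream in order."""
    for inst in program:
        op = OPS[inst.opcode]
        data = op.load(mem, inst.args)
        result = op.compute(data, inst.args)
        op.store(mem, inst.args, result)


if __name__ == "__main__":
    mem = {"x": [1.0, 2.0], "r": [0.5, 0.5]}
    program = [
        Instruction("scale", {"src": "x", "dst": "y", "factor": 2.0}),
        Instruction("add", {"a": "y", "b": "r", "dst": "out"}),
    ]
    run_sm(program, mem)
    print(mem["out"])  # [2.5, 4.5]
```

Because every op shares the same three-phase shape, adding a new instruction only means registering another `Op` subclass; the dispatch loop never changes.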

Challenge 2: Managing Shared Memory Resources

The megakernel architecture allows for pipelining memory loads across instructions, starting to load weights for an upcoming instruction even while a previous instruction is still finishing. However, this creates a resource allocation challenge: the loaded weights need somewhere to be stored.

The solution was a shared memory paging system. On an H100, the authors divided the first ~213 KB of shared memory into thirteen 16 KiB pages (13 × 16,384 = 212,992 bytes), reserving the remaining shared memory for special purposes such as instruction parameters. Instructions explicitly request and release these pages from the interpreter, which automatically passes released pages to subsequent instructions. This lets new instructions begin loading data as soon as shared memory becomes available, which further increases memory-bandwidth utilization.
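The paging scheme behaves like a small free-list allocator. The Python sketch below models it under stated assumptions: the 13 pages of 16 KiB come from the text, but the `PagePool` class, its `try_request`/`release` methods, and the first-in-first-out reuse order are hypothetical simplifications of what the interpreter does on the GPU.

```python
from collections import deque
from typing import Deque, List, Optional

NUM_PAGES = 13              # from the text
PAGE_BYTES = 16 * 1024      # 16 KiB per page


class PagePool:
    """Fixed pool of shared-memory pages, reused in the order they are released."""

    def __init__(self, num_pages: int = NUM_PAGES) -> None:
        self.free: Deque[int] = deque(range(num_pages))

    def try_request(self, n: int) -> Optional[List[int]]:
        """Return n page ids, or None if the caller must wait for releases."""
        if n > len(self.free):
            return None
        return [self.free.popleft() for _ in range(n)]

    def release(self, pages: List[int]) -> None:
        """Give pages back; the next instruction can start loading into them."""
        self.free.extend(pages)


if __name__ == "__main__":
    pool = PagePool()
    print("pool bytes:", NUM_PAGES * PAGE_BYTES)      # 212992, i.e. ~213 KB
    a = pool.try_request(10)                          # instruction A loads weights
    print("B before release:", pool.try_request(6))   # None: only 3 pages free
    pool.release(a[:4])                               # A frees pages it is done with
    b = pool.try_request(6)                           # B can now start loading
    print("B after release:", b)
```

The key point is the early partial release: instruction B starts loading its weights while A still holds six of its pages, which is how the megakernel overlaps one instruction's loads with the tail of the previous one.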

Challenge 3: Explicit Synchronization

In traditional multi-kernel execution, CUDA ensures that all input tensors for a kernel are fully produced and available before the kernel launches. With megakernels, this guarantee doesn't exist—when an SM starts executing a new instruction, its inputs might not be ready.

To address this synchronization challenge, the authors implemented a counter system in GPU global memory. When an instruction completes, it increments a specific counter; when a new instruction starts, it waits for relevant counters to reach target values, confirming that all dependencies have been satisfied.
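On the GPU these counters are integers in global memory that SMs update and poll. As a rough CPU analogy, the Python sketch below uses `threading.Condition` in place of atomic increments and spin-waiting; the `Counters` class and counter names such as `layer0.qkv` are hypothetical.

```python
import threading
from collections import defaultdict
from typing import DefaultDict, List


class Counters:
    """CPU stand-in for dependency counters kept in GPU global memory."""

    def __init__(self) -> None:
        self._values: DefaultDict[str, int] = defaultdict(int)
        self._cond = threading.Condition()

    def increment(self, key: str) -> None:
        """Called by a producer instruction when its output is written."""
        with self._cond:
            self._values[key] += 1
            self._cond.notify_all()

    def wait_for(self, key: str, target: int, timeout: float = 5.0) -> bool:
        """Block a consumer until `key` reaches `target` (False on timeout)."""
        with self._cond:
            return self._cond.wait_for(lambda: self._values[key] >= target, timeout)


def demo(num_heads: int = 4) -> List[str]:
    counters = Counters()
    log: List[str] = []
    log_lock = threading.Lock()

    def qkv_instruction(head: int) -> None:
        with log_lock:
            log.append(f"qkv head {head} written")
        counters.increment("layer0.qkv")  # signal only after the output exists

    def attention_instruction() -> None:
        ready = counters.wait_for("layer0.qkv", target=num_heads)
        with log_lock:
            log.append("attention starts" if ready else "timed out")

    # Start the consumer first to show it really waits on the counter.
    threads = [threading.Thread(target=attention_instruction)]
    threads += [threading.Thread(target=qkv_instruction, args=(h,)) for h in range(num_heads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


if __name__ == "__main__":
    for line in demo():
        print(line)
```

However the threads are scheduled, "attention starts" is always logged last, because the consumer cannot proceed until every producer has incremented the counter.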

This approach enabled advanced optimizations such as chunking the intermediate state in the multi-layer perceptrons (MLPs). Rather than waiting for the entire hidden state to complete before beginning the down-projection matrix multiply (as would be necessary with Programmatic Dependent Launch, or PDL), the megakernel produces and consumes the intermediate state in four chunks, each with its own counter. This allows the down projection to start processing a chunk as soon as it is ready, without waiting for the entire hidden state.
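The chunking works because a matrix-vector product decomposes over column blocks: W·h equals the sum over chunks k of W[:, k] · h[k], where h[k] is the k-th slice of the intermediate state. The Python sketch below checks this numerically with plain lists; the function names and the `order` parameter (which simulates chunks finishing out of order) are hypothetical, and a real megakernel would use tiled GPU matmuls rather than Python loops.

```python
from typing import Iterable, List, Optional

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Reference: full down projection once the whole hidden state exists."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def chunked_down_proj(
    w: Matrix, h: Vector, num_chunks: int = 4, order: Optional[Iterable[int]] = None
) -> Vector:
    """Accumulate W @ h one chunk of h at a time.

    Each chunk only touches its own slice of h and the matching columns of W,
    so it can be consumed as soon as that chunk's counter says it is ready.
    `order` simulates chunks finishing out of order.
    """
    if len(h) % num_chunks != 0:
        raise ValueError("this sketch assumes equal-sized chunks")
    size = len(h) // num_chunks
    out = [0.0] * len(w)
    for k in (order if order is not None else range(num_chunks)):
        lo, hi = k * size, (k + 1) * size
        for r, row in enumerate(w):
            out[r] += sum(a * b for a, b in zip(row[lo:hi], h[lo:hi]))
    return out


if __name__ == "__main__":
    w = [[1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0],
         [8.0, 7.0, 6.0, 5.0, 4.0, 3.0, 2.0, 1.0]]
    h = [1.0, 0.0, 2.0, 0.0, 3.0, 0.0, 4.0, 0.0]
    print(matvec(w, h))                                   # [50.0, 40.0]
    print(chunked_down_proj(w, h, order=[2, 0, 3, 1]))    # same result
```

Since the partial products are simply summed, the down projection can accumulate chunks in whatever order they arrive; only the final residual write has to wait for all four.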

Performance Results

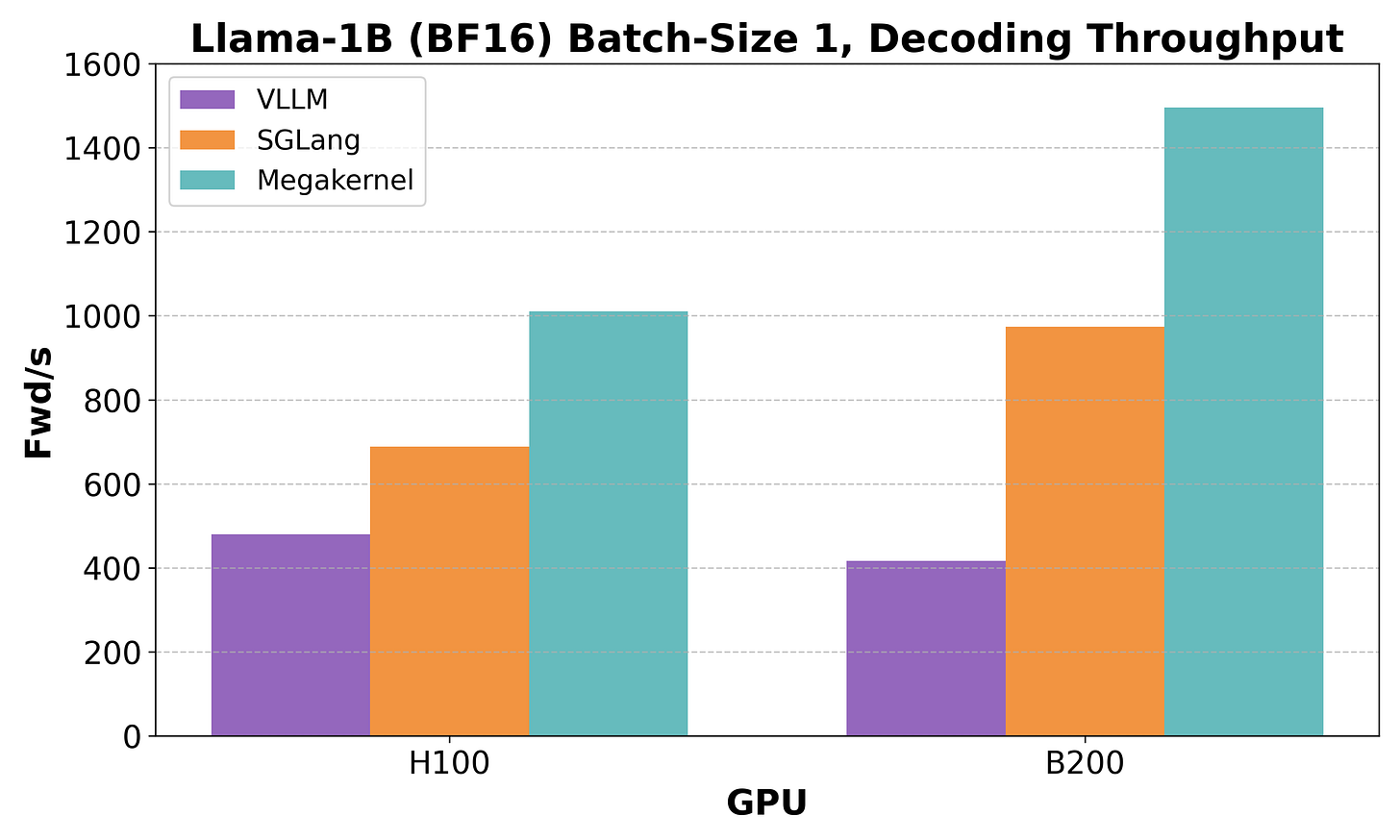

The megakernel delivers substantial speedups over existing inference engines:

On an H100, it runs almost 2.5x faster than vLLM and over 1.5x faster than SGLang.

On a B200, the performance gap with vLLM increases to over 3.5x, while maintaining more than 1.5x advantage over SGLang.

Other Approaches

While the megakernel approach demonstrates superior performance, the authors acknowledge that other technical approaches have their own merits:

CUDA Graphs: This NVIDIA feature helps hide kernel launch costs, reducing launch overhead from about 2.1 microseconds to 1.3 microseconds on an H100. While this represents a 38% improvement, it still leaves significant performance on the table compared to the megakernel approach.

Programmatic Dependent Launch (PDL): This NVIDIA mechanism allows the next kernel to start preparing while the previous kernel is still running, potentially improving pipelining. However, the authors found that PDL's synchronization mechanism (cudaGridDependencySynchronize) is too coarse-grained. For example, it requires waiting for all queries, keys, and values to complete before starting attention calculations, rather than allowing heads to start as soon as they're ready. The megakernel's fine-grained counter-based synchronization offers more flexibility and efficiency.

Traditional Kernel-Based Execution: The standard approach of dividing operations into separate kernels does provide automatic synchronization guarantees and simpler programming models. However, these benefits come at the cost of significant performance penalties for low-latency, memory-bound workloads.

Libraries

garak checks if an LLM can be made to fail in a way we don't want. garak probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. If you know nmap or msf / Metasploit Framework, garak does somewhat similar things to them, but for LLMs.

garak focuses on ways of making an LLM or dialog system fail. It combines static, dynamic, and adaptive probes to explore this.

garak is a free tool, and its developers are always interested in adding functionality to support applications.

miniDiffusion is a reimplementation of the Stable Diffusion 3.5 model in pure PyTorch with minimal dependencies. It's designed for education, experimentation, and hacking. It was built with the goal of using the least code necessary to recreate Stable Diffusion 3.5 from scratch: only ~2800 lines spanning the VAE, the DiT, and the training and dataset scripts.

Swift Scribe is a privacy-first, AI-enhanced transcription application built exclusively for iOS 26/macOS 26+ that transforms spoken words into organized, searchable notes. Using Apple's latest SpeechAnalyzer and SpeechTranscriber frameworks (available only in iOS 26/macOS 26+) combined with on-device Foundation Models, it delivers real-time speech recognition, intelligent content analysis, and advanced text editing capabilities.

Below the Fold

container is a tool that you can use to create and run Linux containers as lightweight virtual machines on your Mac. It's written in Swift, and optimized for Apple silicon.

The tool consumes and produces OCI-compliant container images, so you can pull and run images from any standard container registry. You can push images that you build to those registries as well, and run the images in any other OCI-compliant application.

container uses the Containerization Swift package for low level container, image, and process management.

Zellij is a workspace aimed at developers, ops-oriented people and anyone who loves the terminal. Similar programs are sometimes called "Terminal Multiplexers".

Zellij is designed around the philosophy that one must not sacrifice simplicity for power, taking pride in its great experience out of the box as well as the advanced features it places at its users' fingertips.

Zellij is geared toward beginners and power users alike, offering deep customizability, personal automation through layouts, true multiplayer collaboration, unique UX features such as floating and stacked panes, and a plugin system that lets you write plugins in any language that compiles to WebAssembly.

pgroll is an open source command-line tool that offers safe and reversible schema migrations for PostgreSQL by serving multiple schema versions simultaneously. It takes care of the complex migration operations to ensure that client applications continue working while the database schema is being updated. This includes ensuring changes are applied without locking the database, and that both old and new schema versions work simultaneously (even when breaking changes are being made!). This removes risks related to schema migrations, and greatly simplifies client application rollout, also allowing for instant rollbacks.

Ephe is an ephemeral markdown paper to organize your daily todos and thoughts.

Traditional todo apps can be overwhelming.

Ephe is designed to organize your tasks with plain Markdown.

Ephe gives you just one clean page to focus your day.

patolette is a C / Python color quantization and dithering library.

At its core, it implements a weighted variant of Xiaolin Wu's PCA-based quantizer (not to be confused with the more popular Wu quantizer from Graphics Gems vol. II).

Some of its key features are:

Avoids axis-aligned subdivisions

Supports the CIEL*u*v* and ICtCp color spaces

Optional use of saliency maps to give higher weight to areas that stand out visually

Optional KMeans refinement

RomM (ROM Manager) allows you to scan, enrich, browse and play your game collection with a clean and responsive interface. With support for multiple platforms, various naming schemes, and custom tags, RomM is a must-have for anyone who plays on emulators.