Articles

OLMo 2 32B

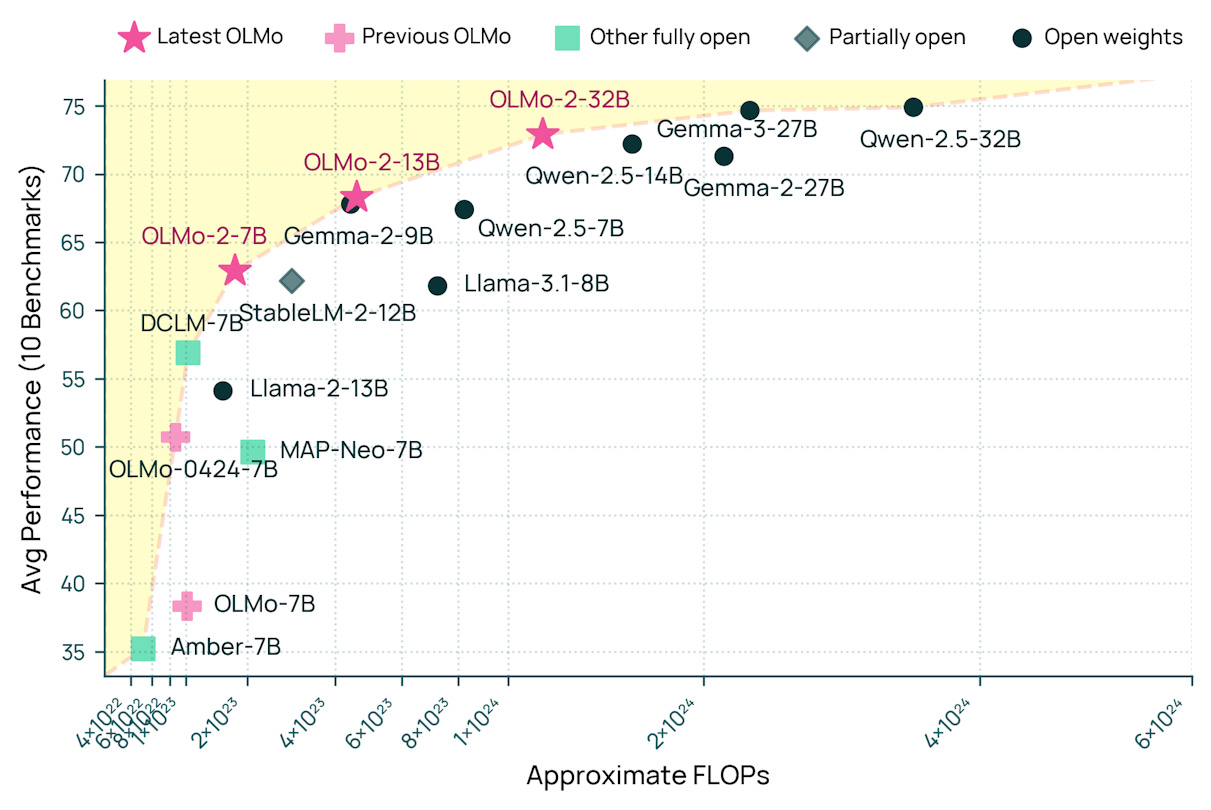

The Allen Institute for AI (AI2) has released OLMo 2 32B, a new foundation model in its family of open-source language models. It is the largest and most capable model in the OLMo 2 family, building on its 7B and 13B predecessors. OLMo 2 32B is the first fully open model (with all data, code, weights, and training details publicly available) to outperform GPT-3.5 Turbo and GPT-4o mini on a range of academic benchmarks.

State-of-the-art performance: OLMo 2 32B matches or surpasses GPT-3.5 Turbo, GPT-4o mini, Qwen 2.5 32B, and Mistral 24B, and approaches the performance of Qwen 2.5 72B and Llama 3.1/3.3 70B.

Fully open recipe: AI2 provides a complete, end-to-end recipe for language model training, including data, training methodology, and software, enabling researchers and developers to build and customize state-of-the-art pipelines.

Efficient pretraining: The model is trained on the same resource-efficient pretraining and mid-training mixes used for the 7B and 13B models. The training codebase was rewritten as OLMo-core, which provides better support for larger models, different training paradigms, and multimodality.

Refined post-training: The model integrates reinforcement learning with verifiable rewards (RLVR) as part of the Tülu 3.1 recipe, using Group Relative Policy Optimization (GRPO) and improved training infrastructure.

Accessibility and support: OLMo 2 32B is supported in Hugging Face's Transformers library and vLLM's main branch.

Pre-Training and Fine-Tuning

The development of OLMo 2 32B consists of two phases of training, pre-training and fine-tuning:

Base Model Training: This phase focuses on pretraining and mid-training the model on a large corpus of text data.

Instruct Model Training: This phase refines the base model using supervised fine-tuning (SFT), direct preference optimization (DPO), and reinforcement learning with verifiable rewards (RLVR) to create an instruction-following model.

The post splits pre-training itself into two stages: pre-training and “mid-training”. Mid-training builds on top of pre-training, but it is not supervised fine-tuning either.

Pretraining

Dataset: OLMo-Mix-1124, a collection of 3.9 trillion tokens sourced from DCLM, Dolma, Starcoder, and Proof Pile II.

Training Duration: The 32B model was trained for 1.5 epochs, totaling 6 trillion tokens.
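As a quick check on these figures, 1.5 epochs over the 3.9-trillion-token mix works out to just under 6 trillion tokens:

```latex
3.9\,\text{T tokens} \times 1.5\ \text{epochs} = 5.85\,\text{T tokens} \approx 6\,\text{T tokens}
```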

Objective: The goal of pretraining is to expose the model to a vast amount of text data, enabling it to learn general language patterns, syntax, and semantics.

Mid-training

Dataset: Dolmino, a curated collection of 843 billion tokens, derived from:

High-quality documents re-sampled from OLMo-Mix-1124 using a quality filter.

Educational, math, and academic content not included in OLMo-Mix-1124.

Instruction-tuning data, including synthetic and human-generated data from the Tulu 3 Mix.

Training: Mid-training for the 32B model uses two samples of Dolmino, one of 100B tokens and one of 300B tokens. Three checkpoints are trained on the 100B sample, each with a different data order. One checkpoint is trained on the 300B sample. The four resulting model variants are then merged by model souping, that is, by averaging their weights.
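Model souping can be illustrated with a small sketch. It assumes a uniform average, which the post does not confirm. It also assumes a hypothetical checkpoint format: a dict mapping parameter names to flat lists of floats. Real checkpoints hold tensors, but the element-wise averaging is the same.

```python
from typing import Dict, List

# Hypothetical checkpoint format: parameter name -> flat list of floats.
# Real checkpoints hold tensors, but the averaging logic is the same.
StateDict = Dict[str, List[float]]


def soup(checkpoints: List[StateDict]) -> StateDict:
    """Uniformly average every parameter across the given checkpoints."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    names = checkpoints[0].keys()
    for ckpt in checkpoints[1:]:
        if ckpt.keys() != names:
            raise ValueError("checkpoints must share the same parameter names")
    n = len(checkpoints)
    souped: StateDict = {}
    for name in names:
        tensors = [ckpt[name] for ckpt in checkpoints]
        if len({len(t) for t in tensors}) != 1:
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # zip(*tensors) groups the same element position across all checkpoints.
        souped[name] = [sum(values) / n for values in zip(*tensors)]
    return souped


# Three runs on the 100B sample (different data orders) plus one run on the 300B sample.
runs = [
    {"layer.weight": [1.0, 2.0]},
    {"layer.weight": [3.0, 4.0]},
    {"layer.weight": [5.0, 6.0]},
    {"layer.weight": [7.0, 8.0]},
]
merged = soup(runs)
print(merged)  # {'layer.weight': [4.0, 5.0]}
```

Averaging only makes sense because all four runs start from the same pretrained checkpoint. Their weights therefore stay in a shared region of parameter space.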

Objective: Mid-training aims to refine the model's knowledge by focusing on higher-quality and more specific data, improving its understanding of complex topics and instruction-following abilities.

This differs from post-training. As I understand it, mid-training is less about instructions or label information and more about a different data distribution that the model did not see during pre-training, hence the name “mid-training”.

Instruct Model Training (Post-training)

The post-training recipe for OLMo 2 32B closely follows the Tülu 3 approach, which consists of three stages:

Supervised Fine-Tuning (SFT): Training the model on high-quality instruction data.

Direct Preference Optimization (DPO): Training the model to align with human preferences.

Reinforcement Learning with Verifiable Rewards (RLVR): Using reinforcement learning to further improve the model's performance and ensure alignment with desired behaviors.
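The DPO stage can be illustrated with the standard DPO objective for a single preference pair. The inputs are summed log-probabilities of the chosen and rejected responses under the policy and under a frozen reference model. The value β = 0.1 is only illustrative. The exact DPO variant and hyperparameters used for OLMo 2 may differ from this sketch.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair, from summed sequence log-probs."""
    # Log-ratio of the policy to the frozen reference model for each response.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) == softplus(-margin)
    return softplus(-margin)


neutral = dpo_loss(-10.0, -10.0, -10.0, -10.0)  # policy == reference: loss is log(2)
better = dpo_loss(-8.0, -14.0, -10.0, -10.0)    # policy favors the chosen response more
print(neutral, better)
```

The loss falls as the policy raises the chosen response's likelihood relative to the reference more than it raises the rejected one's. No separate reward model is needed.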

Modifications to the Tülu 3 Recipe

Data Filtering: Instructions and chosen responses mentioning date cutoffs from the synthetic data generation process were filtered out, resulting in updated datasets: Tulu 3 SFT Mixture 0225 and OLMo-2-32B-pref-mix-0325.
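The post does not say how the date-cutoff mentions were detected. The following is only a hypothetical keyword filter. The regex phrases and the "prompt"/"response" field names are assumptions chosen for illustration.

```python
import re
from typing import Dict, List

# Hypothetical phrases; the exact filter used for OLMo 2's data is not specified.
CUTOFF_PATTERN = re.compile(
    r"(knowledge|training(?: data)?) cutoff|as of my last (?:update|training)",
    re.IGNORECASE,
)


def mentions_date_cutoff(text: str) -> bool:
    return CUTOFF_PATTERN.search(text) is not None


def drop_cutoff_examples(examples: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep only examples whose prompt and response never mention a date cutoff."""
    return [
        ex for ex in examples
        if not (mentions_date_cutoff(ex["prompt"]) or mentions_date_cutoff(ex["response"]))
    ]


examples = [
    {"prompt": "What is 12 * 12?", "response": "144"},
    {"prompt": "Who won the last World Cup?",
     "response": "As of my last update, I cannot know recent results."},
    {"prompt": "What is your knowledge cutoff?", "response": "2023."},
]
clean = drop_cutoff_examples(examples)
print(len(clean))  # 1
```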

Majority Voting for Math Questions: To enhance the quality of answers to synthetic math questions, majority voting was implemented. Only prompts and completions where the model reached a majority vote over 5 completions were included in the updated math and grade school math datasets.
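The majority-vote filter can be sketched as follows. It assumes that each completion's final answer has already been extracted and normalized to a string. It also reads "majority over 5 completions" as a strict majority, meaning at least 3 of 5 agree. Both are assumptions, not details from the post.

```python
from collections import Counter
from typing import Dict, List, Optional


def majority_answer(answers: List[str], min_votes: int = 3) -> Optional[str]:
    """Return the most common answer if at least `min_votes` completions agree, else None."""
    if not answers:
        return None
    answer, count = Counter(answers).most_common(1)[0]
    return answer if count >= min_votes else None


def filter_by_majority(samples: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Keep prompts with a majority answer, and only the completions that agree with it."""
    kept = {}
    for prompt, answers in samples.items():
        winner = majority_answer(answers)
        if winner is not None:
            kept[prompt] = [a for a in answers if a == winner]
    return kept


# Final answers already extracted from 5 sampled completions per prompt.
samples = {
    "q1": ["42", "42", "41", "42", "40"],  # 3 of 5 agree: kept
    "q2": ["7", "8", "9", "7", "10"],      # at most 2 of 5 agree: dropped
}
print(filter_by_majority(samples))  # {'q1': ['42', '42', '42']}
```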

Group Relative Policy Optimization (GRPO): The RLVR training stage was applied to GSM8K, IFEval, and MATH prompts, similar to the Llama 3.1 8B Tülu 3.1 model, using Group Relative Policy Optimization (GRPO). Intermediate RL checkpoints have been released for researchers to study the impacts of RLVR on instruction models.
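The core of GRPO with verifiable rewards can be sketched in two steps. First, a binary correctness check scores several completions sampled for the same prompt. Second, each score is normalized against the group's mean and standard deviation. No learned value function is involved. The exact-match reward below is a simplification. The policy-gradient update itself (clipped ratio, KL term) is omitted.

```python
import statistics
from typing import List


def verifiable_reward(predicted: str, reference: str) -> float:
    """Binary reward: 1.0 if the extracted final answer matches the reference."""
    return 1.0 if predicted.strip() == reference.strip() else 0.0


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize each reward against the group of completions for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled completions for one GSM8K-style prompt whose reference answer is "18".
answers = ["18", "18", "17", "20"]
rewards = [verifiable_reward(a, "18") for a in answers]
advantages = group_relative_advantages(rewards)
print(rewards)                            # [1.0, 1.0, 0.0, 0.0]
print([round(a, 3) for a in advantages])  # [1.0, 1.0, -1.0, -1.0]
```

If every completion in a group is right, or every one is wrong, all advantages are zero. Such prompts therefore contribute no learning signal.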

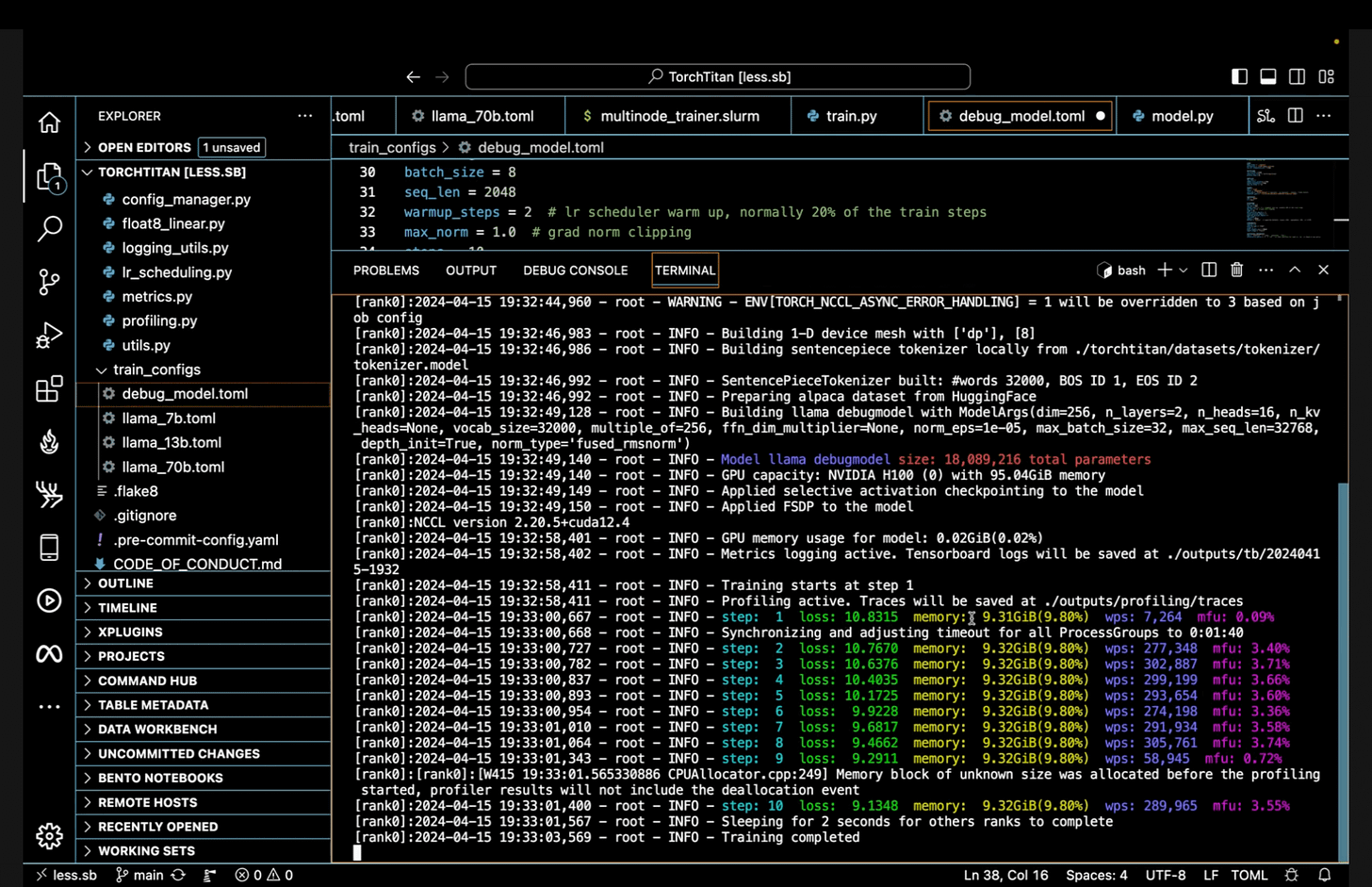

OLMo-core is a redesigned pretraining codebase that supports larger models, diverse training paradigms, and modalities beyond text. It focuses on efficiency, scalability, and flexibility.

Efficiency

Asynchronous Distributed Checkpointing: Checkpoint state is copied to CPU and asynchronously saved to disk or cloud storage while training continues, using PyTorch's distributed.checkpoint API. This ensures each rank in the distributed process group saves its local shard of the model's parameters and optimizer state, parallelizing the saving process.

Minimal Host-Device Syncs: The codebase minimizes synchronization points by maintaining a small running buffer of metrics like loss on CUDA and periodically flushing it to CPU for logging operations.
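The "snapshot, then write in the background" pattern behind asynchronous checkpointing can be shown with a single-process, standard-library sketch. It is not OLMo-core's code. In the real system each rank writes its own shard through torch.distributed.checkpoint, and recent PyTorch releases expose an async_save function for this. Here, copy.deepcopy stands in for the GPU-to-CPU copy.

```python
import copy
import pickle
import tempfile
import threading
from pathlib import Path


def async_save(state: dict, path: Path) -> threading.Thread:
    """Snapshot `state` now, then write the snapshot to `path` on a background thread."""
    # Stand-in for the GPU -> CPU copy: a private snapshot that training can no longer mutate.
    snapshot = copy.deepcopy(state)

    def _write() -> None:
        tmp = path.with_name(path.name + ".tmp")
        with open(tmp, "wb") as f:
            pickle.dump(snapshot, f)
        tmp.replace(path)  # rename at the end so readers never see a half-written file

    thread = threading.Thread(target=_write)
    thread.start()
    return thread


with tempfile.TemporaryDirectory() as tmpdir:
    state = {"step": 100, "weights": [0.5, -0.25]}
    ckpt_path = Path(tmpdir) / "step100.pkl"
    writer = async_save(state, ckpt_path)
    state["weights"][0] = 9.9  # training keeps updating parameters meanwhile
    writer.join()              # e.g. before the next save, or at exit
    with open(ckpt_path, "rb") as f:
        restored = pickle.load(f)

print(restored)  # {'step': 100, 'weights': [0.5, -0.25]}
```

The only blocking cost is the snapshot copy. The slow write to disk or cloud storage overlaps with the next training steps.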

PyTorch Best Practices: OLMo-core leverages new PyTorch features such as torch.compile, FSDP2, and selective activation checkpointing, following best practices from torchtitan.

Scalability

4D+ Parallelism: OLMo-core supports pipeline parallelism, data parallelism (through DDP, FSDP, or HSDP), context parallelism, tensor parallelism, and expert parallelism for MoE models.
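Composing several parallelism dimensions amounts to viewing the global rank as a coordinate in a multi-dimensional grid. The sketch below assumes a pp × dp × cp × tp layout with tensor parallelism innermost, and it omits expert parallelism. It shows the arithmetic only, not OLMo-core's actual mesh setup. In PyTorch, torch.distributed.device_mesh.init_device_mesh builds this kind of named mesh.

```python
from math import prod
from typing import Dict

# Assumed layout, outermost to innermost. Keeping TP innermost places each
# tensor-parallel group on consecutive ranks (typically the same node).
DIMS = ("pp", "dp", "cp", "tp")


def mesh_coords(rank: int, sizes: Dict[str, int]) -> Dict[str, int]:
    """Map a global rank to its coordinate along each parallelism dimension."""
    world_size = prod(sizes[d] for d in DIMS)
    if not 0 <= rank < world_size:
        raise ValueError(f"rank {rank} is outside a world of size {world_size}")
    coords = {}
    remaining = rank
    for dim in reversed(DIMS):  # peel off the innermost dimension first
        remaining, coords[dim] = divmod(remaining, sizes[dim])
    return {dim: coords[dim] for dim in DIMS}


sizes = {"pp": 2, "dp": 4, "cp": 1, "tp": 8}  # 64 GPUs in total
print(mesh_coords(13, sizes))  # {'pp': 0, 'dp': 1, 'cp': 0, 'tp': 5}
```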

Fine-Grained Activation Checkpointing: The library offers a configurable activation checkpointing API, enabling fine-grained strategies that can target specific submodules or operations.

Flexibility

Modular Design: OLMo-core's modular design allows for easy integration of custom model components, optimizers, learning rate schedules, and data loaders. The trainer is agnostic to the model architecture, parallelism strategy, and data format, and it features a flexible callback API for training loop customization.
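A toy example shows how a callback API keeps the training loop generic. All class and method names here (Trainer, Callback, on_step_end, on_train_end) are hypothetical and do not reproduce OLMo-core's actual API. The trainer knows only how to call a step function and notify callbacks, so model, parallelism, and data details stay out of the loop.

```python
from typing import Callable, List, Tuple


class Callback:
    """Base class with no-op hooks; subclasses override only the hooks they need."""

    def on_step_end(self, step: int, loss: float) -> None:
        pass

    def on_train_end(self) -> None:
        pass


class Trainer:
    """Toy trainer: the model, parallelism and data details all hide behind `train_step`."""

    def __init__(self, train_step: Callable[[int], float], callbacks: List[Callback]):
        self.train_step = train_step
        self.callbacks = callbacks

    def fit(self, num_steps: int) -> None:
        for step in range(1, num_steps + 1):
            loss = self.train_step(step)
            for cb in self.callbacks:
                cb.on_step_end(step, loss)
        for cb in self.callbacks:
            cb.on_train_end()


class LossLogger(Callback):
    """Records the loss every `every` steps and marks when training finishes."""

    def __init__(self, every: int):
        self.every = every
        self.history: List[Tuple[int, float]] = []
        self.finished = False

    def on_step_end(self, step: int, loss: float) -> None:
        if step % self.every == 0:
            self.history.append((step, loss))

    def on_train_end(self) -> None:
        self.finished = True


logger = LossLogger(every=2)
Trainer(train_step=lambda step: 1.0 / step, callbacks=[logger]).fit(num_steps=6)
print(logger.history)  # [(2, 0.5), (4, 0.25), (6, 0.16666666666666666)]
```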

Unified API for Local and Remote Filesystems: The trainer recognizes whether the save folder is a cloud storage URL or a local path and automatically uploads checkpoints to the remote folder. The data loader seamlessly streams data from cloud storage, enabling easy migration of training jobs between clusters.
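One simple way to decide between "upload" and "write locally" is to inspect the URL scheme of the save folder. The sketch below does this with the standard library. The set of remote schemes and the step-folder naming are assumptions made for illustration.

```python
from typing import Tuple
from urllib.parse import urlparse

# Hypothetical set of URL schemes treated as remote object storage.
REMOTE_SCHEMES = {"gs", "s3", "http", "https"}


def is_remote(path: str) -> bool:
    """True if `path` is a URL with a remote scheme rather than a local filesystem path."""
    return urlparse(path).scheme in REMOTE_SCHEMES


def checkpoint_target(save_folder: str, step: int) -> Tuple[str, str]:
    """Decide where a checkpoint goes and whether it must be uploaded."""
    location = f"{save_folder.rstrip('/')}/step{step}"
    return ("upload", location) if is_remote(save_folder) else ("local", location)


print(checkpoint_target("gs://my-bucket/run1/", 1000))   # ('upload', 'gs://my-bucket/run1/step1000')
print(checkpoint_target("/mnt/checkpoints/run1", 1000))  # ('local', '/mnt/checkpoints/run1/step1000')
```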

Efficiency Improvements

Underperforming Compute Nodes: Underperforming nodes, which significantly degrade overall job performance, were identified and replaced more quickly with support from Google engineers.

Network Topology: Aligning NCCL ranks with the underlying hardware topology improved network utilization and job reliability.

Asynchronous Checkpointing Improvements: Reducing the frequency of checkpointing and throttling the upload speed to Google Storage mitigated performance impacts on the cluster management network, resulting in a 30% improvement in throughput.

Hybrid Sharding: Implementation of hybrid sharding, where the model is sharded across smaller groups of GPUs, addressed performance compromises associated with FSDP at high GPU counts. Switching to PyTorch 2.6, which fixed a bug preventing this configuration from working, yielded an immediate speed-up of around 20%.
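In hybrid sharding, parameters are sharded within small groups of GPUs and replicated across those groups. Each all-gather therefore spans only one group, and gradient all-reduces run between ranks holding the same shard. The sketch below computes both kinds of group. The shard-group size used in the example is illustrative, not the value from the OLMo 2 run. In PyTorch's FSDP2, this layout corresponds to passing a 2D (replicate, shard) device mesh to fully_shard.

```python
from typing import List, Tuple


def hsdp_groups(world_size: int, shard_size: int) -> Tuple[List[List[int]], List[List[int]]]:
    """Return (shard groups, replicate groups) for hybrid sharded data parallelism."""
    if world_size % shard_size != 0:
        raise ValueError("world_size must be a multiple of shard_size")
    num_replicas = world_size // shard_size
    # Parameters are sharded across consecutive ranks (e.g. GPUs on the same node or nodes).
    shard_groups = [list(range(r * shard_size, (r + 1) * shard_size)) for r in range(num_replicas)]
    # Ranks holding the same shard form a replicate group; gradients are all-reduced across them.
    replicate_groups = [list(range(i, world_size, shard_size)) for i in range(shard_size)]
    return shard_groups, replicate_groups


shards, replicas = hsdp_groups(world_size=16, shard_size=8)
print(shards)    # [[0, 1, 2, 3, 4, 5, 6, 7], [8, 9, 10, 11, 12, 13, 14, 15]]
print(replicas)  # [[0, 8], [1, 9], [2, 10], [3, 11], [4, 12], [5, 13], [6, 14], [7, 15]]
```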

Faster NCCL Collectives: Utilizing a new version of Google's NCCL drivers, which enabled the NCCL LL128 algorithm, provided flexibility for future training runs regarding batch sizes and recomputation.

PyTorch has a new website called “Landscape” that showcases a number of libraries that are built on or work with PyTorch.

If you want to read more, there is also a good guide that covers the different types of libraries.

Libraries

torchtitan is a proof-of-concept for large-scale LLM training using native PyTorch. It is (and will continue to be) a repo to showcase PyTorch's latest distributed training features in a clean, minimal codebase. torchtitan is complementary to and not a replacement for any of the great large-scale LLM training codebases such as Megatron, MegaBlocks, LLM Foundry, DeepSpeed, etc. Instead, we hope that the features showcased in torchtitan will be adopted by these codebases quickly. torchtitan is unlikely to ever grow a large community around it.

Principles for torchtitan:

Designed to be easy to understand, use and extend for different training purposes.

Minimal changes to the model code when applying multi-dimensional parallelism.

Modular components instead of a monolithic codebase.

Key Features

Multi-dimensional composable parallelisms

FSDP2 with per-parameter sharding

Tensor Parallel (including async TP)

Meta device initialization

Selective (layer or operator) activation checkpointing

Distributed checkpointing (including async checkpointing)

Interoperable checkpoints which can be loaded directly into torchtune for fine-tuning

torch.compile support

DDP and HSDP

TorchFT integration

Checkpointable data-loading, with the C4 dataset pre-configured (144M entries) and support for custom datasets

Flexible learning rate scheduler (warmup-stable-decay)

Loss, GPU memory, throughput (tokens/sec), TFLOPs, and MFU displayed and logged via TensorBoard or Weights & Biases

Debugging tools including CPU/GPU profiling, memory profiling, Flight Recorder, etc.

All options easily configured via toml files

Helper scripts to:

download tokenizers from Hugging Face

convert original Llama 3 checkpoints into the expected DCP format

estimate FSDP/HSDP memory usage without materializing the model

run distributed inference with Tensor Parallel
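A warmup-stable-decay (WSD) schedule has three phases: the learning rate ramps up, holds at its peak for most of training, and then decays over a short final phase. The sketch below assumes linear warmup and linear decay. WSD implementations may use other decay shapes, and the parameter names are illustrative rather than torchtitan's config keys.

```python
def wsd_lr(step: int, peak_lr: float, warmup_steps: int, stable_steps: int,
           decay_steps: int, min_lr: float = 0.0) -> float:
    """Warmup-stable-decay schedule with linear warmup and linear decay (assumed shapes)."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps  # linear warmup
    if step < warmup_steps + stable_steps:
        return peak_lr                              # long constant plateau
    decay_step = step - warmup_steps - stable_steps
    if decay_step >= decay_steps:
        return min_lr
    frac = decay_step / decay_steps
    return peak_lr + (min_lr - peak_lr) * frac      # linear decay to min_lr


schedule = [wsd_lr(s, peak_lr=1.0, warmup_steps=10, stable_steps=20, decay_steps=10) for s in range(41)]
print(schedule[4], schedule[20], schedule[35], schedule[40])  # 0.5 1.0 0.5 0.0
```

Because the plateau is flat, a decay phase can be branched off from any plateau checkpoint. You do not need to fix the total training length up front.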

🐫 CAMEL is an open-source community dedicated to finding the scaling laws of agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

TorchCodec is a Python library for decoding videos into PyTorch tensors, on CPU and CUDA GPU. It aims to be fast, easy to use, and well integrated into the PyTorch ecosystem. If you want to use PyTorch to train ML models on videos, TorchCodec is how you turn those videos into data.

We achieve these capabilities through:

Pythonic APIs that mirror Python and PyTorch conventions.

Relying on FFmpeg to do the decoding. TorchCodec uses the version of FFmpeg you already have installed. FFmpeg is a mature library with broad coverage available on most systems. It is, however, not easy to use. TorchCodec abstracts FFmpeg's complexity to ensure it is used correctly and efficiently.

Returning data as PyTorch tensors, ready to be fed into PyTorch transforms or used directly to train models.

Cube: Foundation models trained on vast amounts of data have demonstrated remarkable reasoning and generation capabilities in the domains of text, images, audio, and video. Roblox’s goal with Cube is to build such a foundation model for 3D intelligence, a model that can support developers in producing all aspects of a Roblox experience, from generating 3D objects and scenes to rigging characters for animation to producing programmatic scripts describing object behaviors.

garak checks if an LLM can be made to fail in a way we don't want. garak probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. If you know nmap or msf / Metasploit Framework, garak does somewhat similar things to them, but for LLMs.

garak focuses on ways of making an LLM or dialog system fail. It combines static, dynamic, and adaptive probes to explore this.

garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

SpatialLM is a 3D large language model designed to process 3D point cloud data and generate structured 3D scene understanding outputs. These outputs include architectural elements like walls, doors, windows, and oriented object bounding boxes with their semantic categories. Unlike previous methods that require specialized equipment for data collection, SpatialLM can handle point clouds from diverse sources such as monocular video sequences, RGBD images, and LiDAR sensors. This multimodal architecture effectively bridges the gap between unstructured 3D geometric data and structured 3D representations, offering high-level semantic understanding. It enhances spatial reasoning capabilities for applications in embodied robotics, autonomous navigation, and other complex 3D scene analysis tasks.

Torch Lens Maker is an open-source Python library for differentiable geometric optics based on PyTorch. It is currently a very experimental project; the goal is to be able to design complex real-world optical systems (lenses, mirrors, etc.) using modern computer code and state-of-the-art numerical optimization.

Below the Fold

fd is a program to find entries in your filesystem. It is a simple, fast and user-friendly alternative to find. While it does not aim to support all of find's powerful functionality, it provides sensible (opinionated) defaults for a majority of use cases.

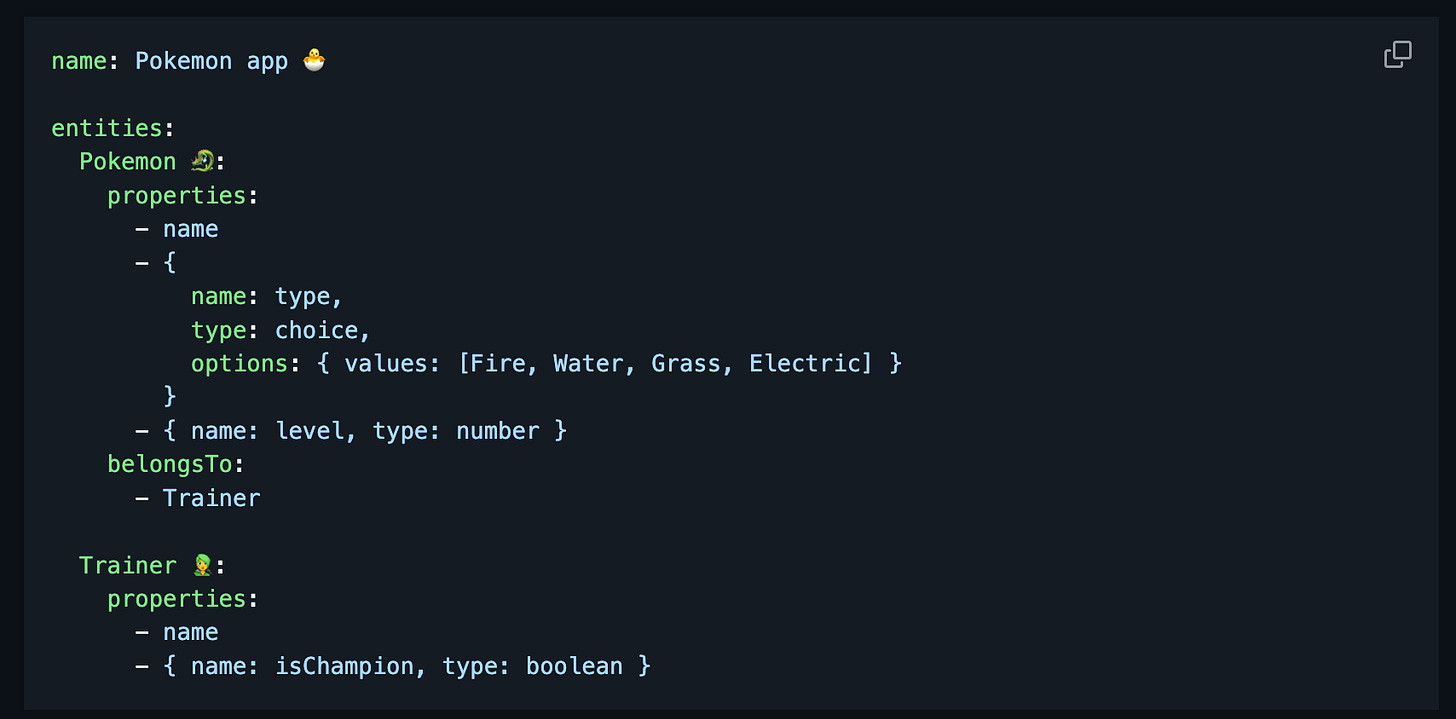

80% of websites and apps only use the most basic backend features. Using over-engineered solutions leads to unnecessary costs and complexity.

Manifest keeps it simple, delivering only the essential backend features and integrating smoothly into your project like any other file in your codebase.

Docs is a collaborative text editor designed to address common challenges in knowledge building and sharing.

Features

😌 Simple collaborative editing without the formatting complexity of markdown

🔌 Offline? No problem: keep writing, and your edits will sync when you are back online

💅 Create clean documents with limited but beautiful formatting options and focus on content

🧱 Built for productivity (markdown support, many block types, slash commands, keyboard shortcuts).

✨ Save time thanks to our AI actions (generate, sum up, correct, translate)