Netflix's ML Engineering Experience through Metaflow and Spin

Netflix published an excellent in-depth article on the day-to-day experience of ML engineers at Netflix: how they develop and iterate on models with the company's technology stack.

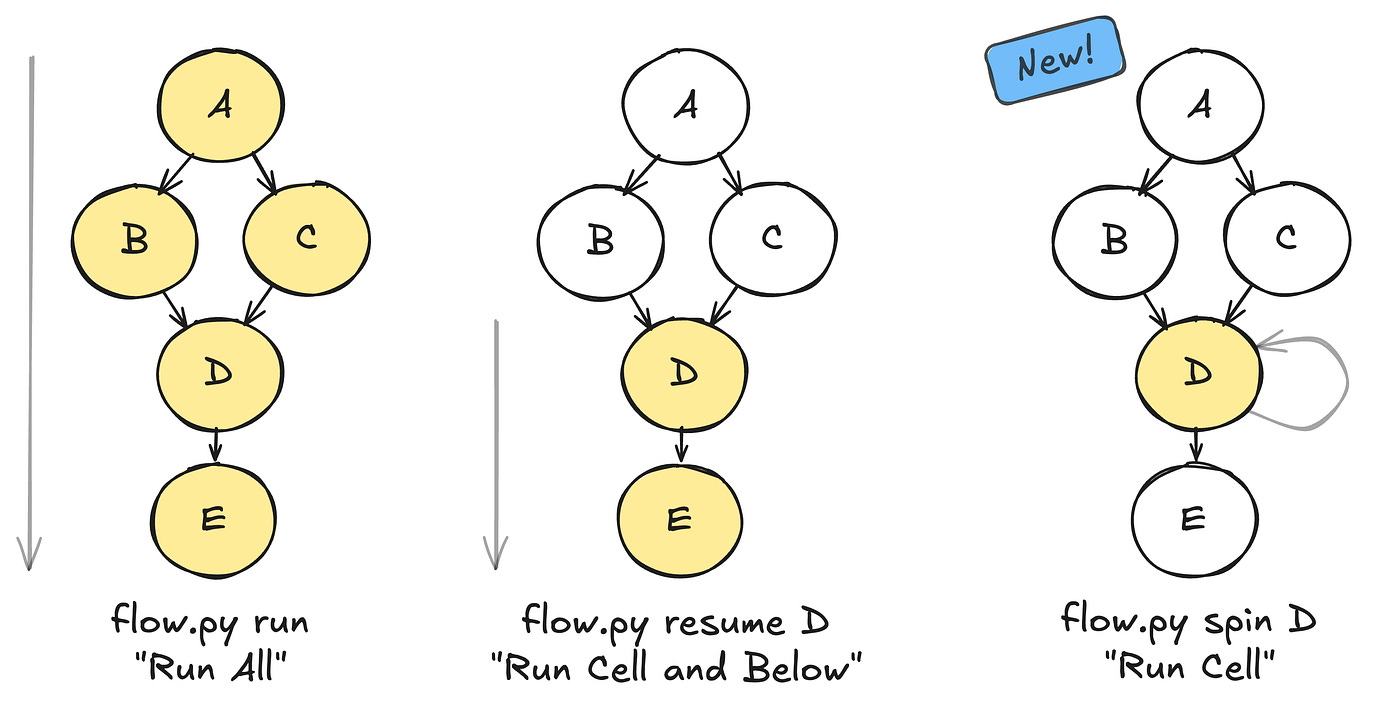

That stack centers on Metaflow, an open-source framework that lets engineers build, iterate on, and deploy models. The article focuses on engineering productivity and on the design principles behind Netflix’s infrastructure tooling, which turns the complex world of machine learning operations into something much more accessible, especially for junior engineers.

Netflix’s approach recognizes a fundamental problem in modern ML development: ML engineers need the freedom to experiment and iterate rapidly, yet their workflows must eventually integrate with production systems (ideally without issues!). In traditional software engineering, explicit control flow and deterministic execution are the norm; machine learning development operates in fundamentally different territory, where control flow and execution are anything but deterministic. Models require experimental iteration with visible state, versioned and persisted artifacts to support reproducibility and observability, and the ability to test individual components without re-executing entire pipelines. Metaflow addresses this problem with abstractions that manage the complete lifecycle of ML projects, from rapid local prototyping in notebooks to reliable, maintainable production deployments that scale across cloud infrastructure.

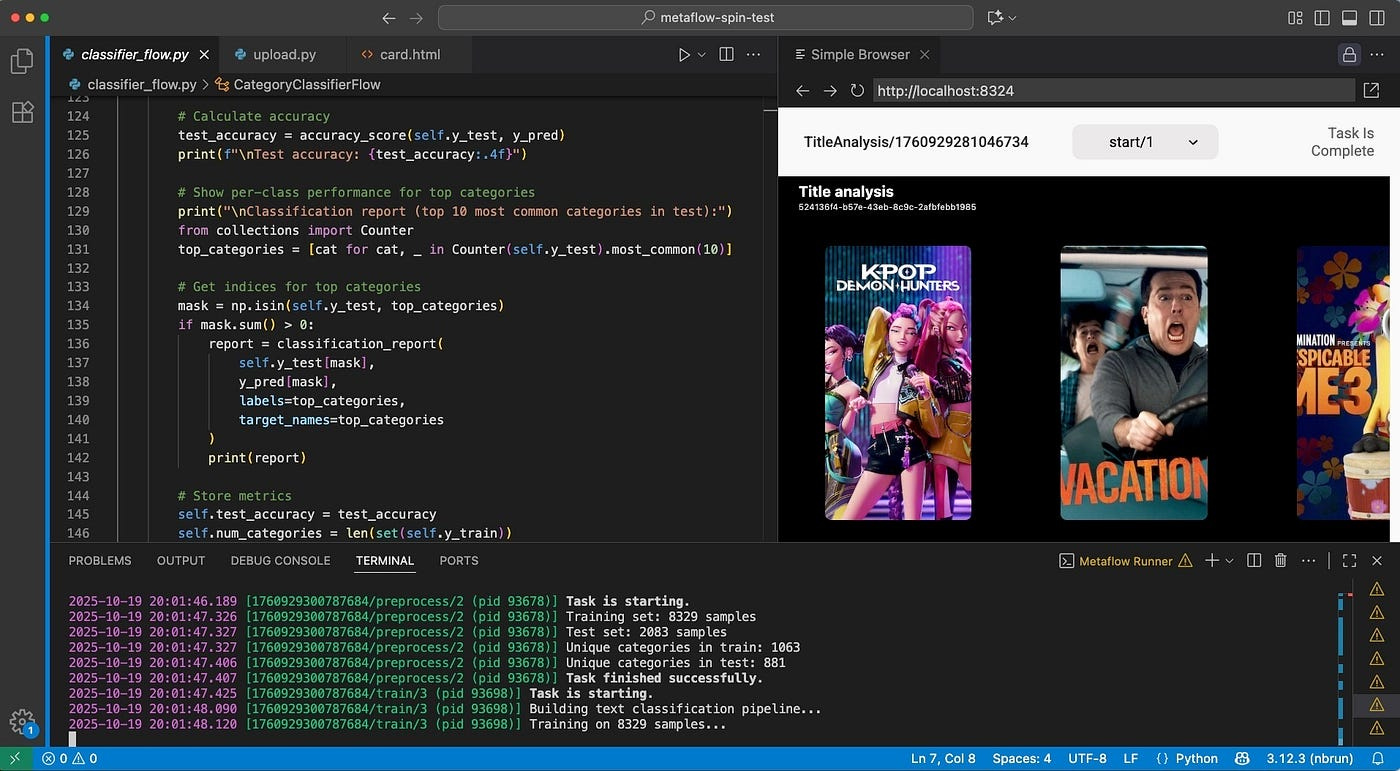

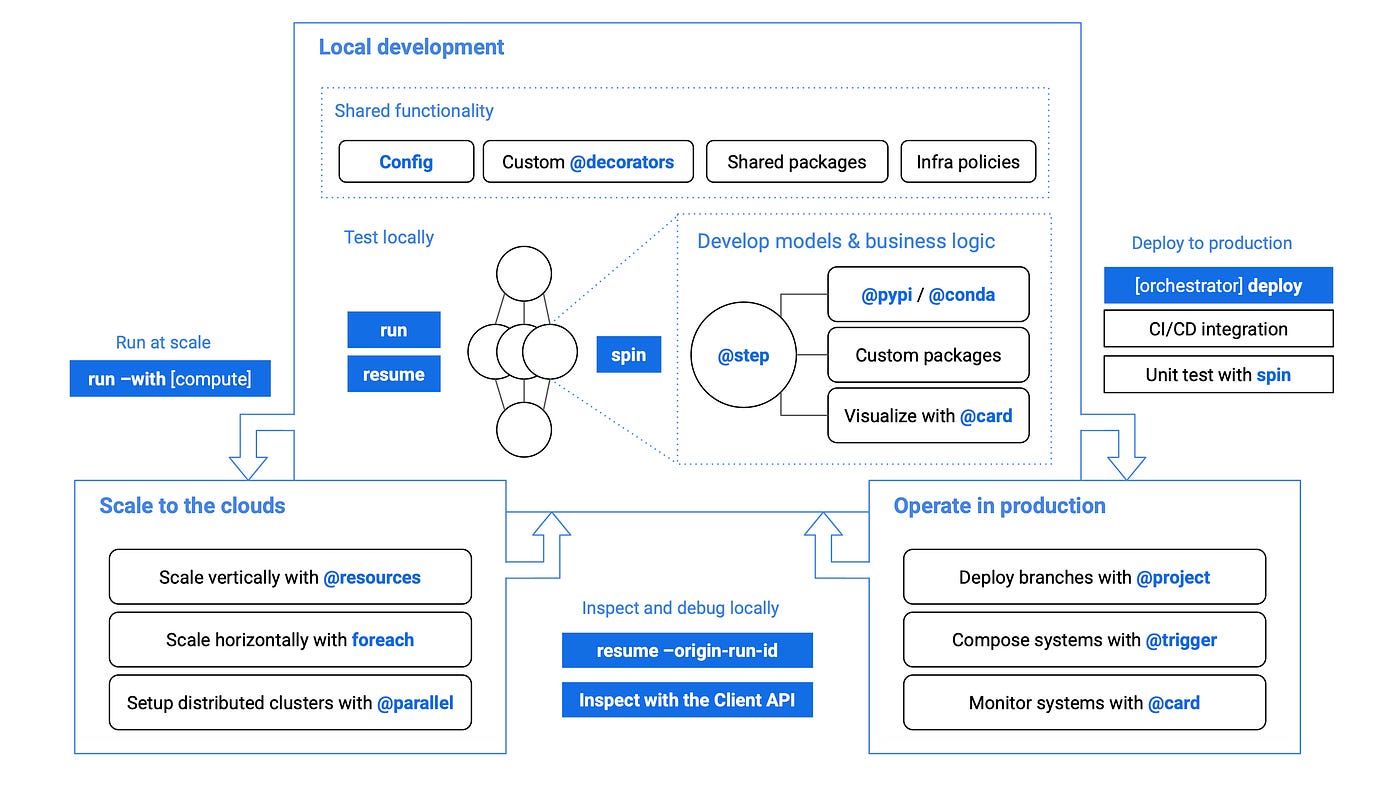

Metaflow code is structured as a workflow, similar to Airflow, and each Metaflow `@step` serves as a checkpoint boundary. At the end of every step, Metaflow automatically persists all instance variables as artifacts, so execution can resume from that point onward.
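To make the checkpoint idea concrete, here is a toy, stdlib-only model of it. It is not Metaflow's implementation or API: `ToyFlow`, `run`, and the one-pickle-file-per-step layout are hypothetical. Each step mutates `self`, the runner pickles the instance's `__dict__` after every step, and `resume_from` restores the artifacts of the preceding step and re-executes only from that step onward.

```python
import pickle
from pathlib import Path
from tempfile import TemporaryDirectory
from typing import Optional


class ToyFlow:
    """Hypothetical linear flow; stands in for a Metaflow FlowSpec."""

    steps = ["start", "train", "end"]

    def start(self):
        self.data = [1.0, 2.0, 3.0, 4.0]

    def train(self):
        # "Training" is just a mean here, to keep the sketch tiny.
        self.model = sum(self.data) / len(self.data)

    def end(self):
        self.report = f"model={self.model}"


def run(flow_cls, store: Path, resume_from: Optional[str] = None):
    """Run the steps in order, checkpointing instance variables after each one."""
    flow = flow_cls()
    first = 0
    if resume_from is not None:
        first = flow_cls.steps.index(resume_from)
        if first > 0:
            # Restore the artifacts written by the step just before resume_from.
            parent = flow_cls.steps[first - 1]
            flow.__dict__.update(pickle.loads((store / f"{parent}.pkl").read_bytes()))
    for name in flow_cls.steps[first:]:
        getattr(flow, name)()
        # Checkpoint boundary: persist every instance variable as an artifact.
        (store / f"{name}.pkl").write_bytes(pickle.dumps(flow.__dict__))
    return flow


if __name__ == "__main__":
    with TemporaryDirectory() as tmp:
        store = Path(tmp)
        run(ToyFlow, store)                                 # full run
        resumed = run(ToyFlow, store, resume_from="train")  # skips "start"
        print(resumed.report)                               # model=2.5
```

A real Metaflow flow subclasses `FlowSpec`, declares transitions with `self.next(...)`, and is launched from the command line; the toy keeps only the "persist after every step, resume from any step" behavior.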

However, this leaves a reproducibility gap in production: Metaflow cannot take a workflow to production in the same way it is run during experimentation.

To close that gap, Netflix recently shipped a feature called Spin, which addresses the pain point of turning experimentation code into production-ready code. Spin lets engineers develop Metaflow workflows incrementally, step by step, with fast local iterations: it offers a notebook-like experience while still producing production-ready systems that scale across distributed infrastructure. The workflow is incremental: write a stub flow with `start` and `end` steps, run it once to generate initial inputs, then use `python myflow.py spin somestep` to test changes to a single step quickly, using artifacts from previous runs.

Each step can be tested independently with appropriate input data, without orchestration metadata or globally persisted artifacts, so engineers can eyeball logs and optionally inspect output artifacts locally. Once a step works correctly, engineers run the complete flow normally to capture comprehensive metadata and artifacts. This mirrors the workflow patterns that made Jupyter notebooks successful: visual output and analysis attached to Python code, and incremental development with cached state.
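Here is a hypothetical stdlib sketch of that idea, not Spin's implementation: `previous_run`, `train`, and `spin` are made-up names, and artifacts are plain dictionaries. The key property is that the step runs against a copy of its parent's artifacts from an earlier run, and its outputs are returned for local inspection instead of being written back.

```python
import copy
from typing import Any, Callable, Dict

Artifacts = Dict[str, Any]

# Hypothetical artifacts left behind by an earlier full run, keyed by step name.
previous_run: Dict[str, Artifacts] = {"start": {"data": [1.0, 2.0, 3.0, 4.0]}}


def train(state: Artifacts) -> None:
    """The step under development; edit it and spin it again."""
    state["model"] = sum(state["data"]) / len(state["data"])


def spin(step: Callable[[Artifacts], None], parent: str,
         run: Dict[str, Artifacts]) -> Artifacts:
    """Run one step on a deep copy of its parent's artifacts.

    The result is returned for local inspection only; `run` is never modified,
    so nothing is persisted globally.
    """
    state = copy.deepcopy(run[parent])
    step(state)
    return state


out = spin(train, "start", previous_run)
print(out["model"])   # 2.5
print(previous_run)   # {'start': {'data': [1.0, 2.0, 3.0, 4.0]}}
```

Because the earlier run is never mutated, the same cached inputs can be reused for every edit of `train`, which is what makes the loop feel like re-running a notebook cell.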

Metaflow can also be used with Claude, which can construct complete ML workflows using Spin, detecting and fixing issues with less human intervention and faster completion times than traditional manual development. This creates a “human in the loop” experience, with the agent augmenting engineers by handling routine pattern application and execution cycles.

With Spin, Claude can write tests from input/output pairs, run them to confirm they fail, implement code that makes them pass, and iterate until every test passes, all without human prompting between cycles. This test-driven approach does more than accelerate individual development: it creates self-validating systems that resist common ML pitfalls such as overfitting or architectural inconsistencies within the model.
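The red-green loop can be sketched without any agent at all. In this hypothetical stdlib example (`PAIRS`, `failing_tests`, `stub`, and `min_max_scale` are made up for illustration), captured input/output pairs become tests, a stub is run first to confirm the tests fail, and an implementation is then checked until no failures remain. In the workflow the article describes, Claude would write and revise the implementation between runs; here both versions are simply written out by hand.

```python
from typing import Callable, List, Tuple

Vector = List[float]

# Hypothetical input/output pairs captured from a preprocessing step.
PAIRS: List[Tuple[Vector, Vector]] = [
    ([0.0, 5.0, 10.0], [0.0, 0.5, 1.0]),
    ([2.0, 4.0], [0.0, 1.0]),
]


def failing_tests(fn: Callable[[Vector], Vector]) -> List[str]:
    """Treat each pair as a test case and describe every case that fails."""
    failures = []
    for given, expected in PAIRS:
        try:
            got = fn(given)
        except Exception as exc:  # a crash counts as a failure
            got = repr(exc)
        if got != expected:
            failures.append(f"{given}: expected {expected}, got {got}")
    return failures


def stub(xs: Vector) -> Vector:
    raise NotImplementedError  # red: the tests must fail before any code exists


def min_max_scale(xs: Vector) -> Vector:
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) for x in xs]  # green: candidate implementation


for candidate in (stub, min_max_scale):
    problems = failing_tests(candidate)
    print(candidate.__name__, "PASS" if not problems else f"FAIL ({len(problems)})")
# stub FAIL (2)
# min_max_scale PASS
```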

This framework currently powers over 3,000 ML projects at Netflix, executing hundreds of millions of data-intensive compute jobs annually while processing petabytes of data.

Libraries

Helion is a Python-embedded domain-specific language (DSL) for authoring machine learning kernels, designed to compile down to Triton, a performant backend for programming GPUs and other devices. Helion aims to raise the level of abstraction compared to Triton, making it easier to write correct and efficient kernels while enabling more automation in the autotuning process.
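Helion kernels operate on PyTorch tensors and need a GPU to run, so the following is only a CPU-side analogy in plain Python, not Helion code: `tiled_add` and `autotune` are hypothetical. It shows the two ideas the description mentions: the kernel body is expressed per tile, and an autotuner picks a configuration (here just the tile size) by measuring candidates instead of having the author hard-code it. A real autotuner searches a much larger configuration space on the target hardware.

```python
import time
from typing import List, Sequence, Tuple


def tiled_add(x: Sequence[float], y: Sequence[float], block: int) -> List[float]:
    """Element-wise add, written as a loop over tiles of `block` indices."""
    out = [0.0] * len(x)
    for begin in range(0, len(x), block):  # each iteration handles one tile
        end = min(begin + block, len(x))
        out[begin:end] = [a + b for a, b in zip(x[begin:end], y[begin:end])]
    return out


def autotune(x: Sequence[float], y: Sequence[float],
             candidates: Tuple[int, ...] = (64, 256, 1024), repeats: int = 3) -> int:
    """Time every candidate block size and return the fastest one."""
    timings = {}
    for block in candidates:
        start = time.perf_counter()
        for _ in range(repeats):
            tiled_add(x, y, block)
        timings[block] = time.perf_counter() - start
    return min(timings, key=timings.get)


x = [float(i) for i in range(10_000)]
y = [2.0] * len(x)
best = autotune(x, y)
out = tiled_add(x, y, best)
print(best in (64, 256, 1024), out[:3])  # True [2.0, 3.0, 4.0]
```

The result does not depend on the chosen tile size, only the speed does; that separation is what lets a compiler stack tune configurations automatically.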

OpenPCC is an open-source framework for provably private AI inference, inspired by Apple’s Private Cloud Compute but fully open, auditable, and deployable on your own infrastructure. It lets anyone run open or custom AI models without exposing prompts, outputs, or logs, enforcing privacy through encrypted streaming, hardware attestation, and unlinkable requests.

DataChain is a Python-based AI-data warehouse for transforming and analyzing unstructured data like images, audio, videos, text and PDFs. It integrates with external storage (e.g. S3) to process data efficiently without data duplication and manages metadata in an internal database for easy and efficient querying.

ETL. Pythonic framework for describing and running unstructured data transformations and enrichments, applying models to data, including LLMs.

Analytics. A DataChain dataset is a table that combines all the information about data objects in one place, and DataChain provides a dataframe-like API and a vectorized engine for running analytics on these tables at scale.

Versioning. DataChain doesn’t store, move, or copy the underlying data (unlike DVC). The ideal use case is a bucket holding thousands or millions of images, videos, audio files, or PDFs.

Incremental Processing. DataChain’s delta and retry features allow for efficient processing workflows:

Delta Processing: Process only new or changed files/records

Retry Processing: Automatically reprocess records with errors or missing results

Combined Approach: Process new data and fix errors in a single pipeline
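DataChain exposes delta and retry through its own API, which is not reproduced here. The stdlib sketch below only illustrates the bookkeeping behind the combined approach, under stated assumptions: `incremental_run`, the `Record` tuple, and change detection by SHA-256 content hash are all hypothetical choices, and an in-memory dict stands in for a bucket listing. A file is reprocessed if it is new or changed (delta) or if its previous attempt errored or produced no result (retry).

```python
import hashlib
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical per-file record: (content hash, result or None, error or None).
Record = Tuple[str, Optional[str], Optional[str]]


def incremental_run(files: Dict[str, bytes], previous: Dict[str, Record],
                    process: Callable[[bytes], str]) -> Tuple[Dict[str, Record], List[str]]:
    """Process new/changed files (delta) and earlier failures (retry) in one pass."""
    state: Dict[str, Record] = {}
    touched: List[str] = []
    for path, content in files.items():
        digest = hashlib.sha256(content).hexdigest()
        old = previous.get(path)
        is_delta = old is None or old[0] != digest  # new or changed file
        needs_retry = old is not None and (old[1] is None or old[2] is not None)
        if not (is_delta or needs_retry):
            state[path] = old  # reuse the earlier result untouched
            continue
        touched.append(path)
        try:
            state[path] = (digest, process(content), None)
        except Exception as exc:  # record the error so a later run retries it
            state[path] = (digest, None, repr(exc))
    return state, touched


def v1(content: bytes) -> str:
    return content.decode("ascii").upper()  # buggy: rejects non-ASCII text


def v2(content: bytes) -> str:
    return content.decode("utf-8").upper()  # fixed version


files = {"a.txt": b"cat", "b.txt": "café".encode("utf-8")}
state1, run1 = incremental_run(files, {}, v1)      # b.txt fails with UnicodeDecodeError
files["c.txt"] = b"eel"                            # a new file lands in the bucket
state2, run2 = incremental_run(files, state1, v2)  # retries b.txt, processes c.txt
print(run1, run2)          # ['a.txt', 'b.txt'] ['b.txt', 'c.txt']
print(state2["b.txt"][1])  # CAFÉ
```

The second run skips `a.txt`, fixes the earlier failure, and picks up the new file in a single pass.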

pgmpy is a Python library for causal and probabilistic modeling using graphical models. It provides a uniform API for building, learning, and analyzing models, such as Bayesian Networks, Dynamic Bayesian Networks, Directed Acyclic Graphs (DAGs), and Structural Equation Models (SEMs). By integrating tools from both probabilistic inference and causal inference, pgmpy enables users to seamlessly transition between predictive and causal analyses.
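The difference between predictive and causal queries is easiest to see on a three-node graph with a confounder. pgmpy provides this through its model and inference classes; the stdlib sketch below does the same arithmetic by hand and does not use pgmpy's API. The graph Z → X, Z → Y, X → Y and all probabilities are hypothetical. Conditioning on X=1 also shifts beliefs about Z, while the intervention do(X=1) cuts the Z → X edge, so Y is averaged over the prior of Z (backdoor adjustment).

```python
from itertools import product

# Hypothetical CPDs for a confounded graph Z -> X, Z -> Y, X -> Y (all binary).
P_Z = {1: 0.5, 0: 0.5}
P_X1_GIVEN_Z = {1: 0.8, 0: 0.2}             # P(X=1 | Z=z)
P_Y1_GIVEN_XZ = {(1, 1): 0.9, (1, 0): 0.5,  # P(Y=1 | X=x, Z=z)
                 (0, 1): 0.7, (0, 0): 0.1}


def bern(p_one: float, value: int) -> float:
    """Probability of a binary value given P(value=1)."""
    return p_one if value == 1 else 1.0 - p_one


def joint(z: int, x: int, y: int) -> float:
    """Factorised joint P(z, x, y) = P(z) P(x|z) P(y|x,z)."""
    return P_Z[z] * bern(P_X1_GIVEN_Z[z], x) * bern(P_Y1_GIVEN_XZ[(x, z)], y)


def p_y1_given_x(x: int) -> float:
    """Observational query P(Y=1 | X=x) by enumerating the joint."""
    num = sum(joint(z, x, 1) for z in (0, 1))
    den = sum(joint(z, x, y) for z, y in product((0, 1), repeat=2))
    return num / den


def p_y1_do_x(x: int) -> float:
    """Interventional query P(Y=1 | do(X=x)) via backdoor adjustment on Z."""
    return sum(P_Z[z] * P_Y1_GIVEN_XZ[(x, z)] for z in (0, 1))


print(f"P(Y=1 | X=1)     = {p_y1_given_x(1):.2f}")  # 0.82
print(f"P(Y=1 | do(X=1)) = {p_y1_do_x(1):.2f}")     # 0.70
```

The gap between 0.82 and 0.70 is exactly the confounding through Z: a purely predictive model would overstate the effect of setting X.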

Below The Fold

ClickHouse® is an open-source column-oriented database management system that allows generating analytical data reports in real time.
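The difference between row and column layouts shows up clearly on a toy table. This stdlib sketch is not ClickHouse code or its storage format; the table and function names are hypothetical, and in Python both layouts are just lists of object references, so it shows only the access pattern. In a columnar engine, that pattern is what lets an aggregation read a single column's data (and compress each column separately) instead of scanning whole rows.

```python
from typing import Dict, List

# A tiny hypothetical events table, stored row by row...
rows: List[Dict[str, object]] = [
    {"event": "play", "country": "US", "watch_seconds": 120},
    {"event": "pause", "country": "BR", "watch_seconds": 30},
    {"event": "play", "country": "US", "watch_seconds": 300},
]

# ...and the same data stored column by column.
columns: Dict[str, list] = {name: [row[name] for row in rows] for name in rows[0]}


def total_watch_rows(table: List[Dict[str, object]]) -> int:
    # Row layout: every record is visited even though only one field is needed.
    return sum(row["watch_seconds"] for row in table)


def total_watch_columns(cols: Dict[str, list]) -> int:
    # Column layout: the query scans a single list and ignores the others.
    return sum(cols["watch_seconds"])


print(total_watch_rows(rows), total_watch_columns(columns))  # 450 450
```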

Magnitude uses vision AI to enable you to control your browser with natural language.

🧭 Navigate - Sees and understands any interface to plan out actions

🖱️ Interact - Executes precise actions using mouse and keyboard

🔍 Extract - Intelligently extracts useful structured data

✅ Verify - Built-in test runner with powerful visual assertions

You can use it to automate tasks on the web, integrate between apps without APIs, extract data, test your web apps, or as a building block for your own browser agents.