Monarch Matrices(M2) instead of Transformers?

Congress Wants Tech Companies to Pay Up for AI Training Data

Articles

Stanford wrote a blog post on Monarch Mixer(M2) that is promising to replace Transformers and improve the scaling properties of Transformers along two axes; sequence length and model dimension.

Some of the other properties of M2 are:

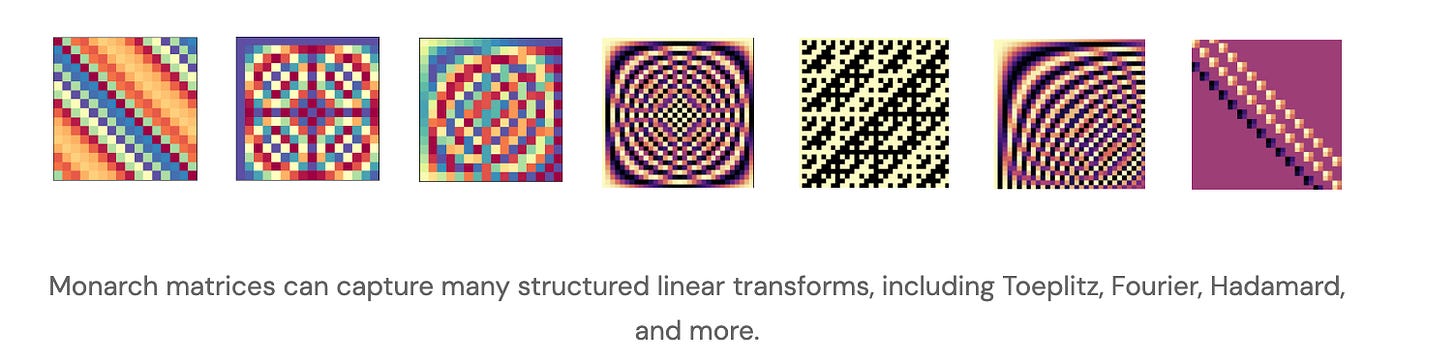

It can efficiently capture all sorts of structured linear transforms, including Toeplitz, Fourier, Hadamard, and more. This means that it can learn a wide range of relationships between different parts of the input sequence.

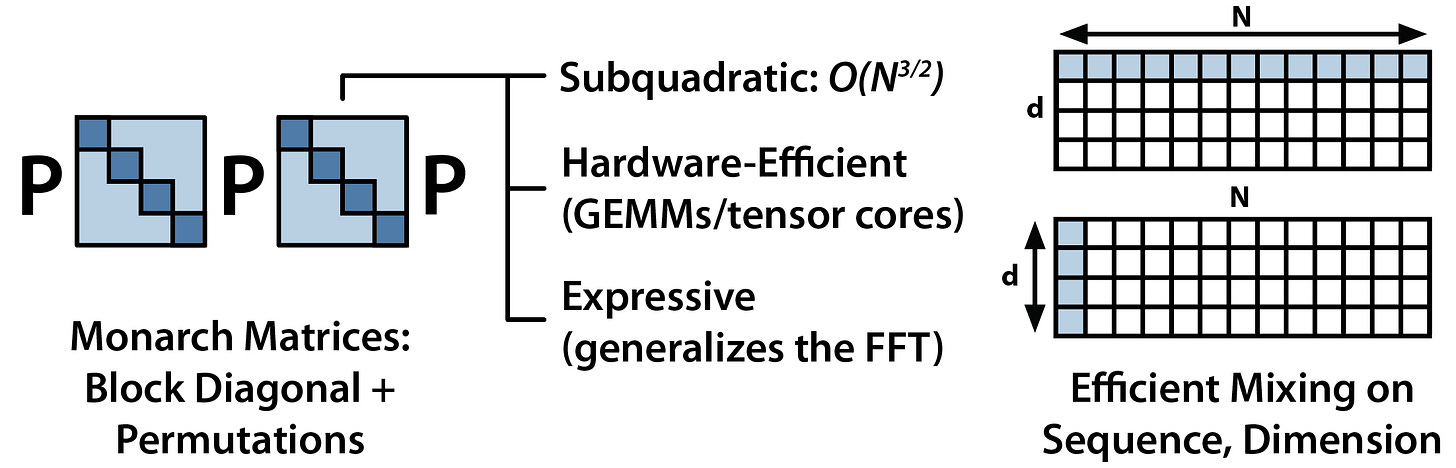

It replaces attention by using Monarch matrices to construct a gated long convolution layer. Monarch matrices, which are a family of block-sparse matrices defined as block-diagonal matrices, interleaved with permutations. This makes it more efficient than traditional attention mechanisms, which can be computationally expensive for long sequences.

It replaces the MLPs by replacing the dense matrices in the MLP with block-diagonal matrices. This also makes it more efficient, as it reduces the number of parameters that need to be learned.

In M2, Monarch matrices are used to replace both attention and MLPs in Transformers. This makes the model look similar to MoE models, without learned routing. Their basic idea is to replace the major elements of a Transformer with Monarch matrices — which are a class of structured matrices that generalize the FFT and are sub-quadratic, hardware-efficient, and expressive. In Monarch Mixer, they use layers built up from Monarch matrices to do both mixing across the sequence (replacing the Attention operation) and mixing across the model dimension (replacing the dense MLP).

The code is available in GitHub.

Alibaba has released a new model that allows you to “replace anything”. The code will be released in GitHub, but the model is available for you to play with in HuggingFace.

I generally do not cover the popular news, but given I have written the moat for the Foundation Models:

However, one dimension I am very clear is the data dimension to be moat for the foundation models. This unfortunately is not very different than traditional software as the “reads” of database becomes much more important than “writes” in the database. Reads in this case on how the user is using the app can become more important than what the app is actually useful for. In here, I want to introduce a concept of ML Data Growth Cycle

…

For example, Quora/Reddit like online forums generally have a large number of questions and answers and people generally come to find the answers through the website. However, users can also vote and like different answers which can be used to train an ML model on what a good answer could look like. This makes the data collected much more useful from the users along with the answer as users can make the app to be more useful. Another good example is Stackoverflow where a lot of software engineers can ask a lot of questions and there are a good number of answers to these questions.

I wanted to cover the Wired article titled: Congress Wants Tech Companies to Pay Up for AI Training Data, TLDR is that using content in the model training without paying to the content owner is not fair. Fair use did not capture the data usage in these models and model owners will have to pay a price to the content owner. This would of course benefit large companies and less so to the startups, but this is a right step in the right direction as the data has to be a large dimension of these Foundation Models and companies will create different moats in the data dimension even though model architectures can become commoditized/shared over time.

Google wrote a rather interesting article to help identifying errors and correcting them through what they call backtracking, the technique involves the following steps:

Generate a Chain-of-Thought (CoT) trace, which is a record of the steps the LLM takes to solve a problem.

Identify the location of the first logical mistake in the CoT trace. This can be done using a classifier or by manually labeling the data.

Re-generate the mistake step at a higher temperature. This means that the LLM is more likely to generate different and creative outputs.

Select a new output that is different from the original mistake step.

Use the new step to generate the rest of the CoT trace.

This can be effective in correcting mistakes made by LLMs, even if the LLMs are not very good at finding the mistakes themselves. They also claim that the technique can generalize to tasks that the LLMs have never seen before, which is an emergent behavior of the technique.

Libraries

JaxIRL contains JAX implementation of algorithms for inverse reinforcement learning (IRL). Inverse RL is an online approach to imitation learning where we try to extract a reward function that makes the expert optimal. IRL doesn't suffer from compounding errors (like behavioural cloning) and doesn't need expert actions to train (only example trajectories of states). Depending on the environment and hyperparameters, our implementation is about 🔥 100x 🔥 faster than standard IRL implementations in PyTorch (e.g. 3.5 minutes to train a single hopper agent ⚡). By running multiple agents in parallel, you can be even faster! (e.g. 200 walker agents can be trained in ~400 minutes on 1 GPU! That's 2 minutes per agent ⚡⚡).

LP-MusicCaps is a implementation of LP-MusicCaps: LLM-Based Pseudo Music Captioning. This project aims to generate captions for music. 1) Tag-to-Caption: Using existing tags, We leverage the power of OpenAI's GPT-3.5 Turbo API to generate high-quality and contextually relevant captions based on music tag. 2) Audio-to-Caption: Using music-audio and pseudo caption pairs, we train a cross-model encoder-decoder model for end-to-end music captioning.

Caption-Upsampling implements the idea of "caption upsampling" from DALL-E 3 with Zephyr-7B and gathers results with SDXL.

Llama-recipes is a companion to the Llama 2 model. The goal of this repository is to provide examples to quickly get started with fine-tuning for domain adaptation and how to run inference for the fine-tuned models. For ease of use, the examples use Hugging Face converted versions of the models. See steps for conversion of the model here.

MultiresConv is a convolutional sequence modeling layer that is simple to implement (15 lines of PyTorch code using standard convolution and linear operators) and requires at most O(N log N) time and memory.

The key component of the layer is a multiresolution convolution operation (MultiresConv, left in the figure) that mimics the computational structure of wavelet-based multiresolution analysis.

Motif elicits the preferences of a Large Language Model (LLM) on pairs of captioned observations from a dataset of interactions collected on NetHack. Automatically, it distills the LLM's common sense into a reward function that is used to train agents with reinforcement learning.

To facilitate comparisons, we provide training curves in the pickle file motif_results.pkl, containing a dictionary with tasks as keys. For each task, we provide a list of timesteps and average returns for Motif and baselines, for multiple seeds.

As illustrated in the following figure, Motif features three phases:

Dataset annotation: use an LLM's preferences on pairs of captioned observations to create an annotated dataset of pairs;

Reward training: train a reward function using the annotated dataset of pairs and the preferences from the LLM as supervision signal;

Reinforcement learning training: train an agent using Motif's reward function.

Data and Network Introspection toolkit DNIKit, an open source Python framework for analyzing machine learning models and datasets. DNIKit contains a collection of algorithms that all operate on intermediate network responses, providing a unique understanding of how the network perceives data throughout the different stages of computation.

With DNIKit, you can:

create a comprehensive dataset analysis report

find dataset samples that are near duplicates of each other

discover rare data samples, annotation errors, or model biases

compress networks by removing highly correlated neurons

detect inactive units in a model

NetHack for the win! (Excellent post, as always.)