What might be coming in 2025 for LLMs

Google Research 2024 overview

Happy new Years!

After 2023 was a breakthrough year for LLMs, 2024 was another excellent year that built upon 2023’s breakthrough year; we got a lot of things in this year such as reasoning, multi-modal, long context(million of tokens) and voice based interactions in real-time!

Different companies released their model upgrades that can do a variety of different capabilities such as reasoning, multi-modal, video generations in this year. Above highlights is taken from Artificial Analysis Leaderboard and it shows that each model has different types of strengths as well as advantages over others and some of the models are leading the model performance(in terms of quality) where certain other models can provide better cost effective performance.

This year, I am mixing it up a bit and will do some predictions as an “outsider” for LLM domain and the industry and want to share some of those predictions, I will report by end of the year how many I got it right and how many wrong. Here are 7 predictions for 2025:

Some LLM/FM Predictions

Not one FM to rule them all: I do not think we will have a single FM model to rule the other models; that is one or two models that will be significantly be better than all of the other models(closed/open source). I think we will have many models that will be similar to each other in terms of quality, but will have their distinctive advantages in various shape or forms.

This would result in more verticalization albeit the name of “Foundation Models”. We will have multiple FMs that will have their purpose for a variety of reasons.

This would result in companies finding other ways to create moats and lock-in for their models in data domain or slightly different use cases for their specific applications.

I think data will play a big role for moats and lock-ins for these models. Microsoft/Azure has a natural advantage due to their Office suit and GitHub for end users, but for enterprise data companies and their vendors(Databricks, Mongo) can also provide solutions around open-source models probably.

More companies will focus on data: Given there is a sentiment of data becoming more and more a bottleneck in making the models larger and bigger, we will see a lot more companies that will focus on data generation(synthetic and otherwise).

The above model scaling will have ramifications to cool down the model scaling and demand for larger and larger models. This might decrease the slope of the growth of the HW vendors(still increase, but in a lower derivative function).

Augmentation, translation and other types of data enrichments methods will be much more important to improve the models as they will need more and more data. High-resource languages interoperable to each other can increase the availability of information and some of these languages might have information that other languages may not have due to historical reasons.

Data scaling might bring a lot more emerging capabilities: Focus on data scaling especially in programming domain can unlock a lot of new capabilities for the models. So called “emerging abilities” can appear a lot more frequently in the synthetic dataset world than the existing natural world observations.

This is I think can also create the next breakthrough as in history, we are always limited by the physical world and its simulations to understand the law of physics and experiment.

In this new world, one can builds worlds, run simulation and learn against these simulations; it might pave the way for a lot more emerging abilities as these territories can be explored very efficiently and through functional verification.

I am most excited about this prediction as it can really bring new capabilities and creativity for the models that they are trained for a given domain.

Usage of the models will shape their distinctive advantages: Each model will have their distinctive advantages through their most used feature or features within a larger app ecosystem and model owners will learn and improve the models in that dimension a lot more and better. Because the data is the bottleneck element for the model development, and reads(usage of the application) will determine the writes(for model improvements), I expect the company that has a lot about app usage is in a better place to improve the model in that dimension a lot better.

If model is being used for image generation, the company that has commercial success on image generation will have the best image generation ability.

If the model is really good at summarizing long articles, books, that model will have more distinctive advantage of other models and will draw a lot more usage for this model.

I expect a lot more “orchestration” apps like perplexity.ai that can use a variety of models under the hood to pick the best model for the job. This also will provide the best experience to the user and less worry about which model to choose from.

I expect companies that serve inference optimization solutions for different models also will do well in this year as they need to serve various different models and different models might have different inference optimizations techniques that can optimize the most for their models.

More companies will adapt in-house LLMs through adoption of open-source LLMs: Companies will use off-the shelf open source models like Llama to fine-tune their datasets and respond to some of their use cases through this mechanism.

This is perhaps not surprising as the open-source models are very close to their closed source equivalents in terms of model performance and accuracy. Companies that will use the open-source models for their knowledge base or wiki like applications naturally. This allows them to not share their data with other third party LLM providers and still have the flexibility on the fine-tuning for their applications.

This would require for companies to build training capabilities for their fine-tuning capabilities and inference capacity to serve these use cases well.

Small and Edge models will be significantly better: I do not think industry will go in this direction as server side models will be still significantly better. However, I do think that small models that can be deployed to desktop, phones as well as edge devices will start getting more traction.

As the bottleneck is the data, I expect companies to extract better performance and model accuracy for a given model size as they improve the data dimension. This would result in better and more efficiency in smaller models.

TPUs can be a commercial success?: My last and dark horse prediction one that Google will make an announcement to make their TPU available not just through GCP, but also sell in terms of pure hardware(NVIDIA/AMD play) and even darker horse prediction: release a separate software stack similar to CUDA(based on Jax).

I have no insider information, but it comes to me surprise that TPUs that take a such a large workload of Google(probably second largest in the world after NVIDIA GPUs) and allow them to build and iterate on the series of Gemini models would leave the competition completely on the table with NVIDIA/AMD.

Even though TPU chips are not competitive in terms of capability-wise with NVIDIA GPUs, they are built ground-up for large AI workloads for Google and can provide better cost efficiency than NVIDIA due to Google’s strong focus on system design, integration, communication and reliability improvements that they have made over the years.

What are your thoughts on these predictions, do you have any?, leave a comment below!

Google research wrote a comprehensive blog post on 2024 and here are 7 themes that a great research that came from Google:

1. Machine Learning and AI Foundations

Large Language Models (LLMs) and Efficiency

Google made substantial progress in improving the efficiency and capabilities of machine learning models, particularly large language models.

Cascading Models

Research on cascades presented a method for leveraging smaller models for "easy" outputs, further enhancing efficiency in ML applications. This approach allows for more judicious use of computational resources, potentially reducing energy consumption and improving response times for a wide range of AI-powered services

Time Series Forecasting

Google introduced TimesFM, a decoder-only foundation model for time series forecasting. This 200-million parameter model was pre-trained on 100 billion real-world time-points, largely using data from Google Trends and Wikipedia pageviews. TimesFM outperformed even powerful deep-learning models that were trained specifically on target time-series, marking a significant advancement in predictive analytics

TimesFM has the following capabilities:

Zero-shot forecasting

Ability to handle variable context and horizon

Potential applications in retail, finance, and healthcare sectors

Quantum Computing

To enhance the reliability and usefulness of quantum computing at scale, Google introduced AlphaQubit, a neural network-based decoder developed in collaboration with Google DeepMind. Published in Nature, AlphaQubit identifies errors with state-of-the-art accuracy, potentially accelerating the development of practical quantum computing systems

2. AI in Gaming and Simulation

GameNGen

Google's GameNGen model demonstrated the ability to simulate complex video games in real-time with high quality using a neural model. This breakthrough could have far-reaching implications for game development, virtual reality, and simulation technologies across various industries

Some of the GameNGen capabilities are:

Based on a modified version of Stable Diffusion v1.4

Capable of simulating Doom at 20 frames per second

Trained using reinforcement learning agents

Potential to make game development more accessible and less costly

3. Healthcare and Medical AI

Google Research made substantial strides in applying AI to healthcare, potentially democratizing access to high-quality, personalized care.

MedLM and Search for Healthcare

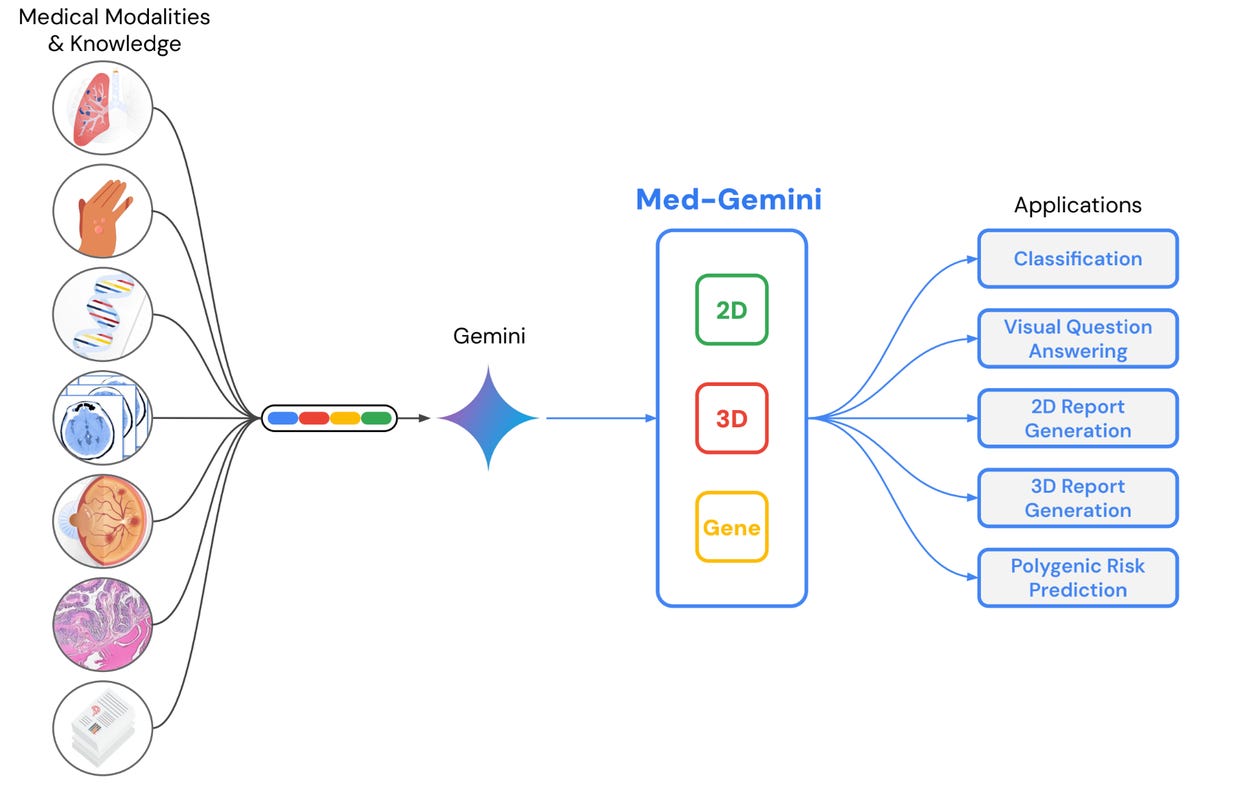

Fine-tuned models like MedLM and Search for Healthcare on Google Cloud Platform combine Gemini's multimodal and reasoning abilities with training on de-identified medical data. These models demonstrated state-of-the-art capabilities in interpreting 3D scans and generating radiology reports, marking a significant advancement in medical imaging and diagnostics

Personal Health LLM

Google introduced Personal Health LLM, capable of analyzing physiological data from wearable devices and sensors represents a step towards more personalized health insights. This model aims to provide Fitbit and Pixel users with tailored advice on questions related to their well-being, potentially transforming how individuals manage their health

Articulate Medical Intelligence Explorer (AMIE)

AMIE is an experimental system optimized for diagnostic reasoning and conversations. Its ability to ask intelligent questions based on a person's clinical history to help derive a diagnosis could significantly enhance the diagnostic process, especially in subspecialist medical domains like breast cancer

Genomics Advancements

Google made significant progress in genomics research:

REGLE: An unsupervised deep learning model that helps researchers use high-dimensional clinical data at scale to discover associations with genetic variants.

DeepVariant models: As part of a collaboration on Personalized Pangenome References, new open-source models reduced errors by 30% when analyzing genomes of diverse ancestries

AlphaFold 3

In May 2024, Google announced AlphaFold 3, an advanced model for predicting the structure and interactions of life's molecules. This breakthrough holds significant promise for advancing scientific understanding and medical research

4. Scientific Breakthroughs

Google Research's computational capabilities enabled significant scientific advancements in 2024.

Connectomics

Marking 10 years of connectomics research, Google, in partnership with Harvard, published the largest ever AI-assisted reconstruction of human brain tissue at the synaptic level. This breakthrough, published in Science, provides unprecedented insights into brain structure and function, potentially revolutionizing neuroscience and our understanding of cognitive processes

Some of the capabilities built through this effort are:

Revealed never-before-seen structures within the human brain

Full dataset, including AI-generated annotations for each cell, made publicly available

Potential to accelerate research in neuroscience and brain-related disorders

Ionosphere Mapping

Using aggregated sensor measurements from millions of Android phones, Google created detailed maps of the ionosphere. This innovative approach to studying the Earth's upper atmosphere could improve our understanding of geomagnetic events and their impact on critical infrastructure, particularly satellite communications and navigation systems

Capabilities:

Enhanced understanding of the ionosphere's impact on satellite communications

Potential improvements in GPS accuracy and reliability

New insights into space weather phenomena

5. Climate Change and Environmental Monitoring

Google Research made significant strides in addressing global challenges related to climate change and environmental monitoring.

Flood Forecasting

Building on their flood forecasting project initiated in 2018, Google Research expanded coverage to 100 countries and 700 million people worldwide in 2024. The AI model now achieves reliability in predicting extreme riverine events in ungauged watersheds at up to a seven-day lead time, matching or exceeding the accuracy of now casts

.Key improvements:

Extension of lead time from five to seven days

Expanded coverage to 100 countries

Potential to save countless lives and reduce economic losses from flooding

Wildfire Detection

In partnership with the U.S. Forest Service, Google Research developed FireSat, an AI model and new global satellite constellation designed to detect and track wildfires as small as a classroom within 20 minutes.

Higher-resolution imagery for wildfire detection

Faster detection times (within 20 minutes)

Potential to revolutionize wildfire response and management

Weather Prediction

Building on the 2023 launch of GraphCast, Google DeepMind's weather prediction model continued to outperform industry gold-standard systems in 2024

Ability to predict weather conditions up to 10 days in advance

Improved accuracy compared to traditional methods

Enhanced cyclone tracking capabilities

Faster computation times

6. AI in Mathematics and Problem-Solving

Google DeepMind made remarkable progress in applying AI to complex mathematical reasoning.

AlphaGeometry and AlphaProof

DeepMind announced AlphaGeometry, an AI system capable of solving complex geometry problems at a level approaching a human Olympiad gold-medalist. The subsequent Gemini-trained model, AlphaGeometry 2, combined with a new model called AlphaProof, solved 83% of all historical International Mathematical Olympiad (IMO) geometry problems from the past 25 years

Demonstrates AI's growing ability to reason and solve problems beyond current human capabilities

Potential for systems that can discover and verify new mathematical knowledge

Applications in education and advanced mathematical research

7. Materials Science and Sustainable Technologies

GNoME Advancements

Building on its 2023 announcement, GNoME has discovered 380,000 materials that are stable at low temperatures, according to simulations

Potential applications of this advancement:

Development of better solar cells

Improved battery technologies

Discovery of potential superconductors

Addressing critical needs in sustainable energy and advanced materials

Libraries

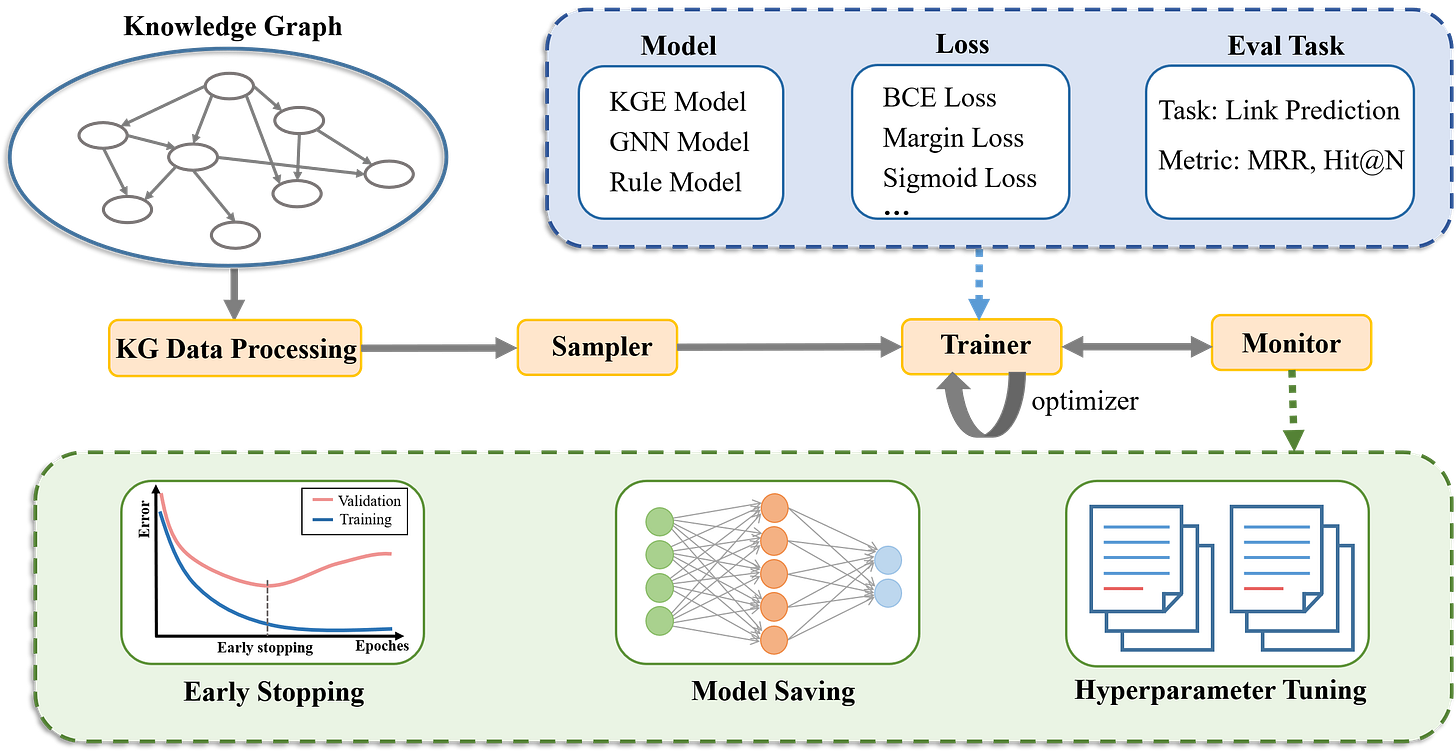

NeuralKG is a python-based library for diverse representation learning of knowledge graphs implementing Conventional KGEs, GNN-based KGEs, and Rule-based KGEs. They provide comprehensive documents for beginners and an online website to organize an open and shared KG representation learning community.

NeuralKG is built on PyTorch Lightning. It provides a general workflow of diverse representation learning on KGs and is highly modularized, supporting three series of KGEs. It has the following features:

Support diverse types of methods. NeuralKG, as a library for diverse representation learning of KGs, provides implementations of three series of KGE methods, including Conventional KGEs, GNN-based KGEs, and Rule-based KGEs.

Support easy customization. NeuralKG contains fine-grained decoupled modules that are commonly used in different KGEs, including KG Data Preprocessing, Sampler for negative sampling, Monitor for hyperparameter tuning, Trainer covering the training, and model validation.

long-term technical maintenance. The core team of NeuralKG will offer long-term technical maintenance. Other developers are welcome to pull requests.

TensorFlow Probability is a library for probabilistic reasoning and statistical analysis in TensorFlow. As part of the TensorFlow ecosystem, TensorFlow Probability provides integration of probabilistic methods with deep networks, gradient-based inference via automatic differentiation, and scalability to large datasets and models via hardware acceleration (e.g., GPUs) and distributed computation.

TFP also works as "Tensor-friendly Probability" in pure JAX!: from tensorflow_probability.substrates import jax as tfp -- Learn more here.

Phoenix is an open-source observability library designed for experimentation, evaluation, and troubleshooting. It allows AI Engineers and Data Scientists to quickly visualize their data, evaluate performance, track down issues, and export data to improve.

AutoGen is an open-source framework for building AI agent systems. It simplifies the creation of event-driven, distributed, scalable, and resilient agentic applications. It allows you to quickly build systems where AI agents collaborate and perform tasks autonomously or with human oversight.

FLAML is a lightweight Python library for efficient automation of machine learning and AI operations. It automates workflow based on large language models, machine learning models, etc. and optimizes their performance.

FLAML enables building next-gen GPT-X applications based on multi-agent conversations with minimal effort. It simplifies the orchestration, automation and optimization of a complex GPT-X workflow. It maximizes the performance of GPT-X models and augments their weakness.

For common machine learning tasks like classification and regression, it quickly finds quality models for user-provided data with low computational resources. It is easy to customize or extend. Users can find their desired customizability from a smooth range.

It supports fast and economical automatic tuning (e.g., inference hyperparameters for foundation models, configurations in MLOps/LMOps workflows, pipelines, mathematical/statistical models, algorithms, computing experiments, software configurations), capable of handling large search space with heterogeneous evaluation cost and complex constraints/guidance/early stopping.

FLAML is powered by a series of research studies from Microsoft Research and collaborators such as Penn State University, Stevens Institute of Technology, University of Washington, and University of Waterloo.

llm-viz displays a 3D model of a working implementation of a GPT-style network. That is, the network topology that's used in OpenAI's GPT-2, GPT-3, (and maybe GPT-4).

The first network displayed with working weights is a tiny such network, which sorts a small list of the letters A, B, and C. This is the demo example model from Andrej Karpathy's minGPT implementation.

The renderer also supports visualizing arbitrary sized networks, and works with the smaller gpt2 size, although the weights aren't downloaded (it's 100's of MBs).

Netron is a viewer for neural network, deep learning and machine learning models.

Netron supports ONNX, TensorFlow Lite, Core ML, Keras, Caffe, Darknet, PyTorch, TensorFlow.js, Safetensors and NumPy.

Netron has experimental support for TorchScript, TensorFlow, MXNet, OpenVINO, RKNN, ML.NET, ncnn, MNN, PaddlePaddle, GGUF and scikit-learn.