Kafka Tiered Storage from Uber

How to impute the impairments in a multi-modal setting for an LLM, Computer Vision Classes

Articles

Uber proposes a new extension to Kafka called Kafka Tiered Storage (KTS) in their blog post, a solution aimed at improving Apache Kafka's storage capabilities and efficiency. The proposal addresses several challenges associated with Kafka's current storage model and introduces a new architecture to improve scalability and efficiency and to reduce operational costs. Kafka stores messages in append-only log segments on the broker's local storage. Topics can be configured with targeted retention based on size or time, allowing users to consume data within the retention period even if consuming applications fail or slow down. However, this storage model presents several challenges:

Scaling storage typically requires adding more broker nodes, which also unnecessarily increases memory and CPU resources.

Larger clusters with more nodes increase deployment complexity and operational costs.

There is a tight coupling between storage and processing in the broker.

To address these issues, Uber proposed Kafka Tiered Storage with the following main goals:

Extend storage beyond the broker

Support for remote storage (including cloud/object stores like S3/GCS/Azure)

Maintain durability and consistency semantics similar to local storage

Isolate reading of latest and historical data

Require no changes from clients

Enable easy tuning and provisioning of clusters

Improve operational and cost efficiency

The underlying tone of the post is that Uber wants to use Kafka as something close to a long-term storage mechanism: they keep the streaming benefits, guarantees, and scalability that Kafka provides, while retaining messages in Kafka almost like a database.

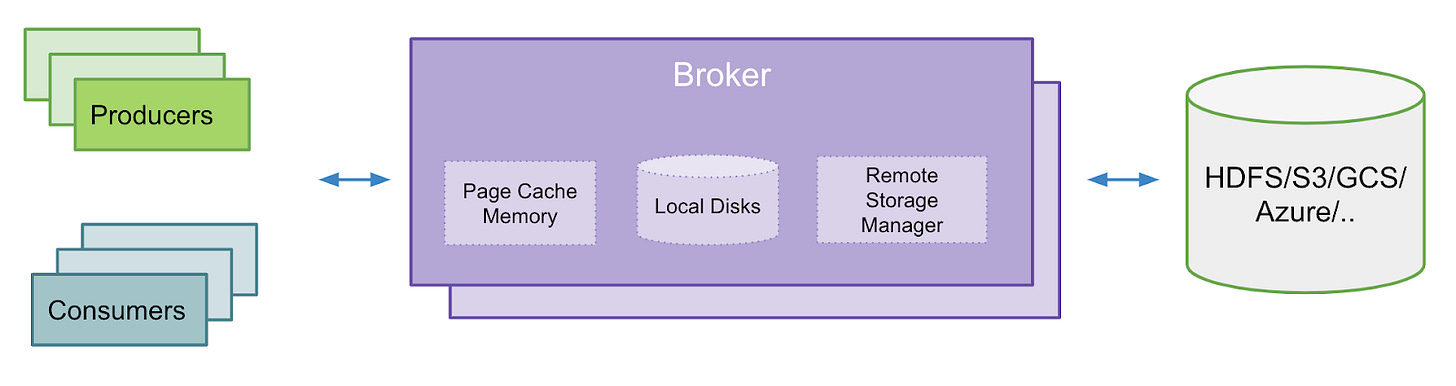

The new architecture introduces two tiers of storage: local and remote. The local tier remains on the broker's local storage, while the remote tier is an extended storage option such as HDFS, S3, GCS, or Azure. Both tiers have their own retention policies based on specific use cases. Key aspects of the new architecture include:

Reduced local tier retention: The retention period for the local tier can be significantly reduced from days to a few hours.

Extended remote tier retention: The remote tier can have a much longer retention period, ranging from days to months.

Efficient data access: Applications sensitive to latency can perform tail reads from the local tier, utilizing Kafka's efficient page cache. Applications requiring older data, such as those performing backfills or recovering from failures, can access data from the remote tier.
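As a rough illustration of how the two retention periods split reads between tiers, here is a minimal Python sketch. The class name, field names, and the example retention values are assumptions made for illustration; they are not Kafka configuration keys or code from the post.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class TieredRetention:
    """Hypothetical retention settings for one topic (names are illustrative)."""
    local: timedelta   # how long segments stay on the broker's local disk
    remote: timedelta  # how long segments stay in remote storage (assumed >= local)


def serving_tier(record_age: timedelta, retention: TieredRetention) -> str:
    """Return which tier would serve a read for a record of the given age."""
    if record_age <= retention.local:
        return "local"    # tail read, typically served from the page cache
    if record_age <= retention.remote:
        return "remote"   # backfill or recovery read from the remote tier
    return "expired"      # older than both retention periods


# Example values only: a few hours locally, a month remotely.
retention = TieredRetention(local=timedelta(hours=4), remote=timedelta(days=30))
print(serving_tier(timedelta(minutes=5), retention))
print(serving_tier(timedelta(days=3), retention))
print(serving_tier(timedelta(days=45), retention))
```

The point of the sketch is only that shrinking the local window does not shrink what consumers can read; it changes which tier answers.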

This approach offers several benefits over keeping everything in a single tier:

Improved scalability: Storage can be scaled without being tied to memory and CPU resources.

Long-term storage viability: Kafka becomes a viable option for long-term data storage.

Reduced local storage burden: Decreases the amount of data to be transferred during recovery and rebalancing.

Direct remote access: Log segments in the remote tier can be accessed directly without restoration on the broker.

Elimination of separate data pipelines: Longer data retention without the need for separate pipelines to transfer data from Kafka to external storage.

The second point, long-term storage, is the main aspect here. Kafka keeps the segments across both tiers and stitches them together internally, while still streaming the messages as before. Replaying and debugging specific messages becomes much easier, because they are no longer bound by the broker's retention limit; retention of older data is handled by the remote tier.

The new architecture divides a topic partition's log into two logical components:

Local log: Contains a list of local log segments

Remote log: Contains a list of remote log segments

The remote log subsystem copies eligible segments from local storage to remote storage. A segment is considered eligible when its end offset is less than the LastStableOffset of a partition. The architecture introduces three new components:

RemoteStorageManager: An interface providing actions for remote log segments, including copy, fetch, and delete operations from remote storage.

RemoteLogMetadataManager: An interface for managing the lifecycle of metadata about remote log segments with strongly consistent semantics. A default implementation uses an internal topic, but users can plug in their own implementation if desired.

RemoteLogManager: A logical layer responsible for managing the lifecycle of remote log segments, including copying segments to remote storage, cleaning up expired segments, and fetching data from remote storage.

The process of copying segments to remote storage involves the following steps:

The broker acting as the leader for a topic partition is responsible for copying eligible log segments to remote storage.

Segments are copied sequentially from the earliest to the latest.

The RemoteStorageManager is used to copy the segment along with its indexes, timestamp, producer snapshot, and leader epoch cache.

Entries are added and updated in the RemoteLogMetadataManager with respective states for each copied segment.
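The copy steps above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Kafka's implementation: the in-memory classes stand in for real `RemoteStorageManager` and `RemoteLogMetadataManager` implementations, and the state names, method names, and `copy_eligible_segments` function are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class SegmentState(Enum):
    # Illustrative state names for this sketch only.
    COPY_STARTED = "copy_started"
    COPY_FINISHED = "copy_finished"


@dataclass(frozen=True)
class LogSegment:
    base_offset: int
    end_offset: int


@dataclass
class InMemoryRemoteStorage:
    """Stand-in for a RemoteStorageManager implementation."""
    copied: list = field(default_factory=list)

    def copy_segment(self, segment: LogSegment) -> None:
        # A real implementation would also upload the indexes, producer
        # snapshot, and leader epoch cache alongside the segment.
        self.copied.append(segment)


@dataclass
class InMemoryMetadata:
    """Stand-in for a RemoteLogMetadataManager implementation."""
    states: dict = field(default_factory=dict)

    def put_state(self, segment: LogSegment, state: SegmentState) -> None:
        self.states[segment.base_offset] = state


def copy_eligible_segments(segments, last_stable_offset, is_leader, storage, metadata):
    """Copy eligible segments, earliest first, recording a state for each."""
    if not is_leader:
        return []  # only the partition leader copies segments
    eligible = [s for s in segments if s.end_offset < last_stable_offset]
    copied = []
    for segment in sorted(eligible, key=lambda s: s.base_offset):
        metadata.put_state(segment, SegmentState.COPY_STARTED)
        storage.copy_segment(segment)
        metadata.put_state(segment, SegmentState.COPY_FINISHED)
        copied.append(segment)
    return copied


storage, metadata = InMemoryRemoteStorage(), InMemoryMetadata()
segments = [LogSegment(200, 299), LogSegment(0, 99), LogSegment(100, 199)]
copied = copy_eligible_segments(segments, 250, True, storage, metadata)
print([s.base_offset for s in copied])
```

With a LastStableOffset of 250, the segment ending at offset 299 is not yet eligible, so only the first two segments are copied, in offset order.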

Cleaning up remote segments is performed at regular intervals by computing eligible segments using a dedicated thread pool. This process is separate from the asynchronous cleanup of local log segments. When a topic is deleted, remote log segments are cleaned up asynchronously, without blocking the existing delete operation or the creation of a new topic.

Fetching segments from remote storage is handled as follows:

When a consumer fetch request is received for data only available in remote storage, it is served using a dedicated thread pool.

If the targeted offset is available in the broker's local storage, it is served using the existing local fetch mechanism.

This approach separates local reads from remote reads, preventing them from blocking each other.

The RemoteLogManager determines the targeted remote segment based on the desired offset and leader epoch by querying the metadata store using the RemoteLogMetadataManager.

The RemoteStorageManager is then used to find the position within the segment and start fetching the desired data.
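The routing decision described above can be sketched as follows. This is a simplified illustration with hypothetical names (`RemoteSegmentMetadata`, `find_remote_segment`, `route_fetch`); it assumes each remote segment belongs to a single leader epoch, which is a simplification made here and not a claim about Kafka. The dedicated thread pools are left out.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class RemoteSegmentMetadata:
    base_offset: int
    end_offset: int
    leader_epoch: int  # simplification: one epoch per segment


def find_remote_segment(segments, offset, leader_epoch):
    """Look up the remote segment holding `offset`; `segments` is sorted by base_offset."""
    base_offsets = [s.base_offset for s in segments]
    index = bisect_right(base_offsets, offset) - 1
    if index < 0:
        return None  # offset is before the earliest remote segment
    candidate = segments[index]
    if offset <= candidate.end_offset and candidate.leader_epoch == leader_epoch:
        return candidate
    return None


def route_fetch(offset, leader_epoch, local_log_start_offset, remote_segments):
    """Decide whether a fetch is served locally or from remote storage."""
    if offset >= local_log_start_offset:
        return ("local", None)  # existing local fetch path
    segment = find_remote_segment(remote_segments, offset, leader_epoch)
    if segment is None:
        return ("out_of_range", None)
    return ("remote", segment)  # would be handed to the remote-read thread pool


remote_segments = [
    RemoteSegmentMetadata(0, 99, leader_epoch=0),
    RemoteSegmentMetadata(100, 199, leader_epoch=1),
]
print(route_fetch(250, 1, 200, remote_segments))
print(route_fetch(150, 1, 200, remote_segments))
```

Keeping the two paths separate at this decision point is what allows slow remote reads to avoid blocking tail reads.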

After reading the whole article, I thought about how we used (DocumentDB → CosmosDB) at Jet. We wanted to write to a database and also receive the change log of those writes as a stream. That gave us the best of both worlds: a solid storage solution and a stream of changes for downstream consumers. In this post, the solution goes the other way around: Uber already has a streaming solution and wants stronger storage guarantees on top of it. Storage needs grow with the volume of messages processed, and broker-local retention is probably too limiting for various use cases at Uber (they do not say exactly which business problem the long-term storage solves).

Google introduces Human I/O, a novel approach to detecting situationally induced impairments and disabilities (SIIDs) using large language models (LLMs), egocentric vision, and multimodal sensing. That is a mouthful with a lot of acronyms, but the promise of the paper is rather interesting.

The post tackles the challenges posed by temporary impairments that affect our ability to interact with devices in various everyday situations. Human I/O is designed as a generalizable and extensible framework for detecting SIIDs. Instead of creating individual models for specific activities like face-washing or tooth-brushing, it assesses the availability of four key user interaction channels:

Vision (e.g., reading text messages, watching videos)

Hearing (e.g., hearing notifications, phone calls)

Vocal (e.g., having conversations, using voice assistants)

Hand (e.g., using touch screens, gesture control)

The system's primary goal is to universally evaluate these channels' availability in real time, allowing for adaptive interactions with devices. This matters because, as we interact with the world, we regularly face such impairments: when we cannot see something, for example, we try to deduce what is happening from sound or smell instead. They want to build an LLM-based system that is robust to these impairments.

Human I/O brings the following aspects:

Multimodal Sensing:

Human I/O utilizes egocentric vision as a primary input source. This involves using a camera that captures the user's first-person perspective, providing rich contextual information about their environment and activities. In addition to visual data, the system likely incorporates other sensors such as microphones and accelerometers to gather a comprehensive understanding of the user's situation.

Large Language Model Integration:

The core of Human I/O's reasoning capabilities is built upon LLMs. These models are used to interpret the multimodal sensor data and make inferences about the availability of each interaction channel. The LLMs are likely fine-tuned or prompted to understand the relationship between observed situations and their impact on user interactions.

Chain-of-Thought Reasoning:

The full version of Human I/O employs a chain-of-thought reasoning module. This approach allows the system to break down complex situations into a series of logical steps, improving the accuracy and interpretability of its predictions. The chain-of-thought process likely involves analyzing the sensor data, identifying relevant contextual cues, and reasoning about how these factors affect each interaction channel.

Availability Prediction:

The system predicts the availability of each interaction channel on a continuous scale. This nuanced approach allows for more precise adaptations compared to binary available/unavailable classifications. The predictions take into account various factors such as environmental conditions, user activities, and potential physical or cognitive limitations.

Human I/O Lite:

A simplified version of the system, called Human I/O Lite, replaces the chain-of-thought reasoning module with a one-shot prompt. This variant is designed to reduce computational requirements while still maintaining reasonable performance. The one-shot prompt likely provides a more direct instruction to the LLM for making predictions based on the input data.

To me, the most important piece here is the chain-of-thought reasoning, which is crucial for imputing data that is missing in one channel from data coming in through the other channels.

Evaluation and Performance:

The authors conducted a comprehensive evaluation of Human I/O using 300 clips selected from 60 in-the-wild egocentric video recordings, covering 32 different scenarios. The evaluation results demonstrate the system's effectiveness:

Accuracy: Human I/O achieved an 82% accuracy in predicting channel availability across the diverse set of scenarios.

Mean Absolute Error (MAE): The system achieved a low MAE of 0.22, indicating that its predictions closely align with actual availability levels. This means that, on average, the system's predictions deviate from the actual availability by less than a third of a level.

Precision: 96% of the system's predictions were within one step of the actual availability level, showcasing its high precision.

Human I/O Lite Performance: The simplified version achieved an MAE of around 0.44, demonstrating a promising ability to predict SIIDs even with reduced computational resources.
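To make the three metrics above concrete, here is a small Python sketch of how they could be computed. It assumes availability is encoded as integer levels (0 to 3 here, an illustrative choice), and the labels and predictions are made-up toy data, not results from the paper.

```python
def evaluate(predicted, actual):
    """Compute MAE, exact-match accuracy, and the share of predictions within one level."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("predicted and actual must be non-empty and the same length")
    errors = [abs(p - a) for p, a in zip(predicted, actual)]
    count = len(errors)
    return {
        "mae": sum(errors) / count,                         # average size of the miss
        "accuracy": sum(e == 0 for e in errors) / count,    # exact level predicted
        "within_one": sum(e <= 1 for e in errors) / count,  # off by at most one level
    }


# Toy data: hypothetical availability levels for ten (clip, channel) pairs.
actual = [0, 1, 2, 3, 0, 2, 1, 3, 0, 1]
predicted = [0, 1, 2, 3, 0, 1, 1, 3, 2, 1]
metrics = evaluate(predicted, actual)
print(metrics)
```

In this toy run, one prediction is off by one level and one is off by two, which is why accuracy and the within-one share differ.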

Libraries

Insanely Fast Whisper is an opinionated CLI to transcribe Audio files w/ Whisper on-device! Powered by 🤗 Transformers, Optimum & flash-attn

TL;DR - Transcribe 150 minutes (2.5 hours) of audio in less than 98 seconds - with OpenAI's Whisper Large v3. Blazingly fast transcription is now a reality!⚡️

This software project accompanies the research paper, Stabilizing Transformer Training by Preventing Attention Entropy Collapse, published at ICML 2023.

Transformers are difficult to train. In this work, we study the training stability of Transformers by proposing a novel lens named Attention Entropy Collapse. Attention Entropy is defined as the quantity

$$\mathrm{Ent}(A_i) = -\sum_{j=1}^{T} A_{i,j} \log(A_{i,j})$$

for an attention matrix $A$, with $A_{i,j}$ corresponding to the $i$-th query and $j$-th key/value, respectively. Our observation is that training instability often occurs in conjunction with sharp decreases of the average attention entropy, and we denote this phenomenon as entropy collapse. There are two implementations. One in PyTorch, applied to the Vision Transformer (ViT) setting; and another in JAX, applied to speech recognition (ASR). Please refer to the vision and speech folders for details. The same PyTorch implementation was used for language modeling (LM) and machine translation (MT) experiments.
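As a quick illustration of the attention entropy definition, the following pure-Python sketch computes the entropy of individual attention rows. It is not taken from the project's code; the helper names are made up here, and the logits are toy values. A uniform row over T keys has the maximum entropy log(T), while a sharply peaked row has entropy near zero, which is the "collapsed" regime.

```python
import math


def softmax(logits):
    """Turn one row of attention logits into attention weights."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def attention_entropy(attention_row):
    """Entropy of one query's attention distribution over T keys."""
    return -sum(p * math.log(p) for p in attention_row if p > 0.0)


def mean_attention_entropy(logit_rows):
    """Average entropy over all query rows of an attention matrix."""
    rows = [softmax(row) for row in logit_rows]
    return sum(attention_entropy(row) for row in rows) / len(rows)


uniform = softmax([0.0, 0.0, 0.0, 0.0])  # attends equally to all four keys
peaked = softmax([20.0, 0.0, 0.0, 0.0])  # almost all weight on one key
print(attention_entropy(uniform), math.log(4))
print(attention_entropy(peaked))
print(mean_attention_entropy([[0.0, 0.0, 0.0, 0.0], [20.0, 0.0, 0.0, 0.0]]))
```

Tracking the average of this quantity during training is one way to observe the sharp drops that the paper associates with instability.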

Intel Neural Compressor aims to provide popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet, as well as Intel extensions such as Intel Extension for TensorFlow and Intel Extension for PyTorch. In particular, the tool provides the key features, typical examples, and open collaborations as below:

Support a wide range of Intel hardware such as Intel Xeon Scalable Processors, Intel Xeon CPU Max Series, Intel Data Center GPU Flex Series, and Intel Data Center GPU Max Series with extensive testing; support AMD CPU, ARM CPU, and NVidia GPU through ONNX Runtime with limited testing

Validate popular LLMs such as LLama2, Falcon, GPT-J, Bloom, OPT, and more than 10,000 broad models such as Stable Diffusion, BERT-Large, and ResNet50 from popular model hubs such as Hugging Face, Torch Vision, and ONNX Model Zoo, by leveraging zero-code optimization solution Neural Coder and automatic accuracy-driven quantization strategies

Collaborate with cloud marketplaces such as Google Cloud Platform, Amazon Web Services, and Azure, software platforms such as Alibaba Cloud, Tencent TACO and Microsoft Olive, and open AI ecosystem such as Hugging Face, PyTorch, ONNX, ONNX Runtime, and Lightning AI

UL2 is a unified framework for pretraining models that are universally effective across datasets and setups. UL2 uses Mixture-of-Denoisers (MoD), a pre-training objective that combines diverse pre-training paradigms together. UL2 introduces a notion of mode switching, wherein downstream fine-tuning is associated with specific pre-training schemes.

Computer Vision Classes

CSE 455: Computer Vision from the University of Washington has comprehensive introductory material for computer vision, including lecture recordings.

Computer Vision from NYU also has good introductory material, and it covers recent model architectures such as Transformers.

Computer Vision from Berkeley has graduate-level material, but it is outdated (2017).