How to CI/CD ML Models

Gaussian Belief Propagation, MLP Mixer might be a CNN in disguise (maybe)

This week, we have three excellent articles: a discussion of one of the recent papers (MLP Mixer), the Gaussian Belief Propagation (GBP) paper (an interactive article in the style of Distill), and Uber’s CI/CD approach to machine learning model management.

Is MLP Mixer a CNN in disguise? asks whether the MLP Mixer paper actually relies on convolutional neural network methods. Behind the catchy title is a nice walkthrough of MLP Mixer, and, terminology wars aside, the article doubles as a good review of the original paper.

And of course, one might argue that an MLP is itself a convolution.
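A small numpy check makes the point concrete for the channel-mixing half of a Mixer layer: applying one shared dense layer to every pixel (or patch) gives exactly the output of a 1x1 convolution. The `conv2d` helper is a plain sliding-window implementation written only for illustration, and the shapes are arbitrary assumptions.

```python
import numpy as np

def conv2d(x, kernel, bias):
    """Valid (no padding, stride 1) 2-D convolution, channels-last.

    x: (H, W, C_in), kernel: (kh, kw, C_in, C_out), bias: (C_out,).
    Like deep learning frameworks, this is technically cross-correlation.
    """
    kh, kw, _, c_out = kernel.shape
    h_out, w_out = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((h_out, w_out, c_out))
    for i in range(h_out):
        for j in range(w_out):
            window = x[i:i + kh, j:j + kw, :]  # receptive field of output pixel (i, j)
            out[i, j] = np.tensordot(window, kernel, axes=3) + bias
    return out

rng = np.random.default_rng(0)
H, W, C_in, C_out = 4, 4, 3, 5
image = rng.normal(size=(H, W, C_in))
weight = rng.normal(size=(C_in, C_out))
bias = rng.normal(size=(C_out,))

# MLP view: flatten pixels into tokens and apply one shared dense layer to each.
mlp_out = (image.reshape(H * W, C_in) @ weight + bias).reshape(H, W, C_out)

# Convolution view: the very same weights used as a 1x1 kernel.
conv_out = conv2d(image, weight.reshape(1, 1, C_in, C_out), bias)

print(np.allclose(mlp_out, conv_out))  # True
```

The interesting part of the debate is the token-mixing MLP, which mixes across all patches at once rather than within a small local window.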

But I digress.

Even though Distill is on hiatus, I like seeing new approaches like the GBP article, where researchers open their work to a wider audience by making the paper more accessible and, in certain cases, interactive.

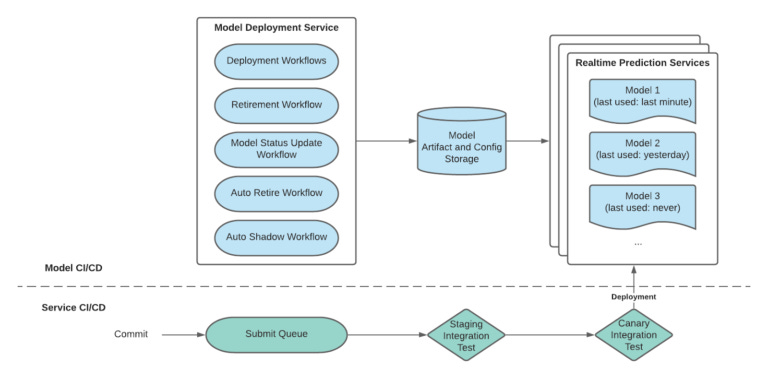

Uber has recently been on fire, publishing post after post about its MLOps practices, and this week is no exception: a really good, long article on how they manage machine learning models. In it, they talk about how they retire and shadow models.

Retiring is an important concept because, as the platform grows, there will be ghost models (maybe "orphaned models" is the better phrase) that are still deployed but serve no traffic, or no meaningful share of it. They use a mixture of expiration dates and, probably, proactive measurement of traffic share to find the models that do not serve traffic.

The other topic is how you actually deploy the model to production. Generally, it is a good idea to do offline testing with good old cross-validation. However, if your platform/infrastructure supports it, it is even better to also validate and test online. You get a holistic view of how the model performs (accuracy as well as serving performance), and it is the only way to make sure the model can actually handle production traffic. This is why replaying production traffic against the model is generally a good idea. In this article, Uber talks about "auto-shadowing" these production models, which lets ML models be tested in a production setting first without serving real traffic.
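As a rough illustration of replaying production traffic against a candidate, here is a hypothetical sketch, not Uber's implementation: `shadow_replay`, `ready_to_promote`, the agreement tolerance, and the promotion thresholds are made-up names and numbers. The candidate sees logged production requests and its scores are compared with the production model's, but nothing it predicts is returned to users.

```python
import math
import time
from dataclasses import dataclass

@dataclass
class ShadowReport:
    n_requests: int
    agreement_rate: float  # share of requests where the two scores differ by at most tol
    max_abs_diff: float    # largest score gap seen during the replay
    p99_latency_ms: float  # nearest-rank 99th percentile latency of the shadow model

def shadow_replay(requests, production_model, shadow_model, tol=0.05):
    """Replay logged requests against both models; only production's output is 'served'."""
    if not requests:
        raise ValueError("need at least one logged request")
    agree, max_diff, latencies = 0, 0.0, []
    for request in requests:
        served = production_model(request)
        start = time.perf_counter()
        shadow = shadow_model(request)  # scored and compared, never returned to users
        latencies.append((time.perf_counter() - start) * 1000.0)
        diff = abs(served - shadow)
        if diff <= tol:
            agree += 1
        max_diff = max(max_diff, diff)
    latencies.sort()
    p99 = latencies[math.ceil(0.99 * len(latencies)) - 1]
    return ShadowReport(len(requests), agree / len(requests), max_diff, p99)

def ready_to_promote(report, min_agreement=0.95, max_p99_ms=50.0):
    # Hypothetical gate: mostly agrees with production and stays within a latency budget.
    return report.agreement_rate >= min_agreement and report.p99_latency_ms <= max_p99_ms

# Hypothetical models: the candidate diverges on the two longest trips.
def production(request):
    return 0.1 * request["trip_km"]

def candidate(request):
    return 0.1 * request["trip_km"] + (0.2 if request["trip_km"] > 98 else 0.0)

requests = [{"trip_km": km} for km in range(1, 101)]
report = shadow_replay(requests, production, candidate)
print(report.agreement_rate)  # 0.98
```

Comparing against the production model's own output, rather than only against delayed ground-truth labels, catches serving bugs and regressions before any user sees the candidate.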

Some people reached out on Twitter asking me to cover the Sparsity in Neural Networks conference in the newsletter, and you should check it out! I have not gone through any of the videos yet, so that will come next week.

Without further ado, let’s dive into the articles!

Bugra

Articles

The Is MLP Mixer a CNN in disguise article tackles the question of whether the well-known MLP Mixer paper describes a convolutional neural network under the hood, and goes over implementation details in PyTorch and JAX. It also compares the approach against convolutional neural networks.
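For readers who want the structure without opening the PyTorch or JAX code, here is a minimal numpy sketch of one Mixer layer under simplifying assumptions: biases and LayerNorm's learnable scale/shift are omitted, weights are random, and the sizes (32x32 image, 8x8 patches, 64 channels) are arbitrary. The token-mixing MLP runs on the transposed patch table so it mixes information across patches, while the channel-mixing MLP is the per-patch dense layer.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    # Normalize each patch (row) over its channels; learnable scale/shift omitted.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def mlp(x, w1, w2):
    # Two dense layers with a GELU in between; biases omitted for brevity.
    return gelu(x @ w1) @ w2

def image_to_patches(img, p):
    # (H, W, C) image -> (num_patches, p * p * C) table of flattened patches.
    h, w, c = img.shape
    patches = img.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4)
    return patches.reshape(-1, p * p * c)

def mixer_layer(x, params):
    """x: (num_patches, channels) -> same shape."""
    # Token mixing: transpose so the MLP mixes across patches; all channels share weights.
    x = x + mlp(layer_norm(x).T, params["tok_w1"], params["tok_w2"]).T
    # Channel mixing: the same MLP applied to each patch independently.
    x = x + mlp(layer_norm(x), params["ch_w1"], params["ch_w2"])
    return x

rng = np.random.default_rng(0)
img = rng.normal(size=(32, 32, 3))
patches = image_to_patches(img, p=8)  # (16, 192)
S, C, D_S, D_C = patches.shape[0], 64, 32, 128  # patches, channels, hidden sizes
x = patches @ (rng.normal(size=(patches.shape[1], C)) * 0.02)  # per-patch linear embedding
params = {
    "tok_w1": rng.normal(size=(S, D_S)) * 0.02,
    "tok_w2": rng.normal(size=(D_S, S)) * 0.02,
    "ch_w1": rng.normal(size=(C, D_C)) * 0.02,
    "ch_w2": rng.normal(size=(D_C, C)) * 0.02,
}
out = mixer_layer(x, params)
print(out.shape)  # (16, 64)
```

A full model stacks several of these layers, then applies global average pooling over patches and a linear classifier.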

Read the article and let me know whether your answer to the question is yes or no.

Yann LeCun also weighed in on this discussion; the thread is a good read on the terminology as well.

Gaussian Belief Propagation is an interactive article that walks through the method and how it is useful for probabilistic inference.

The paper has a great motivating introduction:

As we search for the best algorithms for probabilistic inference, we should recall the "Bitter Lesson" of machine learning (ML) research: "general methods that leverage computation are ultimately the most effective, and by a large margin". A reminder of this is the success of CNN-driven deep learning, which is well suited to GPUs, the most powerful widely-available processors in recent years. (The "Bitter Lesson" refers to Rich Sutton's essay of the same name.)

It then argues that, in deep learning, computation won. Can we leverage this computation to enable effective probabilistic inference as well? With new hardware that distributes computation across many more machines, the paper argues that we can, through Gaussian Belief Propagation.
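To make the "local computation" point concrete, here is a minimal numpy sketch of Gaussian Belief Propagation. It is a simplification: the article works with general factor graphs, while this sketch uses the pairwise form where the joint is written in information form, p(x) ∝ exp(-½ xᵀΛx + ηᵀx), with `lam` and `eta` standing for Λ and η. Each message update reads only a node's own potential and the messages from its neighbours, which is what lets GBP spread across parallel hardware. On a tree such as the chain below, the beliefs match the exact marginals.

```python
import numpy as np

def gaussian_bp(lam, eta, iters=50):
    """Pairwise Gaussian BP in information form.

    lam: (n, n) symmetric precision matrix, eta: (n,) information vector.
    Returns per-variable marginal means and variances.
    """
    n = len(eta)
    nbrs = [[j for j in range(n) if j != i and lam[i, j] != 0] for i in range(n)]
    # Message i -> j is a 1-D Gaussian in information form: (precision, information).
    msg_p = {(i, j): 0.0 for i in range(n) for j in nbrs[i]}
    msg_h = {(i, j): 0.0 for i in range(n) for j in nbrs[i]}
    for _ in range(iters):
        new_p, new_h = {}, {}
        for i in range(n):
            for j in nbrs[i]:
                # Node i's own potential plus every incoming message except j's.
                p_cav = lam[i, i] + sum(msg_p[k, i] for k in nbrs[i] if k != j)
                h_cav = eta[i] + sum(msg_h[k, i] for k in nbrs[i] if k != j)
                # Marginalize x_i out of the pairwise term to get a message about x_j.
                new_p[i, j] = -lam[i, j] ** 2 / p_cav
                new_h[i, j] = -lam[i, j] * h_cav / p_cav
        msg_p, msg_h = new_p, new_h  # synchronous ("flooding") schedule
    prec = np.array([lam[i, i] + sum(msg_p[k, i] for k in nbrs[i]) for i in range(n)])
    info = np.array([eta[i] + sum(msg_h[k, i] for k in nbrs[i]) for i in range(n)])
    return info / prec, 1.0 / prec

# A 5-variable chain: each variable is coupled only to its neighbours.
n = 5
lam = 2.5 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
eta = np.array([1.0, 0.0, 2.0, 0.0, -1.0])
means, variances = gaussian_bp(lam, eta)
```

On graphs with loops the same updates give loopy GBP, which is not guaranteed to converge; when it does converge, the means are exact but the variances generally are not.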

Uber wrote a blog post on how they do continuous integration and deployment for machine learning models.

They have a variety of services that operate on models, which make managing ML models easier. I especially like the auto-retirement and auto-shadow workflows, which respectively make it much easier to retire models that are no longer used and to release new models against production traffic without serving their predictions.
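The auto-retirement idea can be sketched in a few lines. This is a hypothetical policy rather than Uber's actual workflow: the `DeployedModel` record, the model names, the 0.1% traffic floor, and the combination of an expiration date with a measured traffic share are assumptions based on the description above.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class DeployedModel:
    name: str
    expires_on: date       # retirement date set when the model was deployed
    traffic_share: float   # fraction of requests it served over a lookback window

def models_to_retire(models, today, min_traffic_share=0.001):
    """Flag models that have expired or no longer serve meaningful traffic."""
    flagged = []
    for model in models:
        if model.expires_on <= today:
            flagged.append((model.name, "expired"))
        elif model.traffic_share < min_traffic_share:
            flagged.append((model.name, "no meaningful traffic"))
    return flagged

fleet = [
    DeployedModel("eta_v12", date(2021, 12, 31), 0.62),
    DeployedModel("eta_v9", date(2021, 6, 30), 0.0),
    DeployedModel("fraud_exp_3", date(2022, 3, 1), 0.0002),
]
print(models_to_retire(fleet, today=date(2021, 7, 15)))
# [('eta_v9', 'expired'), ('fraud_exp_3', 'no meaningful traffic')]
```

Checking expiration first means an expired model is flagged even if it still receives traffic; a real workflow would likely notify the owning team before undeploying anything.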

Papers

Simon Says: Evaluating and Mitigating Bias in Pruned Neural Networks with Knowledge Distillation answers the question “can we evaluate how much bias pruning introduces into neural networks?” with a resounding yes, and then finds ways to mitigate this bias through knowledge distillation.

It first proposes two metrics to quantify the bias that pruning induces in neural networks.

Then, it proposes knowledge distillation to mitigate and remove this bias.
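For readers new to knowledge distillation, here is a minimal numpy sketch of the classic Hinton-style distillation loss, in which a pruned student is trained to match both the temperature-softened outputs of the unpruned teacher and the true labels. The temperature `T`, the weight `alpha`, and the example logits are illustrative assumptions, and this is the generic technique, not necessarily the exact objective used in the paper.

```python
import numpy as np

def softmax(logits, T=1.0):
    z = logits / T
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.9):
    """alpha * T^2 * KL(teacher || student) on softened outputs + (1 - alpha) * cross-entropy."""
    p_teacher = softmax(teacher_logits, T)
    p_student = softmax(student_logits, T)
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student)), axis=-1).mean()
    # Hard-label cross-entropy at temperature 1.
    p_hard = softmax(student_logits)
    ce = -np.log(p_hard[np.arange(len(labels)), labels]).mean()
    return alpha * T**2 * kl + (1 - alpha) * ce

teacher = np.array([[4.0, 1.0, -2.0], [0.5, 3.0, 0.0]])  # unpruned model's logits
student = np.array([[2.0, 1.5, -1.0], [0.0, 2.0, 0.5]])  # pruned model's logits
labels = np.array([0, 1])
print(distillation_loss(student, teacher, labels))
```

The T² factor keeps the soft-target gradients on roughly the same scale as the hard-label term when the temperature changes.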

The code for the paper is available here.

The MultiBERTs: BERT Reproductions for Robustness Analysis examines how random initialization and hyperparameters such as step size affect the fine-tuning accuracy of BERT models, which were popularized by Google. The authors train 25 different BERT models and release all of the checkpoints here. The main conclusion is that the larger the step size, the better the model accuracy.

Vision Transformer (annotated): I already shared the Vision Transformer paper in previous newsletters, but labml has a nice annotated version along with a notebook. They even have an experiment that you can run on the CIFAR-10 dataset.

Deduplicating Training Data Makes Language Models Better is exactly what its title says. Any deep learning model trained on data scraped from the web can benefit from some good old deduplication. As the paper says, scraped datasets contain a lot of duplicated data, and that hurts model accuracy. They also release the code, written in Rust, and it supports TensorFlow Datasets, so you can apply the library to datasets available in that format.
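To show the basic idea, here is a deliberately simple stand-in for the paper's tooling: exact deduplication by hashing normalized text, plus near-duplicate detection via Jaccard similarity over word 3-gram shingles. The paper's tools use suffix arrays (exact substrings) and MinHash (near duplicates) to scale to web-size corpora; the brute-force comparison below is quadratic in the number of documents, and the 0.8 threshold is an assumption.

```python
import hashlib
import re

def normalize(text):
    # Lowercase, drop punctuation, and collapse whitespace before comparing.
    text = re.sub(r"[^\w\s]", "", text.lower())
    return re.sub(r"\s+", " ", text).strip()

def shingles(text, n=3):
    # Set of word n-grams; texts shorter than n words become a single shingle.
    words = normalize(text).split()
    return {tuple(words[i:i + n]) for i in range(max(1, len(words) - n + 1))}

def jaccard(a, b):
    return len(a & b) / len(a | b) if (a | b) else 1.0

def deduplicate(docs, threshold=0.8, n=3):
    """Keep the first copy of each document, dropping exact and near duplicates."""
    seen_digests, kept, kept_shingles = set(), [], []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen_digests:
            continue  # cheap exact check first: duplicate after normalization
        doc_shingles = shingles(doc, n)
        if any(jaccard(doc_shingles, other) >= threshold for other in kept_shingles):
            continue  # near duplicate of a document we already kept
        seen_digests.add(digest)
        kept.append(doc)
        kept_shingles.append(doc_shingles)
    return kept

docs = [
    "Deduplicating training data makes language models better and reduces memorization of the training set.",
    "deduplicating   training data makes language models better and reduces memorization of the training set",
    "Deduplicating training data makes language models better and reduces memorization of the training data.",
    "Vision Transformers split an image into patches.",
]
print(len(deduplicate(docs)))  # 2
```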

Libraries

CheckList is a library that provides a test suite for verifying NLP-based systems.
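CheckList organizes behavioral tests into types such as Minimum Functionality Tests (MFT), Invariance tests (INV), and Directional Expectation tests (DIR). The sketch below imitates the first two in plain Python to show the idea; it does not use the library's own API, and `toy_sentiment` and `fill_template` are hypothetical helpers, the former a keyword model standing in for a real NLP system.

```python
from itertools import product

def toy_sentiment(text):
    # Hypothetical stand-in for a real NLP model: a keyword lookup.
    text = text.lower()
    if any(word in text for word in ("great", "love", "excellent")):
        return "positive"
    if any(word in text for word in ("bad", "hate", "terrible")):
        return "negative"
    return "neutral"

def fill_template(template, **slots):
    # Expand every combination of slot values, e.g. {name} x {thing}.
    keys = list(slots)
    return [template.format(**dict(zip(keys, combo))) for combo in product(*slots.values())]

def mft(model, inputs, expected):
    """Minimum functionality test: return inputs that do not get the expected label."""
    return [x for x in inputs if model(x) != expected]

def inv(model, inputs, perturb):
    """Invariance test: return inputs whose prediction changes under a label-preserving edit."""
    return [x for x in inputs if model(x) != model(perturb(x))]

slots = {"name": ["Anna", "Kofi"], "thing": ["food", "service"]}
positive = fill_template("{name} thinks the {thing} is great.", **slots)
negated = fill_template("{name} does not think the {thing} is great.", **slots)

print(mft(toy_sentiment, positive, "positive"))      # []
print(len(mft(toy_sentiment, negated, "negative")))  # 4: the keyword model misses negation
print(inv(toy_sentiment, positive, lambda s: s.replace("Anna", "Maria")))  # []
```

Templates make it cheap to generate many targeted inputs, so a single failing capability (here, negation) shows up as a cluster of failures rather than a single anecdote.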

Archai is a Neural Architecture Search platform that supports PyTorch models.

Vega is a library that supports a variety of AutoML capabilities for TensorFlow, PyTorch, and MindSpore.

Classes

Responsible Data Science covers the following topics:

Fairness

Data Protection/Lineage

Transparency and Interpretability

Its format of dividing these topics into modules and providing Colab notebooks for each section is great. One example notebook is here.