Google open-sources Gemma (2B and 7B parameter models)

VideoPrism: A new Visual Encoder, Mamba and HiddenMambaAttn

Articles

Google launched a new model series called Gemma. After the disastrous rollout of Gemini, they also wanted to open-source smaller models for the community to try out, and to drive adoption of GCP (Google Cloud Platform) as the place to try them.

This is an excellent strategy: it gives the community different options, while Google still has solutions for enterprises and end users, much like ChatGPT.

The main features of the Gemma model family are as follows:

They released models in two sizes: Gemma 2B and Gemma 7B. Each size is released with pre-trained and instruction-tuned variants.

A new Responsible Generative AI Toolkit provides guidance and essential tools for creating safer AI applications with Gemma.

As far as I know, no other GenAI release focuses this much on the responsible AI aspect of its models. But then again, this focus probably contributed quite a bit to the disastrous rollout that Gemini suffered.

They provide toolchains for inference and supervised fine-tuning (SFT) across all major frameworks: JAX, PyTorch, and TensorFlow through native Keras 3.0.

Google has been adopting JAX more internally, but for products that aim to increase adoption of GCP, they stay framework-agnostic externally. I am looking forward to MLX support at some point as well.

They provide ready-to-use Colab and Kaggle notebooks, alongside integration with popular tools such as Hugging Face, MaxText, NVIDIA NeMo and TensorRT-LLM, which make it easy to get started with Gemma.

Pre-trained and instruction-tuned Gemma models can run on your laptop, workstation, or Google Cloud with easy deployment on Vertex AI and Google Kubernetes Engine (GKE).

This is probably the most important reason why they open-sourced these models. GCP wants to catch up with Azure and AWS, and one advantage and moat that it arguably has over other vendors is AI and the TPU (Tensor Processing Unit), which we covered extensively in last week's newsletter.

Optimization across multiple AI hardware platforms, including NVIDIA GPUs and Google Cloud TPUs, is also provided out of the box to ensure industry-leading performance.

Their getting started page is very easy to navigate if you want to get started quickly.

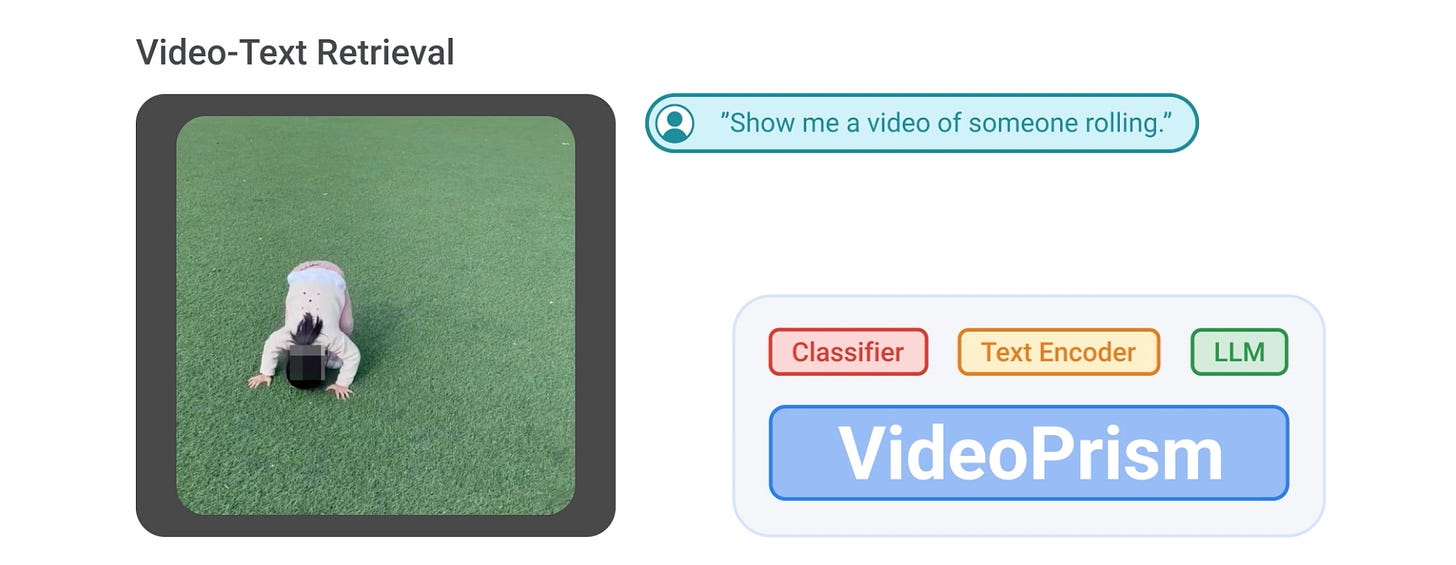

Google wrote a blog post on a new visual encoder for video understanding. A vast amount of video data exists, encompassing varied content from sports highlights and movie trailers to educational videos and home movies. Google also has YouTube videos, which cover much more content beyond these themes, such as music. The question they ask is whether they can build a powerful visual encoder that leverages all of the video they have.

The problem they want to solve is as follows: traditional methods for analyzing video data, such as hand-crafted features and deep learning models trained on specific tasks, are limited in their ability to handle the complexity and diversity of real-world video. Existing models may be good at one specific task, such as video classification or captioning, but they are not general-purpose enough to handle a wide range of tasks. Additionally, these models often require large amounts of labeled training data, which can be expensive and time-consuming to collect.

They introduce VideoPrism, a foundational video encoder that is designed for general-purpose video understanding. VideoPrism is a large, self-supervised model that is trained on a massive dataset of video-text pairs and video clips with noisy or machine-generated text.

Their approach differs from existing methods in that it uses a two-stage training approach that leverages both video-text data and video data with noisy text descriptions. In the first stage, contrastive learning is used to teach the model to match videos with their own descriptions. In the second stage, masked video modeling is used to predict masked patches in a video, and the model is trained to predict both the video-level global embedding and token-wise embeddings. Existing methods, by contrast, typically rely on a single-stage training approach that uses only video-text data.
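To make the first stage more concrete, here is a minimal NumPy sketch of a symmetric contrastive (InfoNCE-style) loss over a batch of paired video and text embeddings. It is not VideoPrism's actual training code: the function name `contrastive_loss`, the temperature value, and the random embeddings are all assumptions chosen for illustration.

```python
import numpy as np

def log_softmax(x, axis):
    # Numerically stable log-softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def contrastive_loss(video_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE loss; row i of each input is assumed to be a matching pair."""
    # L2-normalize so the dot product is a cosine similarity.
    v = video_emb / np.linalg.norm(video_emb, axis=1, keepdims=True)
    t = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = v @ t.T / temperature          # (batch, batch) similarity matrix
    idx = np.arange(len(v))
    # Each video should pick its own text (rows) and vice versa (columns).
    loss_v2t = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_t2v = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_v2t + loss_t2v)

rng = np.random.default_rng(0)
video = rng.normal(size=(4, 8))             # hypothetical video embeddings
# Text embeddings close to their own video give a low loss ...
matched = contrastive_loss(video, video + 0.01 * rng.normal(size=(4, 8)))
# ... while pairing every video with the wrong text gives a high loss.
shuffled = contrastive_loss(video, np.roll(video, 1, axis=0))
print(matched < shuffled)
```

The loss is low when each video is most similar to its own description and high when the pairs are mismatched, which is the signal that pushes matching videos and texts together in embedding space.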

Jack Cook wrote an excellent blog post on Mamba.

He explains the shortcomings of Transformers:

Transformer models are widely used for language modeling tasks.

Their attention mechanism scales quadratically with sequence length, which makes them slow for long sequences.

The slowness of Transformers for long sequences limits their usefulness.
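The quadratic cost is easy to see in a naive single-head attention implementation: the score matrix has one entry per pair of tokens. The sketch below uses plain NumPy with no masking or batching, and the sequence lengths are arbitrary values picked for illustration.

```python
import numpy as np

def attention(q, k, v):
    """Naive single-head attention; returns the output and the (n, n) weight matrix."""
    scores = q @ k.T / np.sqrt(q.shape[-1])       # one score per token pair: (n, n)
    scores -= scores.max(axis=-1, keepdims=True)  # stabilize the softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
d = 16
for n in (128, 256, 512):
    x = rng.normal(size=(n, d))
    out, weights = attention(x, x, x)
    # weights.size == n * n, so doubling n quadruples the work and memory.
    print(n, weights.size)
```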

How Mamba is solving these problems:

Mamba is a new model that uses an SSM architecture for linear-time scaling.

It is based on S4, a state space model, which can be discretized for use with text data.

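S4 can be run in two modes: RNN mode and CNN mode. RNN mode is better for inference while CNN mode is better for training.

Mamba introduces selectivity by making the model parameters dependent on the input. Because the parameters then change from token to token, the CNN mode no longer applies, so Mamba trains with a parallel scan over the recurrence instead.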
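The equivalence of the RNN and CNN modes can be checked numerically for a fixed (non-selective) SSM. The sketch below assumes a diagonal state matrix and zero-order-hold discretization, which is one common choice; the function names and parameter values are hypothetical and not taken from the post.

```python
import numpy as np

def discretize(a, b, delta):
    """Zero-order hold for a diagonal SSM; a and b are (N,) vectors."""
    a_bar = np.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar

def run_recurrent(a_bar, b_bar, c, x):
    """RNN mode: update the hidden state one step at a time."""
    h = np.zeros_like(a_bar)
    ys = []
    for x_t in x:
        h = a_bar * h + b_bar * x_t
        ys.append(c @ h)
    return np.array(ys)

def run_convolutional(a_bar, b_bar, c, x):
    """CNN mode: precompute the kernel K[k] = c . (a_bar**k * b_bar), then convolve."""
    length = len(x)
    powers = a_bar[None, :] ** np.arange(length)[:, None]  # (L, N)
    kernel = powers @ (c * b_bar)                          # (L,)
    return np.convolve(x, kernel)[:length]

rng = np.random.default_rng(0)
n_state, seq_len = 4, 32
a = -np.arange(1.0, n_state + 1)   # stable (negative) diagonal entries
b = rng.normal(size=n_state)
c = rng.normal(size=n_state)
x = rng.normal(size=seq_len)

a_bar, b_bar = discretize(a, b, delta=0.1)
y_rnn = run_recurrent(a_bar, b_bar, c, x)
y_cnn = run_convolutional(a_bar, b_bar, c, x)
print(np.allclose(y_rnn, y_cnn))
```

Both functions compute the same outputs. The recurrent form needs only the previous state at each step, which suits token-by-token generation, while the convolutional form processes the whole sequence at once, which suits training.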

Mamba is a linear-time language model that is reported to outperform Transformers on various tasks. It achieves its speedup through a combination of techniques, including an SSM architecture and recurrent updates. Overall, the post explains how Mamba addresses the long-sequence limitations of Transformers, especially their computational cost when modeling long sequences such as user interactions.
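The following is a heavily simplified sketch of a selective recurrent update, in which the step size and the input and output projections are computed from the current token. It is not the reference Mamba implementation: it omits the parallel scan, gating, and the surrounding block, and every weight name (`W_delta`, `W_B`, `W_C`) is hypothetical.

```python
import numpy as np

def softplus(z):
    return np.logaddexp(0.0, z)

def selective_scan(x, A, W_delta, W_B, W_C):
    """x: (L, D) inputs, A: (D, N) negative state matrix. Returns (L, D) outputs."""
    seq_len, dim = x.shape
    h = np.zeros_like(A)                        # hidden state, (D, N)
    ys = np.empty((seq_len, dim))
    for t in range(seq_len):                    # one pass over the sequence: linear time
        delta = softplus(x[t] @ W_delta)        # (D,) input-dependent step size
        B_t = x[t] @ W_B                        # (N,) input-dependent input projection
        C_t = x[t] @ W_C                        # (N,) input-dependent output projection
        A_bar = np.exp(delta[:, None] * A)      # (D, N) discretized state transition
        B_bar = delta[:, None] * B_t[None, :]   # (D, N) simplified discretization of B
        h = A_bar * h + B_bar * x[t][:, None]   # recurrent update
        ys[t] = h @ C_t
    return ys

rng = np.random.default_rng(0)
seq_len, dim, n_state = 10, 3, 4
x = rng.normal(size=(seq_len, dim))
A = -np.exp(rng.normal(size=(dim, n_state)))    # negative entries keep the state stable
W_delta = rng.normal(size=(dim, dim))
W_B = rng.normal(size=(dim, n_state))
W_C = rng.normal(size=(dim, n_state))

y = selective_scan(x, A, W_delta, W_B, W_C)
print(y.shape)
```

Because the state has a fixed size and each token is visited once, the cost grows linearly with sequence length rather than quadratically.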

Libraries

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

AutoGen enables building next-gen LLM applications based on multi-agent conversations with minimal effort. It simplifies the orchestration, automation, and optimization of a complex LLM workflow. It aims to maximize the performance of LLMs and to overcome their weaknesses.

It supports diverse conversation patterns for complex workflows. With customizable and conversable agents, developers can use AutoGen to build a wide range of conversation patterns concerning conversation autonomy, the number of agents, and agent conversation topology.

It provides a collection of working systems with different complexities. These systems span a wide range of applications from various domains and complexities. This demonstrates how AutoGen can easily support diverse conversation patterns.

AutoGen provides enhanced LLM inference. It offers utilities like API unification and caching, and advanced usage patterns, such as error handling, multi-config inference, context programming, etc.

Microsoft has a good getting-started page.

MLX-LLM provides a number of LLMs that you can run on Apple silicon. It has a very intuitive API for loading models from pre-trained weights, and it also supports creating a chat agent.

DistiLLM is the official PyTorch implementation of "DistiLLM: Towards Streamlined Distillation for Large Language Models". Many of its ideas, as well as its code, build on the paper "MiniLLM: Knowledge Distillation of Large Language Models".

HiddenMambaAttn is a library for looking inside the Mamba layer, an efficient state space model (SSM) that is highly effective in modeling multiple domains, including long-range sequences and images. SSMs are usually viewed as dual models, which train in parallel on the entire sequence using convolutions and deploy in an autoregressive manner. The authors add a third view, in which such models can also be seen as attention-driven. This new perspective enables them to compare the underlying mechanisms to those of the self-attention layers in transformers, and allows them to peer inside the inner workings of the Mamba model with explainability methods.