DataGemma through RIG and RAG

Apple’s On-Device and Server Foundation Models

Articles

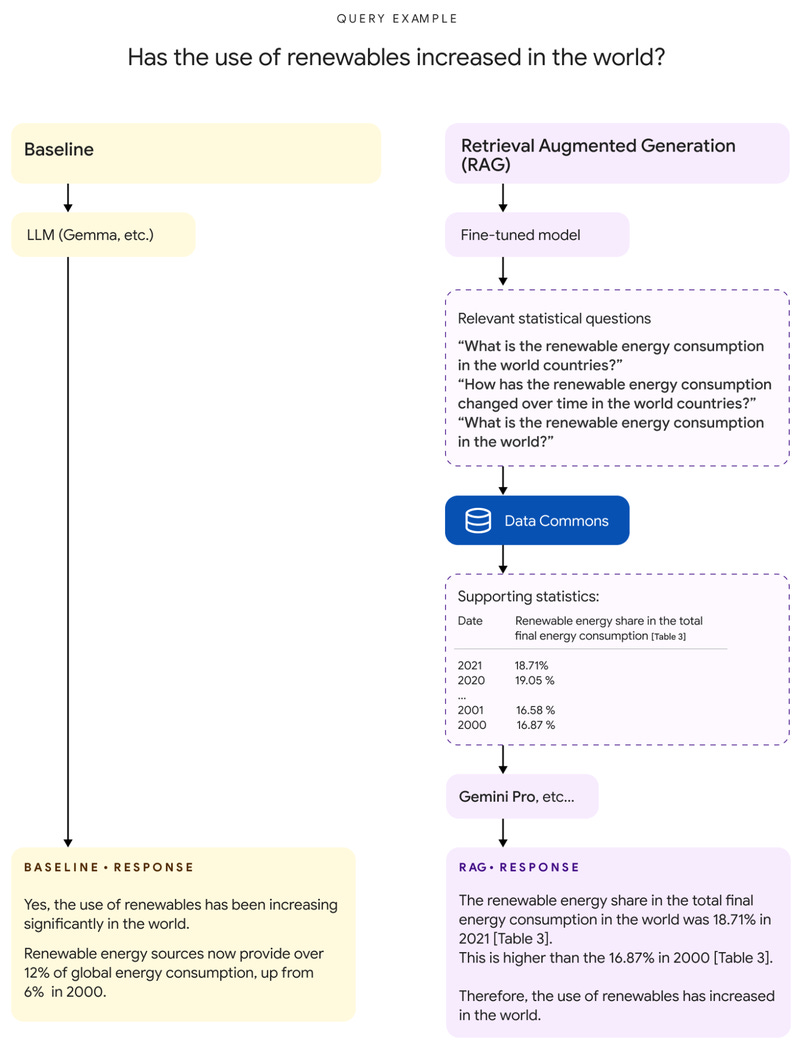

Google wrote an article on DataGemma where they focus on how important the data is in developing LLM; specifically the LLM family of Gemma. Gemma is a family of lightweight, state-of-the-art, open models built from the same research and technology used to create our Gemini models. DataGemma expands the capabilities of the Gemma family by harnessing the knowledge of Data Commons to enhance LLM factuality and reasoning. By leveraging innovative retrieval techniques, DataGemma helps LLMs access and incorporate into their responses data sourced from trusted institutions (including governmental and intergovernmental organizations and NGOs), mitigating the risk of hallucinations and improving the trustworthiness of their outputs.

Main problem that they solve through is the prevention of hallucination of LLMs in a way that they want to make the LLM “grounded” on a factual information as much as possible. This is the main disadvantage as LLMs often struggle with grounding their responses in verifiable facts. This is partly due to the scattered nature of real-world knowledge across various sources with different data formats, schemas, and APIs. The lack of grounding can lead to hallucinations, where the model generates incorrect or misleading information. One can solve this problem through “grounding” LLM into data layer a lot more and much more closer.

Data Commons and DataGemma

Data Commons is Google's publicly available knowledge graph containing over 250 billion global data points across hundreds of thousands of statistical variables.It sources information from trusted organizations like the United Nations, World Health Organization, health ministries, and census bureaus. This vast repository covers a wide range of topics, including economics, climate change, health, and demographics. This is the foundation for the factual data and this will be the source of truth for real-world representation.

DataGemma expands the capabilities of Google's Gemma family of models by leveraging the knowledge within Data Commons.It uses innovative retrieval techniques to help LLMs access and incorporate data from trusted institutions, thereby mitigating the risk of hallucinations and improving the trustworthiness of their outputs.

DataGemma employs two different approaches to connect LLMs with Data Commons:

1. Retrieval Interleaved Generation (RIG)

RIG fine-tunes Gemma 2 to identify statistics within its responses and annotate them with a call to Data Commons.The process works as follows:

A user submits a query to the LLM.

The DataGemma model generates a response, including a natural language query for Data Commons.

Data Commons is queried, and the retrieved data replaces potentially inaccurate numbers in the initial response.

The final response is presented to the user with a link to the source data for transparency and verification.

Advantages:

Works effectively in all contexts without altering the user query.

Maintains a more natural conversation flow, as the retrieval process is integrated into the response generation.

Can potentially provide more precise answers by directly replacing inaccurate information with factual data.

Disadvantages:

The LLM doesn't retain updated data for secondary reasoning.

Fine-tuning requires specialized datasets.

May introduce latency in responses due to the interleaved retrieval process.

The model's performance is heavily dependent on the quality of the fine-tuning process.

Updating the model with new information requires retraining, which can be time-consuming and resource-intensive.

2. Retrieval Augmented Generation (RAG)

RAG retrieves relevant information from Data Commons before the LLM generates text, providing a factual foundation for its response.The process involves:

A user submits a query to the LLM.

The DataGemma model analyzes the query and generates corresponding queries for Data Commons.

Data Commons is queried, and relevant data tables, source information, and links are retrieved.

The retrieved information is added to the original user query, creating an augmented prompt.

A larger LLM (e.g., Gemini 1.5 Pro) uses this augmented prompt to generate a comprehensive and grounded response.

Advantages:

Automatically benefits from ongoing model evolution and improvements in the LLM generating the final response.

Can handle a wider range of queries without requiring specific fine-tuning for each domain.

Provides flexibility in updating the knowledge base without retraining the entire model.

Enhances the accuracy and reliability of generative AI models with knowledge from external sources.

Disadvantages:

Modifying the user's prompt can lead to a less intuitive user experience.

The effectiveness depends on the quality of generated queries to Data Commons.

May require more computational resources due to the need for processing larger context windows.

Can introduce increased latency and computational costs, especially when using rerankers to optimize results.

The quality of responses is highly dependent on the relevance and accuracy of the retrieved information.

This Netflix wrote a blog post discusses their approach to designing recommendation systems that optimize for long-term member satisfaction rather than just short-term engagement.

Main motivation is to create recommendations that not only engage members in the moment but also enhance their long-term satisfaction, leading to increased value from the service and higher retention rates. And reason why rewards are relatively important is due to the fact that traditional recommender systems often focus on short-term metrics like clicks or engagement, which may not fully capture long-term satisfaction which results in retention of the user over the long term.

In order to build such a system, one can consider the following approaches:

Contextual Bandit Framework: Netflix views recommendations as a contextual bandit problem, where the system selects actions (recommendations) based on context (user visit) and receives feedback (user interactions)

Proxy Rewards: Instead of directly optimizing for retention, which has several drawbacks, Netflix uses proxy reward functions that align with long-term satisfaction while being sensitive to individual recommendations

Beyond Click-Through Rate (CTR): While CTR is a common baseline, Netflix looks beyond simple interactions to better align with long-term satisfaction. They consider various user actions and their implications on satisfaction

Reward Engineering: This iterative process involves refining the proxy reward function to better align with long-term member satisfaction. It includes hypothesis formation, defining new proxy rewards, training new bandit policies, and A/B testing

Handling Delayed Feedback: To address the challenge of delayed or missing user feedback, Netflix predicts missing feedback using observed data and other relevant information. This allows them to update the bandit policy more quickly while still incorporating longer-term feedback

Two Types of ML Models:

Delayed Feedback Prediction Models: Used offline to predict final feedback based on observed feedbacks.

Bandit Policy Models: Used online to generate real-time recommendations

Addressing Online-Offline Metric Disparity: When improved models show better offline metrics but flat or negative online metrics, Netflix further refines the proxy reward definition to better align with the improved model

They finally decided to use a combination of complex proxy rewards, delayed feedback prediction, and a two-model approach was based on this iterative process of testing and refinement. This method allowed Netflix to balance immediate user engagement with long-term satisfaction, while also addressing the practical challenges of delayed feedback and the need for timely policy updates.

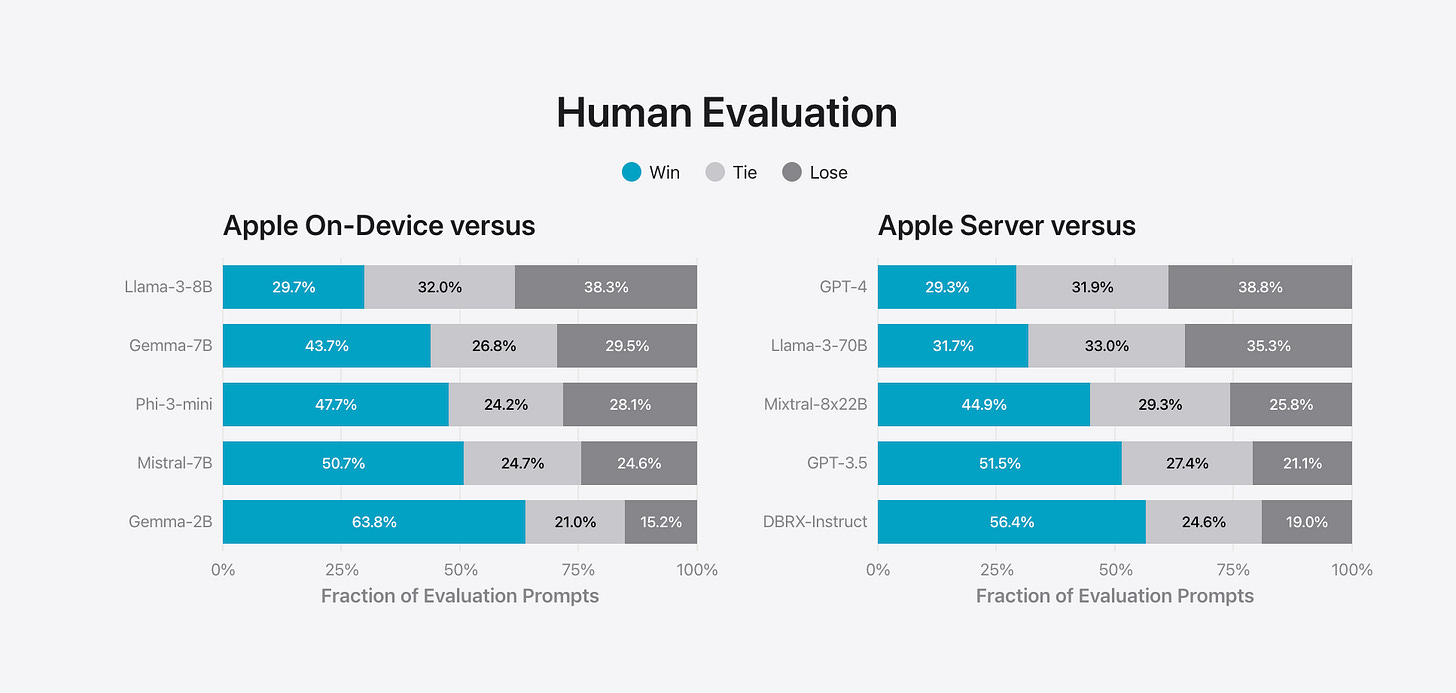

Apple introduced their Foundation Model stack in a blog post. Their foundation models for on-device and server-based AI, which form the core of Apple Intelligence, a personal intelligence system integrated into iOS 18, iPadOS 18, and macOS Sequoia.

It is very itneresting that they bet on the XLA and Jax over other types of training frameworks. I was specifically expecting MLX, but there was no MLX mentioned, they go all-in for the JAX it sounds like from the blog post.

Model Architecture:

On-device model: ~3 billion parameters

Larger server-based model running on Apple silicon servers

Both models are part of a larger family of generative models, including coding and diffusion models

Grouped-query-attention for both models

Shared input/output vocab embedding tables

Low-bit palletization for on-device inference (3.7 bits-per-weight average)

LoRA adapters for maintaining model quality

Activation and embedding quantization

Efficient Key-Value (KV) cache update on neural engines

Training Framework:

Apple chose JAX and XLA for their AXLearn framework because:It allows for high efficiency and scalability across various hardware platforms (TPUs, cloud GPUs, on-premise GPUs)

Enables combination of data parallelism, tensor parallelism, sequence parallelism, and Fully Sharded Data Parallel (FSDP) for multi-dimensional scaling

Model Adaptation:

Use of adapters for fine-tuning specific tasks

Dynamic loading and swapping of adapters for on-the-fly specialization

Efficient infrastructure for rapid retraining and deployment of adapters

Performance in Mobile Space:

On iPhone 15 Pro: 0.6ms per prompt token for first-token latency

Generation rate of 30 tokens per second

Further improvements expected with token speculation techniques

Value in Mobile and Server Spaces:

On-device model outperforms larger models (e.g., Mistral-7B, Gemma-7B) in human evaluations

Server model compares favorably to larger models (e.g., DBRX-Instruct, Mixtral-8x22B) while being highly efficient

Both models show strong performance in instruction-following and safety benchmarks

Adapters allow for task-specific optimizations while maintaining a small memory footprint

Libraries

AXLearn is a library built on top of JAX and XLA to support the development of large-scale deep learning models.

AXLearn takes an object-oriented approach to the software engineering challenges that arise from building, iterating, and maintaining models. The configuration system of the library lets users compose models from reusable building blocks and integrate with other libraries such as Flax and Hugging Face transformers.

AXLearn is built to scale. It supports the training of models with up to hundreds of billions of parameters across thousands of accelerators at high utilization. It is also designed to run on public clouds and provides tools to deploy and manage jobs and data. Built on top of GSPMD, AXLearn adopts a global computation paradigm to allow users to describe computation on a virtual global computer rather than on a per-accelerator basis.

AXLearn supports a wide range of applications, including natural language processing, computer vision, and speech recognition and contains baseline configurations for training state-of-the-art models.

Datasets For Recommender Systems of public data sources for Recommender Systems (RS).

All of these recommendation datasets can convert to the atomic files defined in RecBole, which is a unified, comprehensive and efficient recommendation library.

After converting to the atomic files, you can use RecBole to test the performance of different recommender models on these datasets easily. For more information about RecBole, please refer to RecBole.

Kotaemon is an open-source clean & customizable RAG UI for chatting with your documents. Built with both end users and developers in mind.

Microsoft News Dataset is a large-Scale English dataset for news recommendation research. This repository provides the script to evaluate models and a sample submission for MIND News Recommendation Challenge.