Compositional Object Classifier

Dynamic Neural Networks Meet Computer Vision

This week, we have an amazing post from Facebook on how product matching works in an e-commerce application. Be sure to read it even if you are not working in the e-commerce domain.

I work in the model optimization domain: quantization, pruning, and the other techniques that make serving deep learning models more efficient in terms of compute and memory. There is a workshop in this week’s newsletter (Dynamic Neural Networks Meet Computer Vision) which I highly recommend if you are working in this domain.

Distill recently announced that it is taking a hiatus. The announcement is not part of the newsletter, as it is not directly related to ML, deep learning or MLOps. However, I want to highlight this publication, as it paved the way for high-quality articles, especially in the area of understanding neural networks. It is a very big loss.

Bugra

Articles

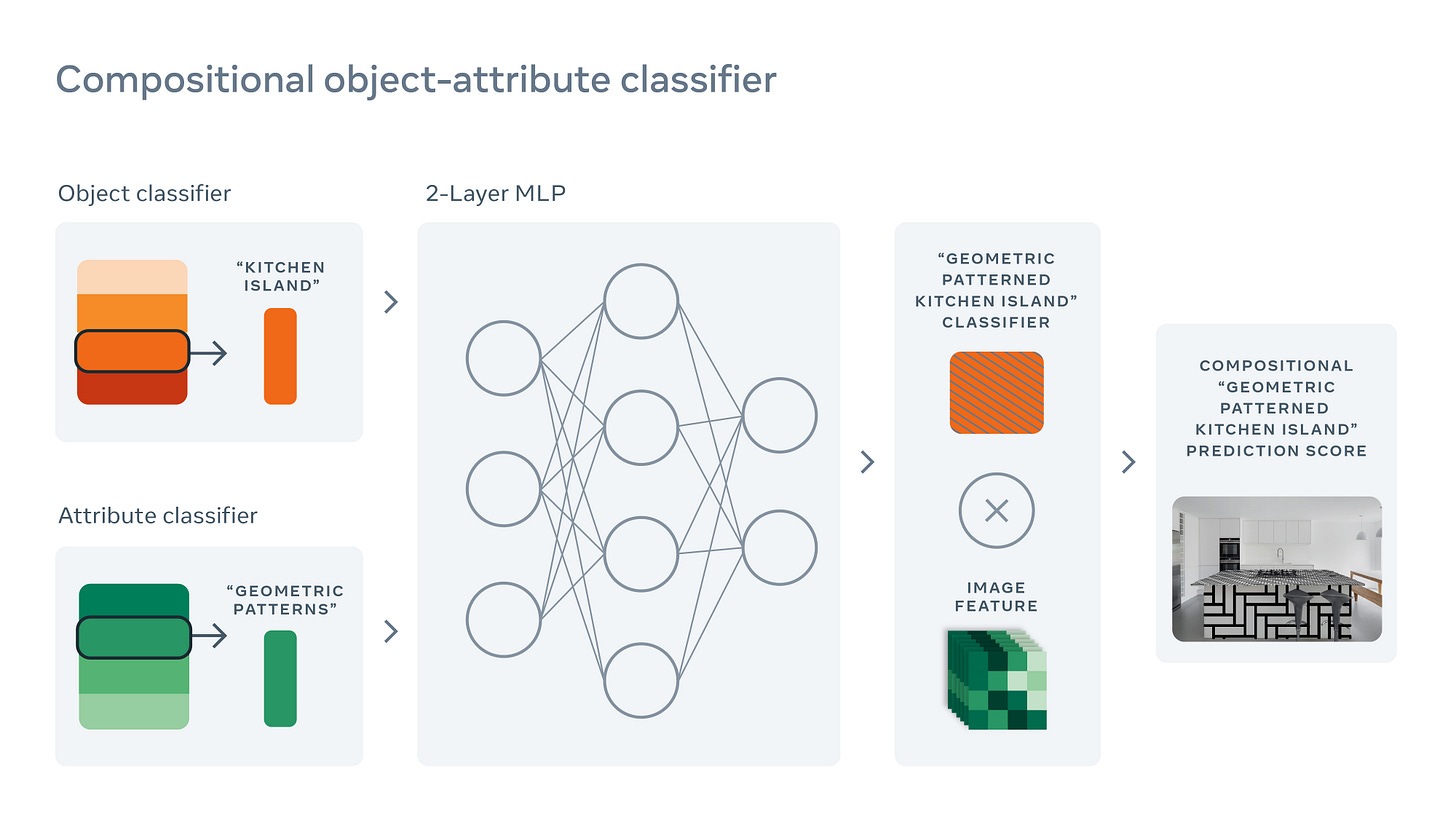

Facebook wrote about how they are building a system that can match any image to product images. To do that, they are building a compositional system that combines object classification and attribute classification results into a single prediction. By composing objects with attributes, the system can make predictions about objects that it has never seen before.
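To make the idea of composing two classifiers concrete, here is a minimal sketch. It is not Facebook's implementation: the function `compose_predictions`, the independence assumption (multiplying the two scores) and the example probabilities are all assumptions made for illustration.

```python
from itertools import product


def compose_predictions(object_probs, attribute_probs, top_k=3):
    """Rank (attribute, object) pairs by the product of their scores.

    Assumes the object and attribute classifiers are independent, which is
    a simplification made for this sketch.
    """
    scored = [
        ((attr, obj), attr_p * obj_p)
        for (attr, attr_p), (obj, obj_p) in product(
            attribute_probs.items(), object_probs.items()
        )
    ]
    # Highest combined score first.
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical classifier outputs for a single image.
object_probs = {"sofa": 0.7, "chair": 0.2, "table": 0.1}
attribute_probs = {"blue": 0.6, "leather": 0.3, "wooden": 0.1}

top = compose_predictions(object_probs, attribute_probs)
for (attr, obj), score in top:
    print(f"{attr} {obj}: {score:.2f}")
```

Because every attribute is paired with every object, a combination such as "blue sofa" can be ranked even if no training image was ever labeled with that exact pair.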

TensorFlow published a new library that leverages quantum computation, and the post explains the domains in which quantum computing can be useful and can do better than traditional machine learning libraries. They have a tutorial on how you can use the library on the Fashion MNIST dataset. There is a Nature paper if you want to learn more about this in detail.

Google published the performance of select models on the MLPerf benchmark in the following post. On certain models, such as BERT and DLRM, Google’s TPUs do better than Nvidia’s A100 GPUs.

Papers

I have written about “unlearning” in this newsletter in a comprehensive way. With new regulations (like GDPR), it has recently become a topic of interest in research, and a lot of good papers are coming out of academia as a result. HedgeCut: Maintaining Randomised Trees for Low-Latency Machine Unlearning proposes a low-latency unlearning method based on an ensemble of randomized decision trees. The library is available on GitHub and is written in Rust. There is also a presentation that explains the core concepts of this paper:

Lightweight Inspection of Data Preprocessing in Native Machine Learning Pipelines introduces a way to inspect data pipelines and machine learning classifiers, looking at the data distribution and at how data preprocessing could impact the results of the classifier. It tries to be method agnostic in order to find various bugs and issues in data preprocessing and machine learning pipelines. mlinspect is available here, and there is a nice notebook that gives an overview of the package and how it can be used with pandas and scikit-learn.

Break-It-Fix-It: Unsupervised Learning for Program Repair tackles how to build a system that learns the syntactic errors of code (“bad code”) in order to produce “fixed code”. It shows how to build a multi-step system: first, train a “breaker” that emulates real programming errors; then, use it to compile a set of “real” looking bad examples; finally, train a machine learning model on those examples to produce good code. The code is available on GitHub.

Revisiting the Primacy of English in Zero-shot Cross-lingual Transfer answers the question: “Is English the best language to use for zero-shot cross-lingual transfer?” The answer is a resounding no. The paper finds that Russian and German are very well-suited languages to use for zero-shot learning.

Mandoline: Model Evaluation under Distribution Shift answers the question: “Can we somehow use our knowledge of the data distribution shift between training and serving to yield more accurate model evaluation results?” The answer is yes, and the paper goes into various techniques such as “slicing” the data and weighting. The code for the paper is available on GitHub.
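The general idea of slicing and weighting can be sketched in a few lines. This is a simplified illustration and not the Mandoline algorithm or its API: it assumes every example belongs to exactly one slice, and the helper names (`slice_weights`, `reweighted_accuracy`) and the toy data are hypothetical.

```python
from collections import Counter


def slice_weights(source_slices, target_slices):
    """Weight each slice by (target frequency) / (source frequency)."""
    src = Counter(source_slices)
    tgt = Counter(target_slices)
    n_src, n_tgt = len(source_slices), len(target_slices)
    return {s: (tgt[s] / n_tgt) / (src[s] / n_src) for s in src}


def reweighted_accuracy(correct, source_slices, target_slices):
    """Estimate accuracy on the target distribution from labeled source data."""
    weights = slice_weights(source_slices, target_slices)
    w = [weights[s] for s in source_slices]
    return sum(wi * ci for wi, ci in zip(w, correct)) / sum(w)


# Toy labeled evaluation set: mostly "day" images, which the model handles well.
source_slices = ["day"] * 8 + ["night"] * 2
correct = [1] * 8 + [1, 0]  # 1 = model prediction was right

# Unlabeled serving traffic: half of the images are taken at night.
target_slices = ["day"] * 5 + ["night"] * 5

naive = sum(correct) / len(correct)
adjusted = reweighted_accuracy(correct, source_slices, target_slices)
print(f"naive accuracy: {naive:.2f}, reweighted estimate: {adjusted:.2f}")
```

The naive estimate looks optimistic because the slice where the model struggles is underrepresented in the labeled data; reweighting by slice frequency corrects for that without needing labels on the serving side.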

Libraries

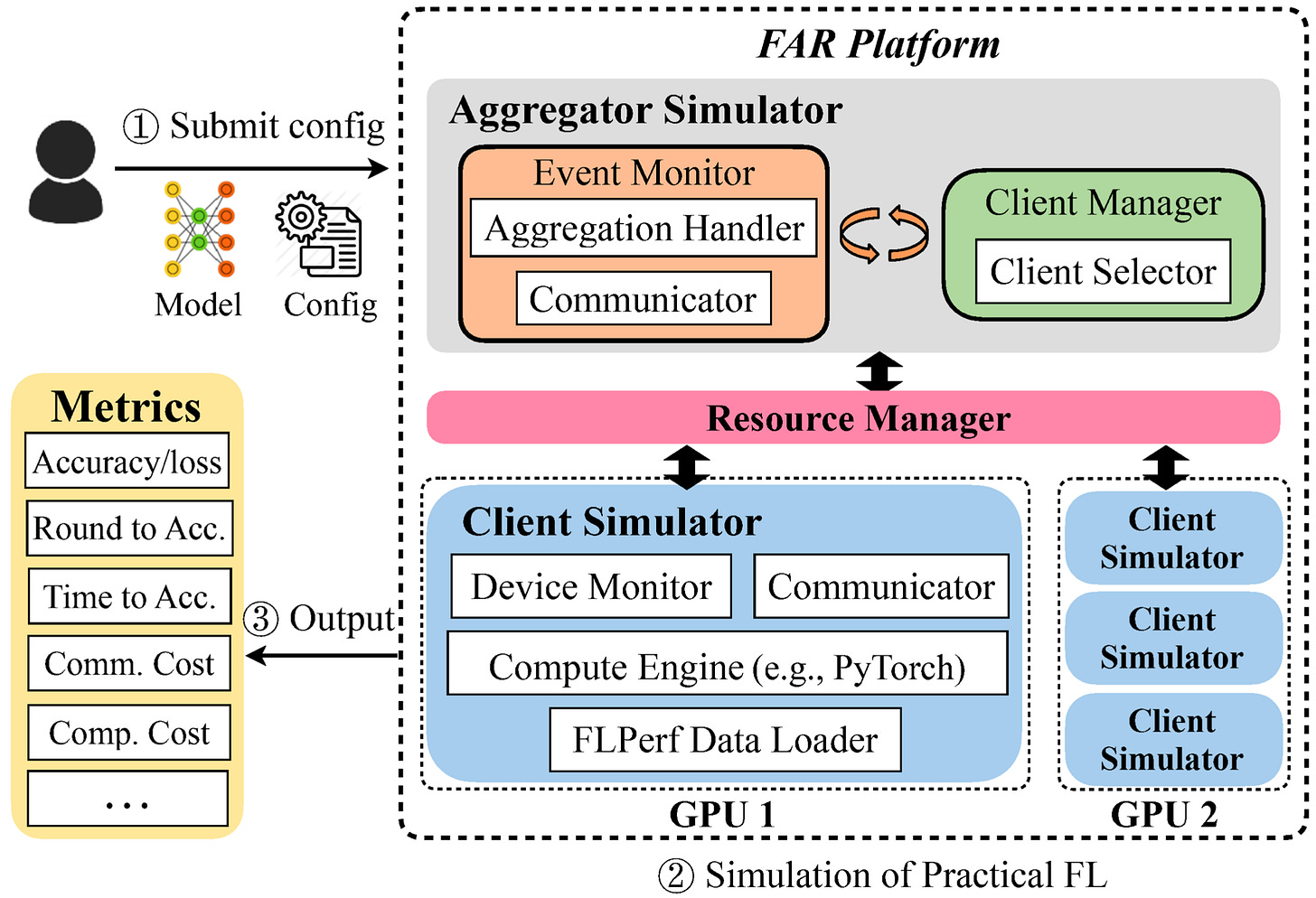

FedScale is a Python library that provides a platform to register various Federated Learning models to promote reproducibility and benchmarking between different models. The paper goes into more detail on the platform.

PyTorch Geometric is an extension library built on top of PyTorch that makes geometric deep learning methods available. By open-sourcing this library, Twitter popularizes geometric deep learning methods.

Torch Liberator is a library to package and statically extract PyTorch models into a “deployable” state.

MMF is a multi-modal framework for building PyTorch models that target audio, vision and other modalities. It is modular and composable, which makes it easy to build deep learning models and iterate on them.

NeMo is a conversational AI toolkit that helps you build the ML models behind conversational AI products.

MeerKat is a new data library that allows you to be flexible in terms of what you store while still providing the usability of a pandas DataFrame.

Flower is a unified federated learning framework that supports multiple backends (PyTorch, TensorFlow, MXNet). A recent conference went into the details of the library here.

Workshops/Tutorials

Dynamic Neural Networks Meet Computer Vision is a workshop that covers dynamic neural networks and their applications in computer vision. In particular, Not Every Image Region is Worth the Same Compute: Spatial Dynamic Neural Networks is great for motivating why dynamic neural networks are needed, and better, for various computer vision applications. This area (dynamic computation and selection of neural networks) could be yet another family of model optimization techniques alongside quantization, pruning and knowledge distillation.
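One simple form of dynamic computation is an early-exit cascade: run a cheap model first and only fall back to an expensive one when the cheap model is not confident. The sketch below illustrates that idea only; it is not the spatial method from the talk, and `dynamic_predict` along with both stub stages are hypothetical stand-ins for real networks.

```python
def dynamic_predict(x, stages, threshold=0.9):
    """Run stages in order and stop at the first confident prediction.

    Each stage is a callable returning (label, confidence). `stages` must
    contain at least one stage. Returns (label, confidence, stages_used).
    """
    for used, stage in enumerate(stages, start=1):
        label, confidence = stage(x)
        if confidence >= threshold:
            break  # Confident enough: skip the remaining, costlier stages.
    return label, confidence, used


# Hypothetical stand-ins for a small and a large network.
def cheap_stage(x):
    # Confident only on "easy" inputs.
    return ("cat", 0.95) if x["easy"] else ("cat", 0.55)


def expensive_stage(x):
    return (x["true_label"], 0.99)


stages = [cheap_stage, expensive_stage]

easy_result = dynamic_predict({"easy": True, "true_label": "cat"}, stages)
hard_result = dynamic_predict({"easy": False, "true_label": "dog"}, stages)
print(easy_result)
print(hard_result)
```

Easy inputs pay only for the cheap stage, so the average compute per input drops whenever a meaningful share of the traffic is easy.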

TensorFlow has a nice video that walks through the TF-GAN library.

Books

Fair ML Book covers the topic of fairness. They have a good tutorial sequence as well: