Google Research has introduced a novel framework called Chain-of-Agents (CoA) to address the challenges of long-context tasks in large language models (LLMs). It leverages multi-agent collaboration to improve performance on tasks requiring extensive input processing.

LLMs have demonstrated strong capabilities across tasks such as reasoning, knowledge retrieval, and generation. However, they struggle with long inputs because of limits on input length, which hinders their performance on tasks like long-document summarization, question answering, and code completion.

Previous approaches to this problem have focused on two directions:

Input reduction: This method shortens the input context before feeding it to the LLM. Retrieval-Augmented Generation (RAG) is one example: it breaks the input into chunks and retrieves the chunks most relevant to the query based on embedding similarity.

Window extension: This approach extends the context window of LLMs through fine-tuning, allowing them to consume longer inputs. For instance, Gemini can process up to 2M tokens per input.

However, these methods have their drawbacks. Input reduction techniques may provide incomplete context due to low retrieval accuracy, while window extension approaches struggle with focusing on relevant information and suffer from ineffective context utilization as the input length increases.
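To make the input-reduction idea concrete, here is a minimal sketch of chunk retrieval by similarity. It is not taken from any RAG library: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and all function names and the chunk size are assumptions chosen for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk_words(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(query: str, document: str, chunk_size: int = 8, top_k: int = 1) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    chunks = chunk_words(document, chunk_size)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]
```

Only the retrieved chunks would be passed to the LLM, which is why a poor similarity ranking can leave the model without the context it needs.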

Chain-of-Agents (CoA)

CoA is designed to address these challenges by mimicking the way humans interleave reading and processing of long contexts under limited working memory constraints. It has two main stages:

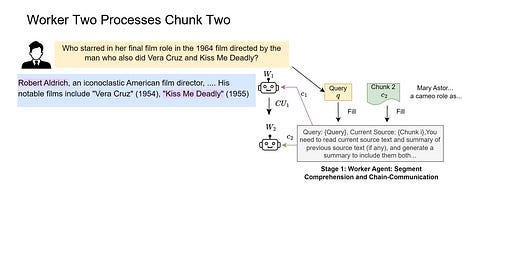

Stage 1: Worker Agent - Segment Comprehension and Chain-Communication

In this stage, a series of worker agents process different chunks of the long context sequentially. Each worker agent:

Receives a portion of the source text

Processes the query

Follows instructions for a specific task

Analyzes the message passed from the previous agent

Outputs a message for the next worker in the chain

This unidirectional communication chain allows for efficient information extraction and comprehension across the entire input.

Stage 2: Manager Agent - Information Integration and Response Generation

After the worker agents have processed the input, the manager agent:

Receives the accumulated knowledge from the last worker

Synthesizes the relevant information

Generates the final answer based on the query and instructions
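The two stages above can be sketched in a few lines of Python. This is an illustrative outline under stated assumptions, not the researchers' implementation: `llm` is a hypothetical callable that takes a prompt and returns text, the prompt wording is invented, and chunking by word count stands in for chunking by tokens.

```python
from typing import Callable, List

# Hypothetical interface: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


def split_into_chunks(text: str, max_words: int) -> List[str]:
    """Split the source into consecutive chunks that fit one worker's window."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def chain_of_agents(llm: LLM, source: str, query: str, instruction: str,
                    max_words: int = 200) -> str:
    """Run the worker chain over the source, then let the manager answer."""
    message = ""  # message handed from one worker to the next
    # Stage 1: each worker sees one chunk, the query, and the previous message.
    for chunk in split_into_chunks(source, max_words):
        worker_prompt = (
            f"{instruction}\n"
            f"Query: {query}\n"
            f"Previous message: {message}\n"
            f"Text chunk: {chunk}\n"
            "Update the message with evidence relevant to the query."
        )
        message = llm(worker_prompt)
    # Stage 2: the manager sees only the last worker's message, not the source.
    manager_prompt = (
        f"{instruction}\n"
        f"Query: {query}\n"
        f"Accumulated message: {message}\n"
        "Write the final answer."
    )
    return llm(manager_prompt)
```

Because every prompt contains at most one chunk plus a short message, no single call needs a context window larger than the chunk size, regardless of how long the source is.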

Key Advantages

Training-free: CoA does not require additional training of the underlying LLMs.

Task-agnostic: The framework can be applied to various long-context tasks.

Highly interpretable: The step-by-step process allows for easy understanding of how the final answer is derived.

Cost-effective: CoA reduces time complexity from $n^2$ to $nk$, where $n$ is the number of input tokens and $k$ is the context limit of the LLM.

Computational Efficiency

CoA reduces the time complexity of processing long inputs from $n^2$ to $nk$, where:

$n$ is the number of input tokens

$k$ is the context limit of the LLM

This improvement in efficiency makes CoA more cost-effective for processing long contexts compared to full-context approaches.
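A rough way to see where the saving comes from: if attention cost grows quadratically with prompt length, one full-context call over n tokens costs about n squared, while CoA makes about n/k worker calls of about k squared each, for a total near n times k. The sketch below encodes that simplified model. It is an assumption-laden estimate that ignores constant factors, the short messages passed between workers, and the manager call; the function names are hypothetical.

```python
import math


def full_context_cost(n: int) -> int:
    """Quadratic attention cost of reading all n tokens in one call."""
    return n * n


def coa_cost(n: int, k: int) -> int:
    """Cost of ceil(n / k) worker calls, each quadratic in the window size k."""
    num_workers = math.ceil(n / k)
    return num_workers * k * k


n, k = 400_000, 8_000
ratio = full_context_cost(n) / coa_cost(n, k)
print(f"full context: {full_context_cost(n):.2e}, CoA: {coa_cost(n, k):.2e}, ratio: {ratio:.0f}x")
```

Under this model the ratio is simply n/k, so the advantage grows linearly with input length for a fixed window.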

Multi-hop Reasoning Capabilities

CoA demonstrated superior performance in multi-hop reasoning tasks compared to RAG. In an example from the HotpotQA dataset, CoA's collaborative approach allowed for:

The first agent to explore related topics without knowing the answer

The second agent to broaden the topic scope by incorporating new information

The third agent to discover the answer by synthesizing information from earlier agents and new data

This process highlights CoA's ability to facilitate complex reasoning across long context tasks.

Comparison with Long Context LLMs

When compared to long context LLMs on the NarrativeQA and BookSum datasets, CoA (8k) significantly outperformed both RAG (8k) and Full-Context (200k) baselines. This was true even when using Claude 3 models (Haiku, Sonnet, and Opus) with a context limit of 200k tokens.

Performance on Longer Inputs

The researchers compared CoA and Full-Context approaches using Claude 3 on the BookSum dataset with varying input lengths. Key findings include:

CoA outperformed the Full-Context (200k) baseline across all tested input lengths.

CoA's performance improved as the input length increased.

The improvement over Full-Context (200k) became more significant with longer inputs, reaching around 100% when the length exceeded 400k tokens.

These results demonstrate that CoA can enhance LLM performance even for models with very long context window limits and provides greater performance gains for longer inputs.

Libraries

Julep is a platform for creating AI agents that remember past interactions and can perform complex tasks. It offers long-term memory and manages multi-step processes.

Julep enables the creation of multi-step tasks incorporating decision-making, loops, parallel processing, and integration with numerous external tools and APIs.

While many AI applications are limited to simple, linear chains of prompts and API calls with minimal branching, Julep is built to handle more complex scenarios which:

have multiple steps,

make decisions based on model outputs,

spawn parallel branches,

use lots of tools, and

run for a long time.

Transformer Lab allows you to:

💕 One-click Download Hundreds of Popular Models:

DeepSeek, Llama3, Qwen, Phi4, Gemma, Mistral, Mixtral, Command-R, and dozens more

⬇ Download any LLM from Huggingface

🎶 Finetune / Train Across Different Hardware

Finetune using MLX on Apple Silicon

Finetune using Huggingface on GPU

⚖️ RLHF and Preference Optimization

DPO

ORPO

SIMPO

Reward Modeling

💻 Work with LLMs Across Operating Systems:

Windows App

MacOS App

Linux

💬 Chat with Models

Chat

Completions

Preset (Templated) Prompts

Chat History

Tweak generation parameters

Batched Inference

Tool Use / Function Calling (in alpha)

🚂 Use Different Inference Engines

MLX on Apple Silicon

Huggingface Transformers

vLLM

Llama CPP

🧑‍🎓 Evaluate models

📖 RAG (Retrieval Augmented Generation)

Drag and Drop File UI

Works on Apple MLX, Transformers, and other engines

📓 Build Datasets for Training

Pull from hundreds of common datasets available on HuggingFace

Provide your own dataset using drag and drop

🔢 Calculate Embeddings

💁 Full REST API

🌩 Run in the Cloud

You can run the user interface on your desktop/laptop while the engine runs on a remote or cloud machine

Or you can run everything locally on a single machine

🔀 Convert Models Across Platforms

Convert from/to Huggingface, MLX, GGUF

🔌 Plugin Support

Easily pull from a library of existing plugins

Write your own plugins to extend functionality

🧑‍💻 Embedded Monaco Code Editor

Edit plugins and view what's happening behind the scenes

📝 Prompt Editing

Easily edit System Messages or Prompt Templates

📜 Inference Logs

While doing inference or RAG, view a log of the raw queries sent to the LLM

And you can do the above, all through a simple cross-platform GUI.

Oumi is a fully open-source platform that streamlines the entire lifecycle of foundation models - from data preparation and training to evaluation and deployment. Whether you're developing on a laptop, launching large scale experiments on a cluster, or deploying models in production, Oumi provides the tools and workflows you need.

With Oumi, you can:

🚀 Train and fine-tune models from 10M to 405B parameters using state-of-the-art techniques (SFT, LoRA, QLoRA, DPO, and more)

🤖 Work with both text and multimodal models (Llama, DeepSeek, Qwen, Phi, and others)

🔄 Synthesize and curate training data with LLM judges

⚡️ Deploy models efficiently with popular inference engines (vLLM, SGLang)

📊 Evaluate models comprehensively across standard benchmarks

🌎 Run anywhere - from laptops to clusters to clouds (AWS, Azure, GCP, Lambda, and more)

🔌 Integrate with both open models and commercial APIs (OpenAI, Anthropic, Vertex AI, Together, Parasail, ...)

All with one consistent API, production-grade reliability, and all the flexibility you need for research.

RQ-VAE Recommender is a PyTorch implementation of a generative retrieval model using semantic IDs based on RQ-VAE, from "Recommender Systems with Generative Retrieval". The model has two stages:

Items in the corpus are mapped to a tuple of semantic IDs by training an RQ-VAE (figure below).

Sequences of semantic IDs are tokenized using a frozen RQ-VAE, and a transformer-based model is trained on these sequences to generate the next IDs in the sequence.

Datasets: Amazon Reviews (Beauty, Sports, Toys), MovieLens 1M, MovieLens 32M

RQ-VAE PyTorch model implementation + KMeans initialization + RQ-VAE training script.

Decoder-only retrieval model + training code with semantic ID user sequences from a randomly initialized or pretrained RQ-VAE.

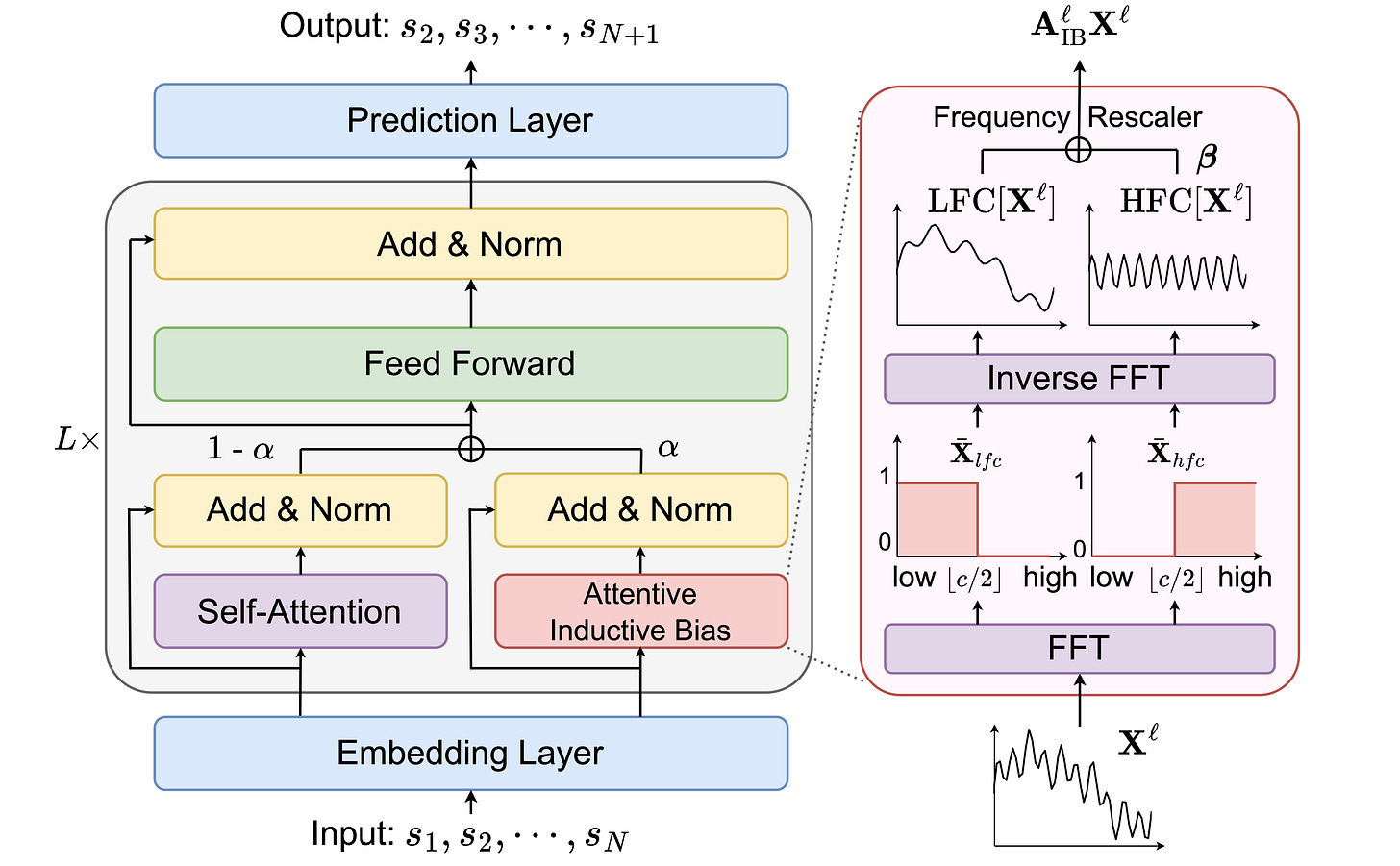
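The first stage, mapping an item to a tuple of semantic IDs, rests on residual quantization: each level picks the nearest codebook vector and passes the leftover residual to the next level. The sketch below shows only that lookup step in plain Python. It is not code from the repository: the function names are hypothetical, the codebooks are hand-written toy values, and a real RQ-VAE would learn both an encoder and the codebooks during training.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Codebook = Sequence[Vector]


def nearest(codebook: Codebook, vec: Vector) -> int:
    """Index of the codebook vector closest to vec in squared Euclidean distance."""
    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i], vec))


def semantic_id(embedding: Vector, codebooks: Sequence[Codebook]) -> Tuple[int, ...]:
    """Quantize an item embedding level by level into a tuple of code indices."""
    residual: List[float] = list(embedding)
    ids: List[int] = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        ids.append(idx)
        # Subtract the chosen code so the next level encodes what is left over.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tuple(ids)


# Toy example: two levels, two codes each (values chosen by hand).
toy_codebooks = [
    [[0.0, 0.0], [10.0, 10.0]],  # coarse level
    [[0.0, 0.0], [1.0, -1.0]],   # fine level
]
print(semantic_id([11.0, 9.0], toy_codebooks))  # (1, 1)
```

Items whose embeddings are close tend to share the leading indices of their tuples, which is what lets the second-stage transformer treat the IDs as a meaningful token vocabulary.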

Beyond Self-Attention for Sequential Recommendation (BSARec) leverages the Fourier transform to strike a balance between an injected inductive bias and self-attention.

Below the Fold

Dario Amodei (co-founder and CEO of Anthropic) wrote an interesting piece on DeepSeek and what it means, from a US point of view, for chip export controls and their geopolitical significance.