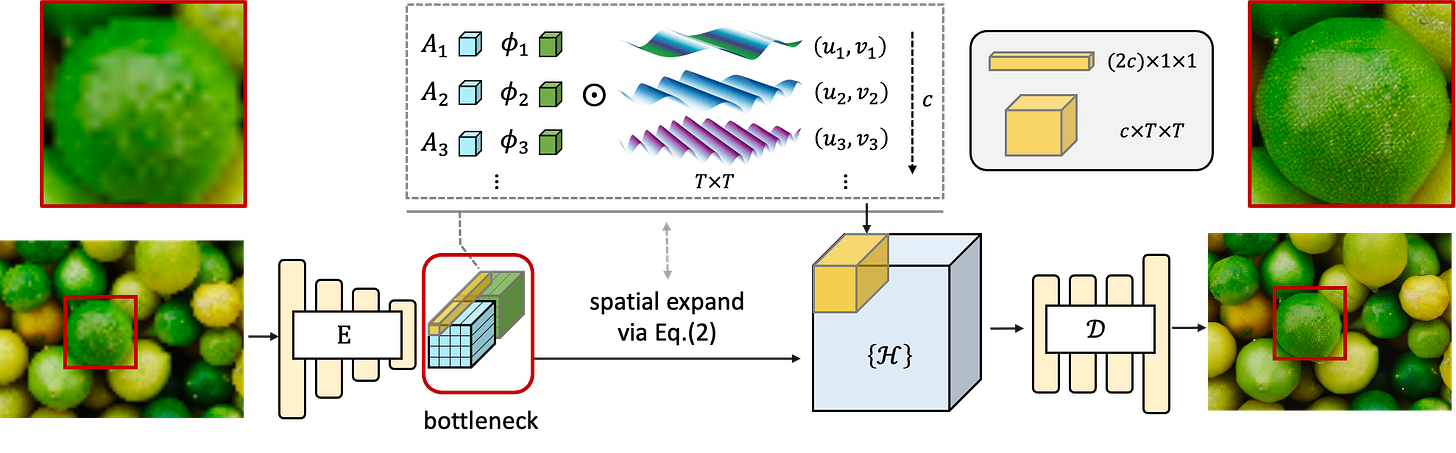

NVIDIA introduces CosAE (Cosine Autoencoder), a novel, generic Autoencoder that seamlessly leverages the classic Fourier series with a feed-forward neural network. CosAE represents an input image as a series of 2D Cosine time series, each defined by a tuple of learnable frequency and Fourier coefficients. This method stands in contrast to a conventional Autoencoder that often sacrifices detail in their reduced-resolution bottleneck latent spaces. CosAE, however, encodes frequency coefficients, i.e., the amplitudes and phases, in its bottleneck. This encoding enables extreme spatial compression, e.g., 64x downsampled feature maps in the bottleneck, without losing detail upon decoding.

I think there is a strong argument on why this type of architecture can be better than traditional vanilla AE:

Fourier coefficients exploit information that occurs in the images in a more straightforward manner.

Fourier coefficients can provide compression capability in a much better than the traditional vanilla auto encoder architectures.

There is also strong argument on why this type of architecture can be worse than traditional vanilla AE:

Fourier coefficients would be better for natural images and images that are generally good for visual elements of human eye and from the real world, but may not work as well for synthetically generated images.

While Fourier coefficients can provide better compression capabilities, it might also prevent other types of relationships that a traditional autoencoder might uncover.

Attention Wasn’t All We needed

Stephen Diehl wrote an excellent article around attention and subsequent developments around attention and how crucial they are for optimizing the architecture and hence the cheeky title “Attention Wasn't All We Needed”.

"Attention Is All You Need" paper established the foundation for transformer architectures, but subsequent research has revealed significant opportunities for optimization and enhancement of this architecture both from efficiency and better accuracy point of view.

Dielh looks at three critical attention mechanism innovations that address fundamental limitations in the original transformer design: 1. Group Query Attention (GQA), 2. Multi-head Latent Attention, and 3. Flash Attention.

These mechanisms tackle distinct but somehow interconnected challenges including memory bandwidth bottlenecks during inference, quadratic computational complexity for long sequences, and inefficient GPU memory utilization patterns.

Group Query Attention: Optimizing Memory Bandwidth for Inference

Group Query Attention(GQA) represents a shift in how attention mechanisms balance computational efficiency with representational capacity during inference. It specifically targets the key-value (KV) cache bottleneck that emerges during autoregressive generation, where previously computed keys and values must be stored and repeatedly accessed for each new token prediction. The main idea of GQA is that the computational bottleneck and memory footprint in multi-head attention are heavily influenced by the size of the K and V projections and their corresponding caches, rather than the query projections themselves.

Traditional multi-head attention maintains N_h distinct heads for queries, keys, and values, resulting in substantial memory requirements during inference when the KV cache must store all previous computations. GQA introduces an asymmetric design where N_h query heads operate with only N_kv key-value heads, where N_kv < N_h and N_h is typically a multiple of N_kv. This architectural modification directly addresses the observation that key and value representations can be effectively shared across multiple query heads without significant performance degradation.

The mathematical foundation of GQA builds upon standard attention mechanisms while introducing parameter sharing. Given an input sequence representation X ∈ R^(L×d_model), where L represents sequence length and d_model denotes embedding dimension, the mechanism first projects X into queries, keys, and values using distinct linear transformations: Q = XW_Q, K = XW_K, and V = XW_V. The distinction lies in the dimensionality of these projection matrices, where W_Q ∈ R^(d_model×(N_h d_k)) maintains full query capacity while W_K, W_V ∈ R^(d_model×(N_kv d_k)) operate with reduced key-value dimensions.

The grouping mechanism divides the N_h query heads into N_kv groups, with each group containing g = N_h/N_kv query heads that share the same key and value representations. For the i-th query head, the corresponding key and value heads are determined by K_⌈i/g⌉ and V_⌈i/g⌉, where the ceiling function ensures proper group assignment. The attention computation for each head follows the standard scaled dot-product attention formula, but with shared key-value pairs:

Through this, we can keep the full expressiveness of query representations while dramatically reducing the memory requirements for key-value storage.

Implementation Efficiency and Practical Considerations

The practical implementation of GQA leverages efficient tensor operations to minimize computational overhead while maximizing memory savings. The technique employs repetition and interleaving operations to align the reduced set of key-value heads with the full set of query heads before performing batched matrix multiplication for attention scores. This approach, implemented through operations like repeat_interleave, ensures that the computational pattern remains highly parallelizable and compatible with existing GPU kernels.

The memory bandwidth benefits of GQA become particularly important during autoregressive generation, where the KV cache size directly impacts inference speed. By reducing N_kv, GQA significantly decreases the memory bandwidth required to load the KV cache at each decoding step, which represents the primary performance bottleneck in large model deployment. Empirical evaluations demonstrate that GQA achieves favorable trade-offs, maintaining most of the representational quality of full multi-head attention while delivering substantial speedups and memory savings.

Multi-query attention (MQA) represents an extreme case of GQA where N_kv = 1, providing maximum memory efficiency at the potential cost of some representational capacity. The flexible framework allows practitioners to tune the N_h/N_kv ratio based on specific deployment constraints and performance requirements.

Multi-head Latent Attention: Breaking Quadratic Complexity Barriers

Computational Complexity Revolution

Multi-head Latent Attention addresses one of the most fundamental limitations of transformer architectures: the quadratic computational complexity O(L²) with respect to sequence length L that emerges from the need for every input element to attend to every other element. This quadratic scaling becomes prohibitive for applications involving very long sequences, such as document processing, high-resolution image analysis, or extended audio processing. The mechanism introduces a sophisticated intermediary approach that decouples direct sequence interactions through a fixed-size set of learnable latent vectors.

The core architectural change replacing direct self-attention with a two-stage cross-attention process mediated by N_latents learnable vectors. This design assumes that essential information from long input sequences can be effectively compressed and summarized through these latent representations, thus maintaining representational power while achieving linear scaling with sequence length. The approach transforms the computational complexity from O(L²) to O(L × N_latents), enabling transformer-like architectures to handle previously intractable sequence lengths when N_latents << L.

Two-Stage Attention Mechanism Architecture

The mathematical formulation of Multi-head Latent Attention operates through two distinct cross-attention stages, each serving a specific information aggregation purpose. Let the input sequence be represented as X ∈ R^(L×d) and the learnable latent array as L ∈ R^(N_latents×d), where both undergo projection into query, key, and value representations using either shared or separate projection matrices.

The first cross-attention stage focuses on information extraction from the input sequence to the latent space. Latent queries Q_L attend to input keys K_X and aggregate information from input values V_X through the standard attention mechanism. This operation enables the latent vectors to selectively extract and compress relevant information from the entire input sequence, effectively creating a condensed representation that captures global context.

The second cross-attention stage reverses the information flow, allowing input queries Q_X to attend to the updated latent representations. Input queries attend to latent keys K_L and aggregate information from the processed latent values H_L. This bidirectional information flow ensures that each input element can access the globally-relevant information captured by the latent vectors while maintaining the ability to produce element-specific outputs.

Applications and Representational Trade-offs

Multi-head Latent Attention is very important in domains where traditional self-attention becomes computationally infeasible due to sequence length constraints. Applications include processing long documents where maintaining global coherence is crucial, high-resolution image processing where pixel patches are treated as sequence elements, extended audio signal analysis, and video processing where temporal dependencies span many frames. The fixed number of latent vectors provides a scalable approach that remains computationally tractable regardless of input sequence length. For recommendation systems, you can imagine that for each activity, user interactions, you want to preserve a strong understanding and connection between them even though they might occur not in close temporal proximity of each other.

The learnable latent vectors, initialized randomly and updated through standard backpropagation, adapt during training to function as a compressed memory bank relevant to the specific task. This adaptive behavior allows the mechanism to automatically discover the most relevant global patterns and dependencies for the target application. However, the approach does introduce an information bottleneck that may limit fine-grained local interactions compared to full self-attention, representing a trade-off between computational efficiency and representational completeness.

Despite this limitation, Multi-head Latent Attention is very efficient at capturing global context, making it particularly valuable for tasks where long-range dependencies are more important than detailed local interactions. The mechanism has proven effective across various modalities, enabling transformer-like architectures to be applied to domains previously constrained by computational limitations.

Flash Attention: Revolutionizing Memory Hierarchy Utilization

Memory Bandwidth Optimization Strategy

Flash Attention, particularly in its latest FlashAttention-3 implementation, changes how attention mechanisms interact with modern GPU memory hierarchies. The standard attention computation requires materializing and storing the full attention score matrix S = QK^T, where Q, K ∈ R^(N×d) represent query and key matrices for a sequence of length N. This conventional approach necessitates storing the complete N × N matrix S, resulting in O(N²) memory complexity that becomes prohibitive for long sequences due to the limitations of GPU high bandwidth memory.

The approach of Flash Attention circumvents this memory bottleneck by avoiding the materialization of the full attention matrix in the GPU's slower high bandwidth memory. Instead, the mechanism leverages tiling and recomputation techniques that process attention computations in smaller blocks specifically sized to fit within the much faster on-chip SRAM. This architectural awareness represents a crucial shift from algorithm-centric optimization to hardware-aware design that maximizes the efficiency of modern accelerator architectures.

Tiling and Recomputation Framework

The core computational strategy of Flash Attention involves decomposing the Q, K, and V matrices into manageable blocks that can be processed within the constraints of on-chip memory. This tiling approach ensures that each computational step operates on data that can be efficiently cached in SRAM, minimizing the expensive memory transfers to and from high bandwidth memory that typically dominate attention computation costs.

The recomputation aspect of Flash Attention represents a sophisticated trade-off between memory usage and computational redundancy. Rather than storing intermediate attention scores that would exceed SRAM capacity, the mechanism strategically recomputes certain values when needed, leveraging the superior computational throughput of modern GPUs compared to their memory bandwidth limitations. This approach aligns with the evolving hardware landscape where computational resources often exceed memory bandwidth capacity.

Convergence of Optimization Strategies

The three attention mechanisms outlined above represent complementary approaches to addressing distinct bottlenecks in transformer scaling and deployment.

Group Query Attention targets inference-time memory bandwidth,

Multi-head Latent Attention addresses training-time computational complexity for long sequences,

Flash Attention optimizes hardware utilization patterns.

This convergence suggests that future transformer architectures will likely integrate multiple optimization strategies rather than relying on single-point solutions.

Libraries

SGLang is a fast serving framework for large language models and vision language models. It makes your interaction with models faster and more controllable by co-designing the backend runtime and frontend language. The core features include:

Fast Backend Runtime: Provides efficient serving with RadixAttention for prefix caching, zero-overhead CPU scheduler, continuous batching, token attention (paged attention), speculative decoding, tensor parallelism, chunked prefill, structured outputs, quantization (FP8/INT4/AWQ/GPTQ), and multi-lora batching.

Flexible Frontend Language: Offers an intuitive interface for programming LLM applications, including chained generation calls, advanced prompting, control flow, multi-modal inputs, parallelism, and external interactions.

Extensive Model Support: Supports a wide range of generative models (Llama, Gemma, Mistral, Qwen, DeepSeek, LLaVA, etc.), embedding models (e5-mistral, gte, mcdse) and reward models (Skywork), with easy extensibility for integrating new models.

Active Community: SGLang is open-source and backed by an active community with industry adoption.

BLIP3-o is a unified multimodal model that combines the reasoning and instruction following strength of autoregressive models with the generative power of diffusion models. Unlike prior works that diffuse VAE features or raw pixels, BLIP3-o diffuses semantically rich CLIP image features, enabling a powerful and efficient architecture for both image understanding and generation.

Fully Open-Source:

Pretraining Data: 24 Million Detailed Captions, 5 Million Short Captions

Instruction Tuning Data: 60 k GPT-4o Distilled Instruction Tuning Data

Unified Architecture: for both image understanding and generation.

CLIP Feature Diffusion: Directly diffuses semantic vision features for stronger alignment and performance.

State-of-the-art performance: across a wide range of image understanding and generation benchmarks.

CHOP is an optimization library based on PyTorch, with applications to adversarial examples and structured neural network training.

Below The Fold

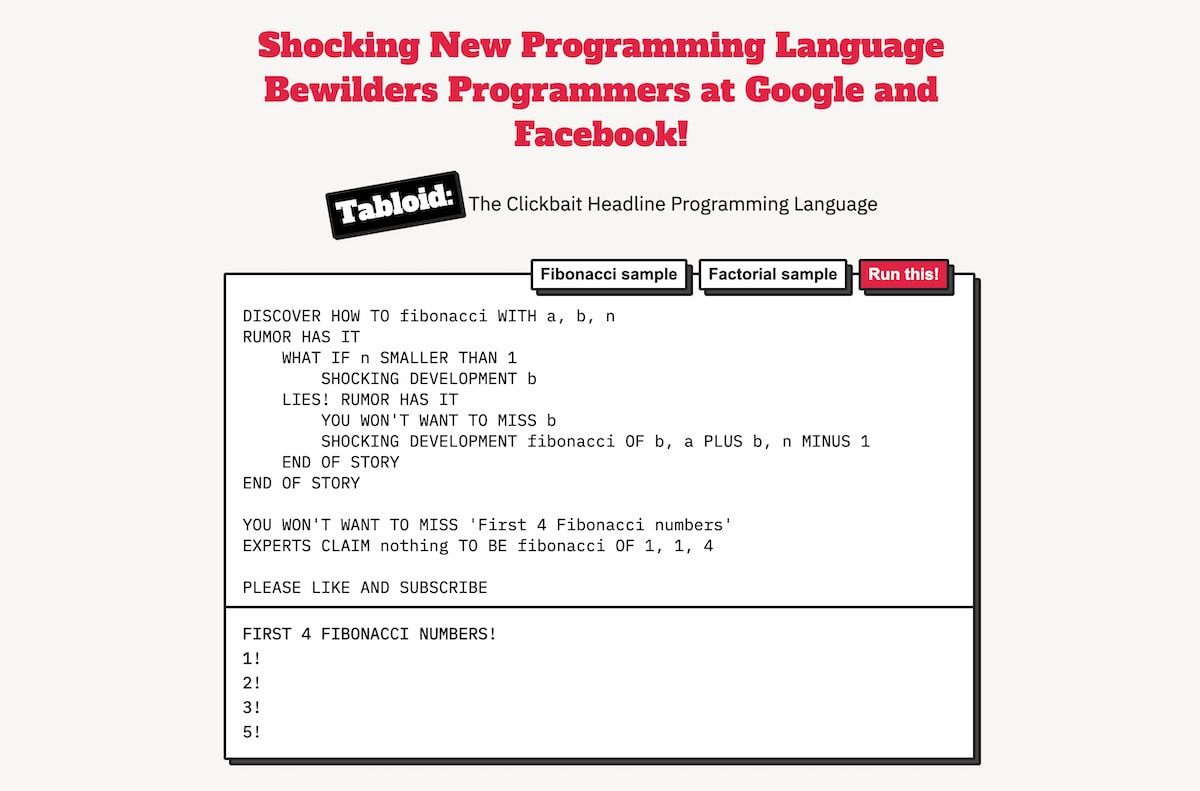

Tabloid is as minimal but Turing complete programming language inspired, nay, supercharged by clickbait headlines that rule the Internet today. You can try Tabloid on the Tabloid website.

Mermaid is a JavaScript-based diagramming and charting tool that uses Markdown-inspired text definitions and a renderer to create and modify complex diagrams. The main purpose of Mermaid is to help documentation catch up with development.

Querybook is a Big Data IDE that allows you to discover, create, and share data analyses, queries, and tables.

Features

📚 Organize analyses with rich text, queries, and charts

✏️ Compose queries with autocompletion and hovering tooltip

📈 Use scheduling + charting in DataDocs to build dashboards

🙌 Live query collaborations with others

📝 Add additional documentation to your tables

🧮 Get lineage, sample queries, frequent user, search ranking based on past query runs