Alibaba Foundation Models: QWEN Series

Do you want to chat with a codebase? Sage has you covered!

Articles

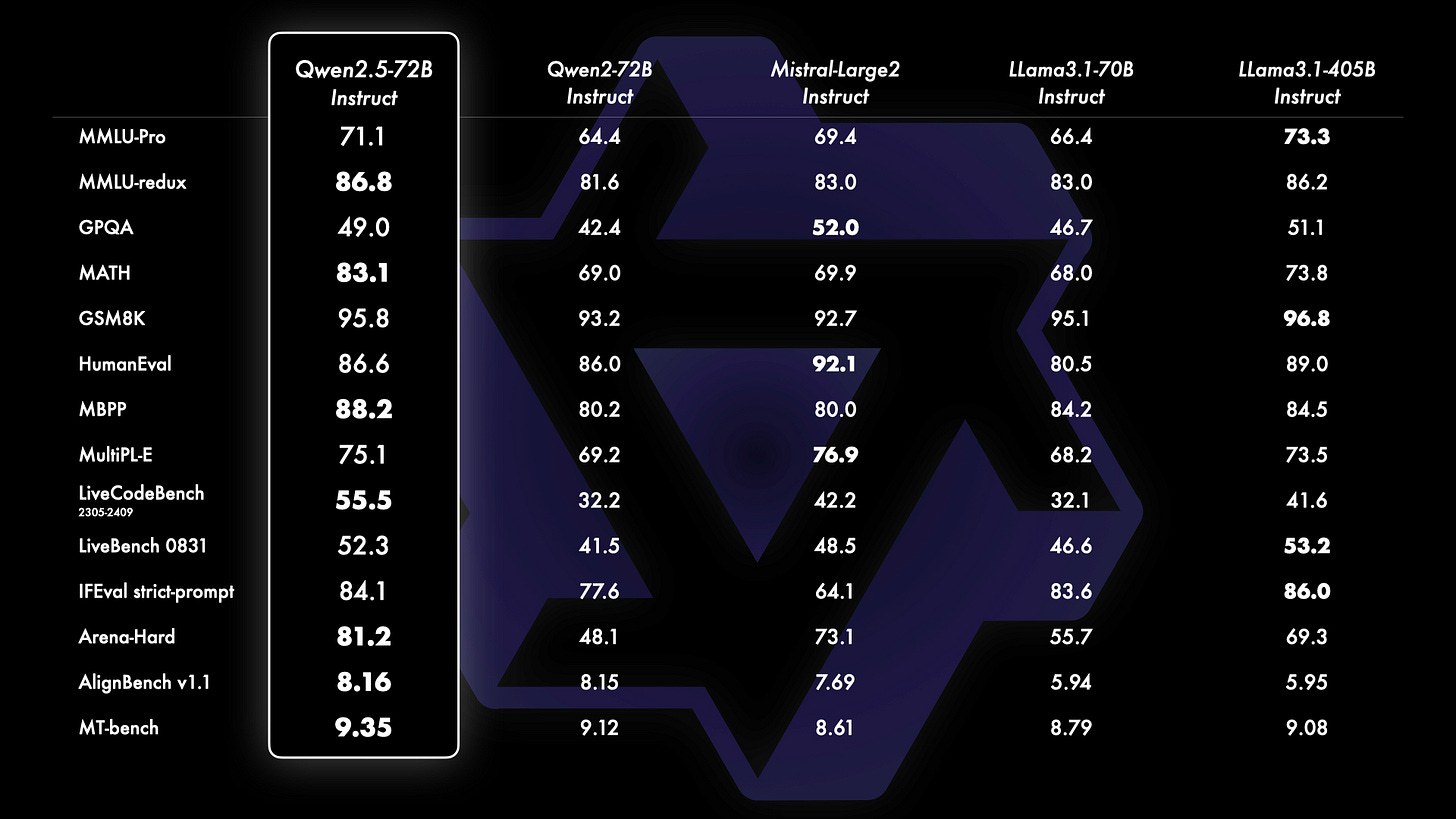

Alibaba announced its foundation model series, Qwen, in a blog post. This release, version 2.5, brings the following improvements over Qwen2:

Significantly more knowledge, and greatly improved capabilities in coding and mathematics, thanks to our specialized expert models in these domains.

Significant improvements in instruction following, generating long texts (over 8K tokens), understanding structured data (e.g., tables), and generating structured outputs, especially JSON. More resilient to the diversity of system prompts, enhancing role-play implementation and condition-setting for chatbots.

Long-context support of up to 128K tokens, with generation of up to 8K tokens.

Multilingual support for over 29 languages, including Chinese, English, French, Spanish, Portuguese, German, Italian, Russian, Japanese, Korean, Vietnamese, Thai, Arabic, and more.

Qwen2.5 comes with specialized models for coding, Qwen2.5-Coder, and mathematics, Qwen2.5-Math. All open-weight models are dense, decoder-only language models, available in various sizes, including:

Qwen2.5: 0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B

Qwen2.5-Coder: 1.5B and 7B, with 32B on the way

Qwen2.5-Math: 1.5B, 7B, and 72B

Separate blog posts explain each of these models in more detail: Qwen LLM, Qwen Coder, and Qwen Math.

More details about model parameters, sizes, and architecture:

The model cards are available on Hugging Face, where the models can also be downloaded and used.
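A minimal sketch of chatting with a Qwen2.5 instruct checkpoint through the Hugging Face `transformers` library, assuming `transformers` and `torch` are installed. The model id `Qwen/Qwen2.5-7B-Instruct` follows the release's Hugging Face naming; the 128K-context and 8K-generation limits are the figures from the announcement, but smaller checkpoints and default configs may use a shorter window, so check the card of the model you load. The `clamp_new_tokens` helper is a hypothetical addition for illustration.

```python
# Sketch: chat with a Qwen2.5 instruct model via Hugging Face transformers.
# Assumption: limits below are the announced "128K context / 8K generation";
# the actual window depends on the checkpoint and its config.
MAX_CONTEXT_TOKENS = 131_072  # 128K
MAX_NEW_TOKENS = 8_192        # 8K


def clamp_new_tokens(
    prompt_tokens: int,
    requested: int,
    context_limit: int = MAX_CONTEXT_TOKENS,
    generation_limit: int = MAX_NEW_TOKENS,
) -> int:
    """Hypothetical helper: cap the output budget so prompt + output fit."""
    if prompt_tokens >= context_limit:
        raise ValueError("prompt alone exceeds the context window")
    room = context_limit - prompt_tokens
    return max(0, min(requested, generation_limit, room))


def chat(prompt: str, model_id: str = "Qwen/Qwen2.5-7B-Instruct") -> str:
    # Imported lazily so the helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto")

    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt},
    ]
    # Render the conversation with the model's own chat template.
    text = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt")
    prompt_len = inputs.input_ids.shape[1]
    output = model.generate(
        **inputs, max_new_tokens=clamp_new_tokens(prompt_len, 512)
    )
    # Keep only the newly generated tokens, then decode them.
    return tokenizer.decode(output[0][prompt_len:], skip_special_tokens=True)
```

Calling `chat("Write a one-line Python function that reverses a string.")` downloads the weights on first use. Because the prompt format comes from the tokenizer's chat template, the same code should work for other Qwen2.5 instruct sizes.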

Airbnb has developed an internal platform called Sandcastle that enables data scientists, engineers, and product managers to quickly create and share data/AI-powered web applications within the company. This platform addresses several challenges in bringing data and AI ideas to life in an interactive format that resonates with design-focused leadership.

The main problem it solves is prototyping and product iteration, especially in the incubation stage, where it guides designers and product engineers through the “what is possible” phase. I think this approach is very interesting, as it provides a playground for people who are not familiar with the models, or with ML in general, to build products on top of these capabilities. The demo mainly focuses on LLMs and their capabilities, but I can imagine this approach applying to all ML-based products.

Pain Points that Sandcastle solves

Demonstrating ideas interactively to non-technical stakeholders

Enabling data scientists to create web applications without extensive frontend development knowledge

Packaging and sharing applications for easy reproduction

Managing infrastructure, networking, and authentication

Creating shareable "handles" for internal viral spread

Sandcastle brings together three key components:

Onebrain: Airbnb's packaging framework for data science and prototyping code

kube-gen: Infrastructure for generated Kubernetes configuration

OneTouch: Infrastructure layer for dynamically scaled Kubernetes clusters

This combination allows team members to go from idea to live internal app in less than an hour.

Onebrain

Onebrain solves the challenge of packaging code reproducibly. It uses a project file (onebrain.yml) that includes metadata, entry points, and environment specifications. Developers can use the "brain run" command for interactive development, and the system integrates with Airbnb's continuous integration for easy publishing and deployment.
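To make the idea of a project file with metadata, entry points, and an environment spec concrete, here is a hypothetical sketch. It models onebrain.yml as the Python dict a YAML loader would return; every key name, the `validate`/`resolve` functions, and the example project are assumptions for illustration, since Airbnb's actual schema is internal.

```python
# Hypothetical Onebrain-style project spec, shown as the dict a YAML loader
# would return for onebrain.yml. All key names are illustrative assumptions.
import shlex

project = {
    "name": "listing-summary-demo",      # metadata
    "version": "0.1.0",
    "entry_points": {                    # named commands the project exposes
        "app": "python app.py --port {port}",
        "train": "python train.py --epochs {epochs}",
    },
    "environment": {                     # what a reproducible env would pin
        "python": "3.10",
        "dependencies": ["pandas", "streamlit"],
    },
}

REQUIRED_KEYS = ("name", "version", "entry_points", "environment")


def validate(spec: dict) -> None:
    """Fail fast if a section the packaging step relies on is missing."""
    missing = [k for k in REQUIRED_KEYS if k not in spec]
    if missing:
        raise ValueError(f"project file missing keys: {missing}")


def resolve(spec: dict, entry: str, **params: str) -> list[str]:
    """Turn a named entry point plus parameters into an argv list, roughly
    what a run command would need in order to launch it."""
    validate(spec)
    template = spec["entry_points"][entry]
    return shlex.split(template.format(**params))
```

For example, `resolve(project, "app", port="8080")` yields `["python", "app.py", "--port", "8080"]`. Keeping entry points declarative is what lets the same file drive both local interactive runs and CI publishing.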

kube-gen

kube-gen simplifies cloud infrastructure configuration for data scientists. It generates most of the necessary service configuration files, leaving developers to write only a simple container configuration file. This process results in a live app with an easily shareable URL within 10-15 minutes of configuration.
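A hypothetical sketch of the kube-gen idea: the developer writes only a small container spec, and a generator expands it into the standard Kubernetes objects. The input keys, defaults, and the `generate_manifests` name are assumptions; the output follows the public `apps/v1` Deployment and `v1` Service shapes, not kube-gen's actual templates.

```python
# Hypothetical generator: expand a minimal container spec into Kubernetes
# Deployment and Service manifests (as plain dicts).


def generate_manifests(container: dict) -> list[dict]:
    name = container["name"]
    port = container.get("port", 8080)   # assumed default
    labels = {"app": name}               # ties the Service to the pods

    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": container.get("replicas", 1),
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": container["image"],
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "selector": labels,
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return [deployment, service]


# The only file the developer would write (hypothetical format).
spec = {"name": "sandcastle-demo", "image": "registry.example/demo:1.0", "port": 8501}
manifests = generate_manifests(spec)
```

Serializing `manifests` with PyYAML's `yaml.safe_dump_all(manifests)` would produce multi-document YAML suitable for `kubectl apply -f`. Ingress, DNS, and the authentication layer the article mentions are left out of this sketch.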

As a result of Sandcastle, Airbnb achieved the following outcomes:

Rapid deployment and iteration of new ideas, especially in data and ML spaces

Direct iteration on interactive prototypes by data scientists and PMs without lengthy engineering cycles

Development of over 175 live prototypes in the last year, with 6 used for high-impact cases

Visits by over 3,500 unique internal users across 69,000 distinct active days

A cultural shift from using decks/docs to live prototypes

Common Crawl published a new dataset on its website.

The data was crawled between October 3rd and October 16th, and contains 2.49 billion web pages (or 365 TiB of uncompressed content). Page captures are from 47.5 million hosts or 38.3 million registered domains and include 1.03 billion new URLs, not visited in any of our prior crawls.
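A quick back-of-the-envelope reading of these statistics, assuming TiB means 2^40 bytes and "billion"/"million" are decimal. The derived averages are simple ratios, not figures Common Crawl reports.

```python
# Derived ratios from the crawl statistics (assumes 1 TiB = 2**40 bytes).
TIB = 2**40

pages = 2.49e9
uncompressed_bytes = 365 * TIB
hosts = 47.5e6
domains = 38.3e6
new_urls = 1.03e9

avg_page_kib = uncompressed_bytes / pages / 1024  # about 157 KiB per capture
pages_per_host = pages / hosts                    # about 52
pages_per_domain = pages / domains                # about 65
new_url_share = new_urls / pages                  # about 41%
```

So a typical capture is on the order of 150 KiB uncompressed, and roughly four in ten URLs in this crawl had never been fetched before.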

Libraries

MUSE is a Python library for multilingual word embeddings, whose goal is to provide the community with:

state-of-the-art multilingual word embeddings (fastText embeddings aligned in a common space)

large-scale high-quality bilingual dictionaries for training and evaluation

MUSE includes two methods: a supervised one that uses a bilingual dictionary or identical character strings, and an unsupervised one that does not use any parallel data (see Word Translation without Parallel Data for more details).
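The supervised method rests on orthogonal Procrustes: given source vectors and their dictionary translations, find the rotation that best maps one onto the other, which has a closed-form SVD solution. The NumPy sketch below uses synthetic data with a hidden rotation in place of real fastText vectors and a dictionary; `procrustes` is an illustrative name, not MUSE's API.

```python
# Orthogonal Procrustes: given paired rows X (source) and Y (target), find the
# orthogonal W minimizing ||X W^T - Y||_F. Closed form: W = U V^T, where
# U S V^T is the SVD of Y^T X.
import numpy as np


def procrustes(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """Return the orthogonal matrix W that best maps rows of X onto rows of Y."""
    U, _, Vt = np.linalg.svd(Y.T @ X)
    return U @ Vt


rng = np.random.default_rng(0)
X = rng.standard_normal((1000, 50))                  # "source language" vectors
Q, _ = np.linalg.qr(rng.standard_normal((50, 50)))   # hidden true rotation
Y = X @ Q.T                                          # "target language" vectors
W = procrustes(X, Y)                                 # should recover Q
```

Constraining W to be orthogonal preserves distances and angles in the source space, which is why it generalizes beyond the dictionary pairs it was fit on.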

MMLU-Pro is an enhanced benchmark designed to evaluate language understanding models across broader and more challenging tasks. Building on the Massive Multitask Language Understanding (MMLU) dataset, MMLU-Pro integrates more challenging, reasoning-focused questions and increases the answer choices per question from four to ten, significantly raising the difficulty and reducing the chance of success through random guessing. MMLU-Pro comprises over 12,000 rigorously curated questions from academic exams and textbooks, spanning 14 diverse domains including Biology, Business, Chemistry, Computer Science, Economics, Engineering, Health, History, Law, Math, Philosophy, Physics, Psychology, and Others.
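The effect of going from four to ten options is easy to quantify: a uniformly random guesser's expected accuracy is 1/k, so the chance baseline drops from 25% to 10%. The scorer below is a hypothetical minimal one for letter answers, not the official MMLU-Pro evaluation harness.

```python
# Random-guess baseline and a minimal letter-answer scorer (illustrative only).


def random_guess_accuracy(num_choices: int) -> float:
    """Expected accuracy of picking one of num_choices options uniformly."""
    return 1.0 / num_choices


def accuracy(predictions: list[str], answers: list[str]) -> float:
    """Fraction of questions whose predicted letter matches the answer key."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must align")
    correct = sum(p.strip().upper() == a for p, a in zip(predictions, answers))
    return correct / len(answers)


baseline_mmlu = random_guess_accuracy(4)       # 0.25
baseline_mmlu_pro = random_guess_accuracy(10)  # 0.1
```

A model scoring 30% looks only modestly above chance on a four-option test but well above chance on a ten-option one, which is part of why the wider answer set makes the benchmark more discriminative.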

Faiss is a library for efficient similarity search and clustering of dense vectors. It contains algorithms that search in sets of vectors of any size, up to ones that possibly do not fit in RAM. It also contains supporting code for evaluation and parameter tuning. Faiss is written in C++ with complete wrappers for Python/numpy. Some of the most useful algorithms are implemented on the GPU. It is developed primarily at Meta's Fundamental AI Research group.
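For intuition about what Faiss's simplest index does, here is exact (brute-force) k-nearest-neighbor search written out in NumPy. It mirrors the semantics of `IndexFlatL2`, which returns squared L2 distances and indices of the nearest database vectors; Faiss's approximate indexes trade some of this exactness for speed and memory. The function name and random data are illustrative.

```python
# Exact k-NN under squared L2 distance, the computation IndexFlatL2 performs.
import numpy as np


def knn_l2(xb: np.ndarray, xq: np.ndarray, k: int):
    """Return (D, I): squared distances and indices of the k nearest database
    rows for each query row, nearest first."""
    # ||q - b||^2 = ||q||^2 - 2 q.b + ||b||^2, computed for all pairs at once.
    d2 = (
        (xq**2).sum(axis=1, keepdims=True)
        - 2.0 * xq @ xb.T
        + (xb**2).sum(axis=1)
    )
    d2 = np.maximum(d2, 0.0)  # clip tiny negatives caused by rounding
    idx = np.argpartition(d2, kth=k - 1, axis=1)[:, :k]
    # argpartition leaves the k candidates unordered; sort them by distance.
    order = np.take_along_axis(d2, idx, axis=1).argsort(axis=1)
    I = np.take_along_axis(idx, order, axis=1)
    D = np.take_along_axis(d2, I, axis=1)
    return D, I


rng = np.random.default_rng(0)
xb = rng.standard_normal((10_000, 64)).astype("float32")  # database vectors
xq = xb[:5] + 0.01                                         # queries near rows 0-4
D, I = knn_l2(xb, xq, k=4)
```

With Faiss itself, the same search is `index = faiss.IndexFlatL2(64)`, `index.add(xb)`, then `D, I = index.search(xq, 4)` on float32 arrays.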

llm.js is a Node.js module providing inference APIs for large language models, with a simple CLI.

It is powered by node-mlx, a machine learning framework for Node.js.

Inspectus is a versatile visualization tool for machine learning. It runs smoothly in Jupyter notebooks via an easy-to-use Python API.

Hugging Face has created an LLM Evaluation Guidebook. It covers the different ways you can evaluate a model, guides on designing your own evaluations, and tips and tricks from practical experience.

Whether you work with production models or are a researcher or a hobbyist, I hope you'll find what you need; and if not, open an issue (to suggest improvements or missing resources) and I'll complete the guide!

The guide targets two personas:

Beginner user: If you don't know anything about evaluation, start with the Basics sections in each chapter before diving deeper. You'll also find explanations of important LLM topics in General knowledge, for example how model inference works and what tokenization is.

Advanced user: The more practical sections are the Tips and Tricks ones and the Troubleshooting chapter. You'll also find interesting material in the Designing sections.

Sometimes you just want to learn how a codebase works and how to integrate it, without spending hours sifting through the code itself.

sage is like an open-source GitHub Copilot with the most up-to-date information about your repo.

Features:

Dead-simple set-up. Run two scripts and you have a functional chat interface for your code. That's really it.

Heavily documented answers. Every response shows where in the code the context for the answer was pulled from. Let's build trust in the AI.

Runs locally or on the cloud.

Plug-and-play. Want to improve the algorithms powering the code understanding/generation? We've made every component of the pipeline easily swappable. Google-grade engineering standards allow you to customize to your heart's content.
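The "heavily documented answers" feature boils down to retrieval that keeps track of where each piece of context came from. The toy sketch below splits files into line-numbered chunks, ranks them against a question, and returns `file:start-end` citations. The simple token-overlap scoring is a stand-in chosen for brevity, not sage's actual retrieval pipeline, and all names here are hypothetical.

```python
# Toy retrieval with source citations: chunk files by line ranges, rank chunks
# by shared-token count with the question, and cite file:line ranges.
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    path: str
    start: int  # first line, 1-indexed
    end: int    # last line, inclusive
    text: str


def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def chunk_file(path: str, source: str, size: int = 20) -> list[Chunk]:
    lines = source.splitlines()
    return [
        Chunk(path, i + 1, min(i + size, len(lines)), "\n".join(lines[i : i + size]))
        for i in range(0, len(lines), size)
    ]


def retrieve(question: str, chunks: list[Chunk], top_k: int = 3) -> list[str]:
    """Return citations like 'auth.py:1-2' for the best-matching chunks."""
    q = tokenize(question)
    scored = []
    for c in chunks:
        overlap = sum((q & tokenize(c.text)).values())  # shared-token count
        if overlap:
            scored.append((overlap, c))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [f"{c.path}:{c.start}-{c.end}" for _, c in scored[:top_k]]


repo = {
    "db.py": "def connect(url):\n    return open_connection(url)\n",
    "auth.py": "def login(user, password):\n    return check_password(user, password)\n",
}
chunks = [c for path, src in repo.items() for c in chunk_file(path, src)]
citations = retrieve("How does login check the password?", chunks)
```

A real system would swap the lexical scorer for embeddings and pass the cited chunks to an LLM, but the citation bookkeeping stays the same, which is what lets every answer point back to the code it relied on.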

Locality alignment is a post-training stage that helps vision transformers (ViTs) learn to extract local image semantics (i.e., class contents for each image region). We use an efficient fine-tuning procedure to teach this capability using only self-supervision — masked embedding self-consistency (MaskEmbed). These techniques are introduced in this paper.

Locality alignment is useful for pre-trained backbones like CLIP and SigLIP that are only exposed to image-level supervision (e.g., image-caption pairs) instead of dense, region-level supervision. Our paper shows improved performance on a local feature probing benchmark, and better benchmark performance in vision-language models. See our repositories below for usage in these tasks: