Eight Things to Know about Large Language Models

SelfRec, Pipeline RL, TRL, Knowledge Pack, DataHerald

Articles

Samuel Bowman has an interesting paper on the properties of LLMs and where the field is heading. He argues that the following eight claims about LLMs hold:

LLMs predictably get more capable with increasing investment, even without targeted innovation.

Many important LLM behaviors emerge unpredictably as a byproduct of increasing investment.

LLMs often appear to learn and use representations of the outside world.

There are no reliable techniques for steering the behavior of LLMs.

Experts are not yet able to interpret the inner workings of LLMs.

Human performance on a task isn’t an upper bound on LLM performance.

LLMs need not express the values of their creators nor the values encoded in web text.

Brief interactions with LLMs are often misleading.

I expand on each of these claims below:

1. LLMs Predictably Get More Capable With Increasing Investment, Even Without Targeted Innovation

A foundational insight is that the capabilities of LLMs improve predictably as a function of scale, measured along three primary dimensions:

Model size (number of parameters)

Training data volume

Compute used for training (FLOPs)

This relationship is formalized in the scaling-laws literature, which shows that as these dimensions increase, the model’s performance on a broad range of language tasks improves smoothly and predictably, often following power-law trends.

For example, the progression from GPT to GPT-2 to GPT-3 involved relatively minor architectural changes but massive increases in training data and compute (roughly 20,000× more compute for GPT-3 than for the original GPT), resulting in qualitative leaps in capability. GPT-4 continued this trend, outperforming humans on many professional exams.

This predictable scaling has several consequences:

Predictability: Scaling laws allow researchers to estimate performance improvements before training expensive models, reducing trial-and-error.

Economic justification: The ability to forecast returns on investment has driven multi-billion-dollar funding rounds.

Limited innovation needed: Most gains come from investing more compute/data rather than fundamentally new architectures or training algorithms.
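To make the idea of predictable scaling concrete, here is a minimal sketch of how a power-law trend can be fitted to small training runs and extrapolated to a larger one. The data is synthetic and the constants (5.0 and 0.05) are made up for illustration; the function names are hypothetical, and the pure power-law form ignores the irreducible-loss offset that published scaling laws typically include.

```python
import math

def fit_power_law(compute, loss):
    """Fit loss ≈ a * compute**(-b) by ordinary least squares in log-log space."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    # log(loss) = log(a) - b * log(compute), so a = exp(intercept) and b = -slope
    return math.exp(intercept), -slope

def predict_loss(a, b, compute):
    """Extrapolate the fitted trend to a new compute budget."""
    return a * compute ** (-b)

# Synthetic "small runs" (assumed values, not real measurements).
small_runs_compute = [1e17, 1e18, 1e19, 1e20]
small_runs_loss = [5.0 * c ** -0.05 for c in small_runs_compute]

a, b = fit_power_law(small_runs_compute, small_runs_loss)
predicted = predict_loss(a, b, 1e23)  # forecast for a 1000x larger run
print(f"a={a:.3f}, b={b:.3f}, predicted loss at 1e23 FLOPs: {predicted:.3f}")
```

The point of the sketch is the workflow rather than the numbers: a handful of cheap runs pins down the trend, and the expensive run is forecast before it is trained.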

2. Many Important LLM Behaviors Emerge Unpredictably as a Byproduct of Increasing Investment

While overall performance improves predictably, specific capabilities often emerge abruptly and unpredictably once the model crosses certain scale thresholds. Such capabilities are called emergent abilities. Examples observed in LLMs include:

Few-shot learning: The ability to perform new tasks from just a few examples in the prompt, which was not present in smaller models.

Chain-of-thought reasoning: The capacity to generate step-by-step reasoning improving performance on complex tasks.

These emergent abilities have the following consequences:

Uncertainty: Developers know that larger models will be better overall but cannot reliably predict which new skills will appear or when.

“Mystery box” effect: Investing in larger models is akin to buying a black box with unknown but potentially valuable new capabilities.

Planning challenges: Responsible deployment and preparation for novel capabilities require flexibility and ongoing monitoring.
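The two emergent abilities named in this section, few-shot learning and chain-of-thought reasoning, are both elicited purely through the prompt. The sketch below shows one way such prompts might be assembled; the function name, the `Q:`/`A:` layout, and the example data are illustrative assumptions, not a format prescribed by the paper.

```python
def build_few_shot_prompt(examples, question, chain_of_thought=False):
    """Assemble a prompt from worked examples followed by the new question.

    With chain_of_thought=True, each example answer includes its reasoning,
    nudging the model to reason step by step before answering.
    """
    parts = []
    for ex in examples:
        parts.append(f"Q: {ex['question']}")
        if chain_of_thought:
            parts.append(f"A: {ex['reasoning']} The answer is {ex['answer']}.")
        else:
            parts.append(f"A: {ex['answer']}")
        parts.append("")  # blank line between examples
    parts.append(f"Q: {question}")
    parts.append("A:")  # left open for the model to complete
    return "\n".join(parts)

# Hypothetical worked examples.
examples = [
    {"question": "What is 2 + 3?", "reasoning": "2 plus 3 makes 5.", "answer": "5"},
    {"question": "What is 10 - 4?", "reasoning": "Taking 4 away from 10 leaves 6.", "answer": "6"},
]

plain_prompt = build_few_shot_prompt(examples, "What is 7 + 5?")
cot_prompt = build_few_shot_prompt(examples, "What is 7 + 5?", chain_of_thought=True)
print(cot_prompt)
```

Whether a given model actually benefits from either prompt style depends on its scale, which is exactly the unpredictability this section describes.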

3. LLMs Often Appear to Learn and Use Representations of the Outside World

Despite being trained solely to predict text drawn from a variety of sources, LLMs develop internal representations that correspond to real-world concepts and abstractions:

Semantic representations: Models encode color concepts in ways that align with human perception.

Theory of mind: LLMs can infer what an author knows or believes and use this to predict text continuation.

Spatial and object representations: Models track properties and locations of objects in stories, sometimes representing spatial layouts.

Visual reasoning: Even without direct visual training, models like GPT-4 can generate instructions in graphics languages to draw objects.

Game state tracking: Models trained on textual game move descriptions learn internal representations of board states.

Common sense and fact-checking: LLMs can distinguish misconceptions from facts and estimate claim plausibility.

Passing reasoning tests: LLMs perform well on benchmarks like the Winograd Schema Challenge, which require commonsense reasoning beyond surface text cues.

These capabilities have the following implications:

Beyond next-word prediction: While technically LLMs predict text, their learned representations enable abstract reasoning and world modeling.

Weak but growing: These abilities are currently imperfect and sporadic but improve with scale and training innovations.

Augmentation: Integration with vision models and external tools further enhances world understanding.

4. There Are No Reliable Techniques for Steering the Behavior of LLMs

LLMs are pretrained to predict text continuations, but practical applications require them to follow instructions or behave in desired ways. Steering model behavior is challenging:

Fine-tuning and instruction tuning: Adjusting model weights on specialized datasets can improve alignment but is costly and imperfect.

Prompt engineering: Carefully crafting input prompts can guide outputs but is brittle and often unreliable.

Reinforcement learning from human feedback (RLHF): Human preferences guide model outputs but cannot guarantee consistent behavior.

Lack of interpretability: Without understanding internal mechanisms, it is hard to predict or guarantee model responses.

These limitations raise the following issues:

Unpredictability: Models can produce harmful, biased, or nonsensical outputs despite steering attempts.

Safety concerns: Deploying LLMs safely requires extensive monitoring and fallback mechanisms.

Research gap: Developing robust, reliable steering methods remains a major open challenge.
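To make the RLHF item in this section slightly more concrete: one common way to turn human preferences into a training signal is a pairwise loss on a reward model, which is pushed to score the preferred response above the rejected one. The sketch below assumes that Bradley-Terry style formulation; the article does not specify it, and the function names and reward values are hypothetical.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)), computed stably."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); rewritten to avoid overflow for large |m|
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

def mean_preference_loss(pairs):
    """Average the pairwise loss over (reward_chosen, reward_rejected) pairs."""
    return sum(preference_loss(c, r) for c, r in pairs) / len(pairs)

# Hypothetical reward-model scores for three human-labelled comparisons.
pairs = [(1.2, 0.3), (0.1, 0.4), (2.0, -1.0)]
print(f"mean loss: {mean_preference_loss(pairs):.4f}")
```

The loss only encourages a ranking on the comparisons that were collected, which is one way to see why this kind of steering improves behavior on average without guaranteeing it everywhere.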

5. Experts Are Not Yet Able to Interpret the Inner Workings of LLMs

LLMs are deep neural networks with billions of parameters and highly distributed representations:

Opaque internals: The learned weights and activations do not correspond to human-understandable concepts.

Lack of interpretability tools: Current methods (e.g., attention visualization, neuron activation analysis) provide limited insight.

Complex emergent phenomena: Behaviors arise from interactions of many components, not isolated modules.

Ongoing research: Efforts in mechanistic interpretability aim to reverse-engineer model reasoning but are nascent.

These limitations raise the following issues:

Black-box nature: Understanding why a model produces a certain output is difficult.

Trust and accountability: Lack of interpretability complicates debugging, auditing, and regulatory compliance.

Safety risks: Hidden failure modes and biases may go undetected.

6. Human Performance on a Task Isn’t an Upper Bound on LLM Performance

LLMs have demonstrated superhuman performance on many benchmarks:

Standardized exams: GPT-4 outperforms average qualified humans on the bar exam, SAT, and other professional tests.

Speed and scale: LLMs can process and generate text orders of magnitude faster than humans.

Novel capabilities: Some tasks, like large-scale code generation or multi-document synthesis, exceed typical human abilities.

This has the following implications:

Revising expectations: Human benchmarks are not ceilings; LLMs can surpass human performance in many domains.

New applications: This opens possibilities for automating complex cognitive tasks.

Ethical considerations: Superhuman performance raises questions about job displacement, decision-making authority, and AI governance.

7. LLMs Need Not Express the Values of Their Creators Nor the Values Encoded in Web Text

LLMs are trained on vast datasets scraped from the internet, which contain diverse and often conflicting viewpoints, biases, and cultural values:

Value misalignment: Models may generate outputs that do not reflect the ethical or normative values of developers or society.

Bias amplification: Models can reproduce or amplify harmful stereotypes present in training data.

Steering attempts: Efforts to align models with human values (e.g., RLHF) are imperfect and context-dependent.

Value pluralism: The multiplicity of values in training data means models may express contradictory or incoherent value systems.

These properties raise the following issues:

Ethical challenges: Ensuring that LLMs behave in socially acceptable ways is complex.

Governance needs: Transparency, auditing, and stakeholder engagement are critical.

Customization: Tailoring models to domain- or community-specific values may be necessary.

8. Brief Interactions with LLMs Are Often Misleading

Users often form impressions of LLMs based on short, anecdotal interactions, which can be deceptive:

Overestimation: Early impressive outputs may lead users to overestimate model understanding or reliability.

Inconsistency: Models can produce contradictory or erroneous answers on repeated queries.

Context sensitivity: Small changes in prompts or conversation history can drastically alter outputs.

Evaluation difficulty: Measuring true model capabilities requires systematic, large-scale testing rather than isolated demos.

These pitfalls have the following implications:

Caution in interpretation: Users and policymakers should avoid drawing conclusions from limited interactions.

Need for rigorous evaluation: Benchmarking and adversarial testing provide more reliable assessments.

User education: Training users to understand model limitations is essential for responsible use.
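The contrast between a single impressive demo and systematic testing can be sketched in a few lines. The example below uses a toy stand-in for a model (hypothetical, with made-up probabilities) whose accuracy depends on prompt wording, then samples it repeatedly across paraphrases and reports accuracy and self-consistency per prompt.

```python
import random
from collections import Counter

def toy_model(prompt, rng):
    """Hypothetical stand-in for an LLM call: usually right, but wording-sensitive."""
    p_correct = 0.9 if prompt.startswith("What is") else 0.6
    return "4" if rng.random() < p_correct else "5"

def evaluate(model, prompts, expected, samples_per_prompt=200, seed=0):
    """Sample each prompt many times and summarize accuracy and consistency."""
    rng = random.Random(seed)
    report = {}
    for prompt in prompts:
        answers = Counter(model(prompt, rng) for _ in range(samples_per_prompt))
        _, top_count = answers.most_common(1)[0]
        report[prompt] = {
            "accuracy": answers[expected] / samples_per_prompt,
            # share of samples agreeing with the most frequent answer
            "consistency": top_count / samples_per_prompt,
        }
    return report

paraphrases = ["What is 2 + 2?", "2 + 2 equals?"]
report = evaluate(toy_model, paraphrases, expected="4")
for prompt, stats in report.items():
    print(prompt, stats)
```

A single call to `toy_model` would most likely return the right answer and look convincing; only the repeated, paraphrased sampling exposes the inconsistency and prompt sensitivity listed above.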

Overall, the paper makes interesting observations about LLMs along eight dimensions, covering both strengths and weaknesses worth paying attention to.

Libraries

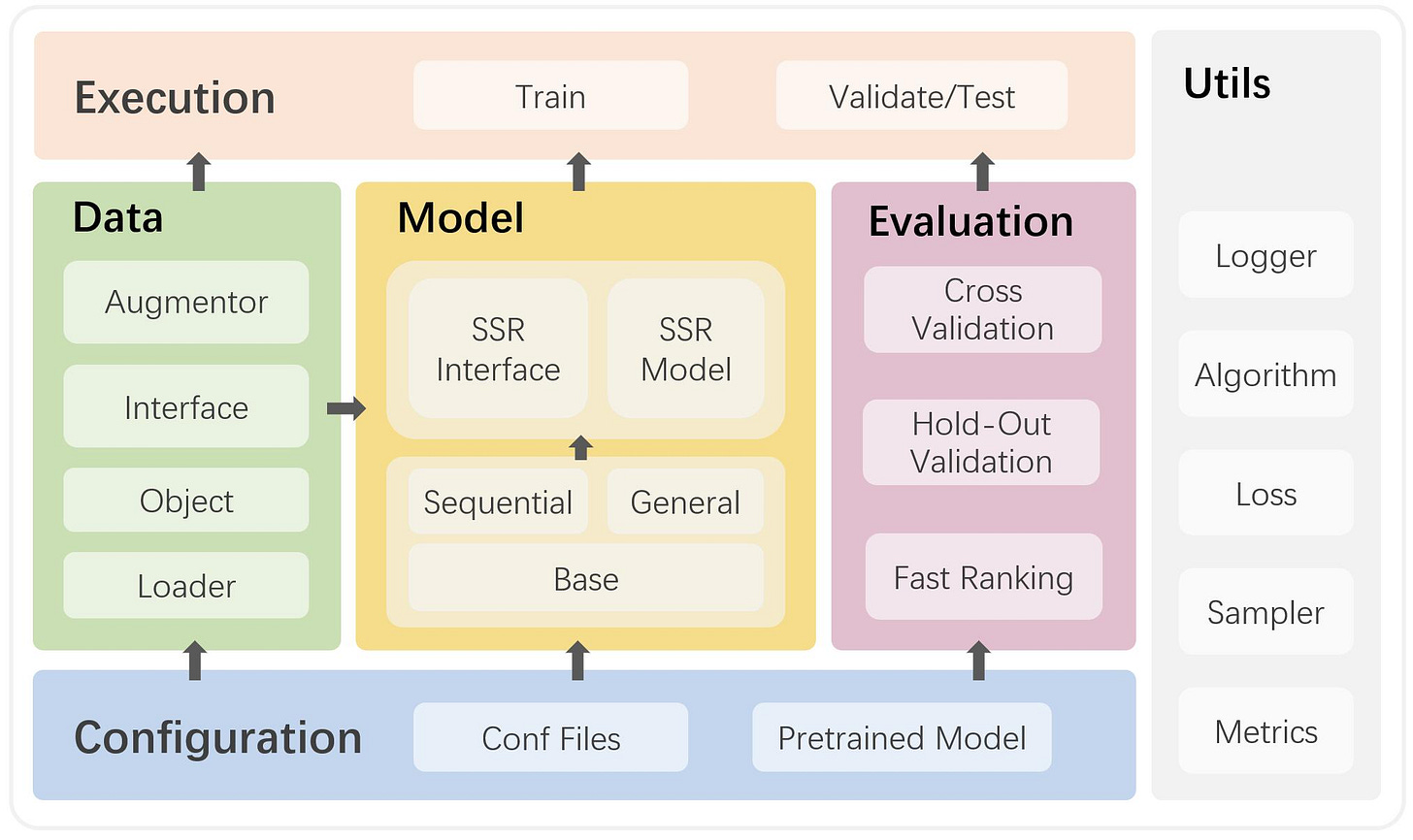

SELFRec is a Python framework for self-supervised recommendation (SSR) which integrates commonly used datasets and metrics, and implements many state-of-the-art SSR models. SELFRec has a lightweight architecture and provides user-friendly interfaces. It can facilitate model implementation and evaluation.

Pipeline RL is a scalable asynchronous reinforcement learning implementation with in-flight weight updates. It is designed to maximize GPU utilization while staying as on-policy as possible.

TRL is a cutting-edge library designed for post-training foundation models using advanced techniques like Supervised Fine-Tuning (SFT), Proximal Policy Optimization (PPO), and Direct Preference Optimization (DPO). Built on top of the 🤗 Transformers ecosystem, TRL supports a variety of model architectures and modalities, and can be scaled up across various hardware setups.

FramePack is a next-frame (next-frame-section) prediction neural network structure that generates videos progressively.

FramePack compresses input contexts to a constant length so that the generation workload is invariant to video length.

FramePack can process a very large number of frames with 13B models even on laptop GPUs.

FramePack can be trained with a much larger batch size, similar to the batch size for image diffusion training.

Knowledge Graph Attention Network (KGAT) is a new recommendation framework tailored to knowledge-aware personalized recommendation. Built upon the graph neural network framework, KGAT explicitly models the high-order relations in the collaborative knowledge graph to provide better recommendations using item side information.

Dataherald is a natural language-to-SQL engine built for enterprise-level question answering over relational data. It allows you to set up an API from your database that can answer questions in plain English. You can use Dataherald to:

Allow business users to get insights from the data warehouse without going through a data analyst

Enable Q+A from your production DBs inside your SaaS application

Create a ChatGPT plug-in from your proprietary data

NeuralNote is the audio plugin that brings state-of-the-art Audio to MIDI conversion into your favorite Digital Audio Workstation.

Works with any tonal instrument (voice included)

Supports polyphonic transcription

Supports pitch bend detection

Lightweight and very fast transcription

Lets you adjust the parameters while listening to the transcription

Lets you scale and time-quantize transcribed MIDI directly in the plugin

AudioCraft is a PyTorch library for deep learning research on audio generation. AudioCraft contains inference and training code for two state-of-the-art AI generative models producing high-quality audio: AudioGen and MusicGen.

mlx-audio is a text-to-speech (TTS) and Speech-to-Speech (STS) library built on Apple's MLX framework, providing efficient speech synthesis on Apple Silicon.

Below The Fold

Posting is an HTTP client, not unlike Postman and Insomnia. As a TUI application, it can be used over SSH and enables efficient keyboard-centric workflows. Your requests are stored locally in simple YAML files, so they're easy to read and version control.

With CubeCL, you can program your GPU using Rust, taking advantage of zero-cost abstractions to develop maintainable, flexible, and efficient compute kernels. CubeCL currently fully supports functions, generics, and structs, with partial support for traits, methods and type inference. As the project evolves, we anticipate even broader support for Rust language primitives, all while maintaining optimal performance.

LazyDocker is a simple terminal UI for both docker and docker-compose, written in Go with the gocui library.

Prefect is a workflow orchestration framework for building data pipelines in Python. It's the simplest way to elevate a script into a production workflow. With Prefect, you can build resilient, dynamic data pipelines that react to the world around them and recover from unexpected changes.

With just a few lines of code, data teams can confidently automate any data process with features such as scheduling, caching, retries, and event-based automations.

DrawDB is a robust and user-friendly database entity relationship (DBER) editor right in your browser. Build diagrams with a few clicks, export SQL scripts, customize your editor, and more without creating an account.

Index is the SOTA open-source browser agent for autonomously executing complex tasks on the web.

UIT is a library for performant, modular, low-memory file processing at scale, in the Cloud. It works by offering a 4-step process to gather a file hierarchy from any desired modality, apply filters and transformations, and output it in any desired modality.

performance: speed is of the essence when navigating and searching through large amounts of data

low-memory: by applying streaming and parallelization, it can run in low-memory environments such as Cloudflare Workers

modular: composability gives a clear high-level overview of all building blocks. Also, not all building blocks can be run in the same runtime or location.